Hi everyone!

I am using ResNet18 for a deep learning project on CIFAR10. However, I want to feed grayscale versions of the CIFAR10 images to the network. When I change the expected number of input channels to 1 and the number of classes from 1000 to 10, I get output shapes that I don't understand. Here is my code:

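```python
import torch.nn as nn
import torchvision.models as PyTorchModels
from torchsummary import summary

r = PyTorchModels.resnet18()

num_in_channels = 1                        # grayscale input instead of RGB
num_out_channels = r.conv1.out_channels    # 64
size_kernel = r.conv1.kernel_size          # (7, 7)
num_in_features = r.fc.in_features         # 512
classes = 10                               # CIFAR10

# Replace the first conv layer; stride and padding are not passed here,
# so the nn.Conv2d defaults (stride=1, padding=0) apply
r.conv1 = nn.Conv2d(in_channels=num_in_channels, out_channels=num_out_channels, kernel_size=size_kernel)

# Replace the classifier head: 1000 ImageNet classes -> 10 CIFAR10 classes
r.fc = nn.Linear(in_features=num_in_features, out_features=classes)

model = r

summary(model.cuda(), (1, 32, 32))
```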

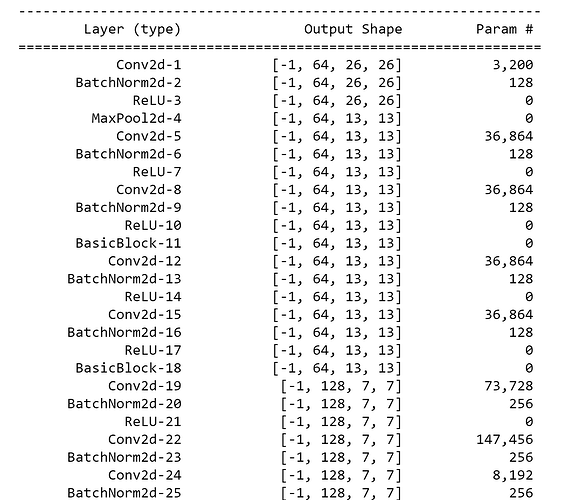

It produces the following output (only the first couple of layers are shown):

Why does the first layer output have shape `[-1, 64, 26, 26]`? Where does the 26 come from? I would expect `[-1, 64, 16, 16]`. More generally, how does the number of `in_channels` (e.g. RGB vs. grayscale) affect the output shape?
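For reference, the `torch.nn.Conv2d` documentation gives the output size of each spatial dimension as floor((H + 2·padding − dilation·(kernel_size − 1) − 1) / stride + 1). The sketch below evaluates that formula in plain Python, assuming square inputs and square kernels; the helper names `conv2d_out_size`, `conv2d_out_shape` and `conv2d_weight_count` are hypothetical and not part of PyTorch. It shows that the spatial size depends only on kernel size, stride and padding, while `in_channels` only changes the number of weights.

```python
import math


def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    # Output-size formula from the torch.nn.Conv2d docs, for one spatial dimension
    return math.floor((size + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1)


def conv2d_out_shape(in_shape, in_channels, out_channels, kernel_size, stride=1, padding=0):
    # in_shape is (C, H, W) as passed to torchsummary; the batch dim is shown as -1
    c, h, w = in_shape
    assert c == in_channels, "input channels must match the layer's in_channels"
    h_out = conv2d_out_size(h, kernel_size, stride, padding)
    w_out = conv2d_out_size(w, kernel_size, stride, padding)
    # in_channels does not appear in the spatial formula at all
    return (-1, out_channels, h_out, w_out)


def conv2d_weight_count(in_channels, out_channels, kernel_size, bias=True):
    # Weight tensor has shape (out_channels, in_channels, k, k); this is where in_channels matters
    n = out_channels * in_channels * kernel_size * kernel_size
    return n + (out_channels if bias else 0)


# Replacement layer as written in the question: default stride=1, padding=0
print(conv2d_out_shape((1, 32, 32), 1, 64, 7))                      # (-1, 64, 26, 26)
# Same layer with ResNet18's original conv1 settings (stride=2, padding=3)
print(conv2d_out_shape((1, 32, 32), 1, 64, 7, stride=2, padding=3))  # (-1, 64, 16, 16)
# RGB input with the same settings gives the same output shape
print(conv2d_out_shape((3, 32, 32), 3, 64, 7, stride=2, padding=3))  # (-1, 64, 16, 16)
# Only the parameter count changes with in_channels (original conv1 uses bias=False)
print(conv2d_weight_count(1, 64, 7, bias=False))  # 3136
print(conv2d_weight_count(3, 64, 7, bias=False))  # 9408
```

Under these assumptions, 32 − 7 + 1 = 26 explains the observed shape. torchvision's `resnet18` builds `conv1` as `nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)`, so passing `stride=r.conv1.stride`, `padding=r.conv1.padding` and `bias=False` when constructing the replacement layer should restore the 16x16 output.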

Any help is very much appreciated!

All the best

snowe