I have this problem although I reduced the batch size of my dataset:

RuntimeError: [enforce fail at …\c10\core\CPUAllocator.cpp:79] data. DefaultCPUAllocator: not enough memory: you tried to allocate 754974720 bytes.

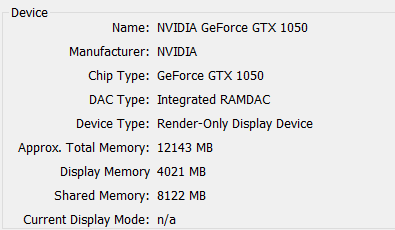

How much host RAM does your setup have? The requested 754974720 bytes correspond to 720 MiB (about 755 MB), so it seems your RAM size is quite limited or most of it is already in use.
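As a quick sanity check, the byte count from the error message can be converted to binary mebibytes (MiB, 2**20 bytes) and decimal megabytes (MB, 10**6 bytes). This is plain arithmetic and does not depend on PyTorch:

```python
def bytes_to_units(n_bytes: int) -> dict:
    """Express a byte count in binary mebibytes (MiB) and decimal megabytes (MB)."""
    return {"MiB": n_bytes / 2**20, "MB": n_bytes / 1e6}

requested = 754_974_720  # allocation size reported by DefaultCPUAllocator
sizes = bytes_to_units(requested)
print(f"{sizes['MiB']:.0f} MiB ~ {sizes['MB']:.0f} MB")  # prints "720 MiB ~ 755 MB"
```

Note that this is only the size of the single failing allocation; the process may already hold much more memory at that point.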

I run this model (RED-WGAN/preprocessing.py on the master branch of Deep-Imaging-Group/RED-WGAN on GitHub) on the CPU and have 16 GB of RAM. My data volume is huge, so even though I have an Nvidia GPU, I always get this memory error.

You could try to increase the swap (the page file on Windows), which would result in a significant slowdown, but you might at least be able to allocate the needed memory.

The better approach would most likely be to reduce the memory usage on this system, e.g. by lowering the batch size.
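To see why lowering the batch size helps, here is a rough sketch of how the memory of a single float32 input batch scales linearly with the batch size. The per-sample shape `(1, 64, 64, 64)` is a hypothetical single-channel 3D patch chosen for illustration, not a value taken from the RED-WGAN code, and activations and gradients add further memory on top of this:

```python
from math import prod

FLOAT32_BYTES = 4  # each float32 element occupies 4 bytes

def batch_bytes(batch_size: int, sample_shape: tuple) -> int:
    """Bytes needed to hold one float32 input batch with the given per-sample shape."""
    return batch_size * prod(sample_shape) * FLOAT32_BYTES

# Hypothetical per-sample shape (channels, depth, height, width); an assumption.
patch = (1, 64, 64, 64)
for bs in (32, 16, 8):
    # Halving the batch size halves the memory of the input batch.
    print(bs, batch_bytes(bs, patch) / 2**20, "MiB")
```

With this assumed shape, batch sizes 32, 16 and 8 need 32, 16 and 8 MiB respectively for the input tensor alone.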

I’m unsure if you are preloading the entire dataset, but if you are, you might want to lazily load each sample instead.
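A minimal sketch of lazy loading, assuming (hypothetically) that each sample has been saved as its own `.npy` file. Only the file paths are kept in memory and each volume is read from disk in `__getitem__`. The class name `LazyVolumeDataset` and the file layout are illustrative assumptions, not part of RED-WGAN. The class implements the `__len__`/`__getitem__` map-style protocol that `torch.utils.data.DataLoader` accepts:

```python
import numpy as np

class LazyVolumeDataset:
    """Map-style dataset that loads one .npy sample from disk on demand.

    Only the list of file paths lives in RAM; each volume is read inside
    __getitem__, so memory is used only for the samples currently being loaded.
    """

    def __init__(self, paths):
        self.paths = list(paths)  # hypothetical per-sample .npy files

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, idx):
        # Read a single sample only when the loader asks for it.
        return np.load(self.paths[idx]).astype(np.float32)
```

It could then be wrapped as `DataLoader(LazyVolumeDataset(paths), batch_size=8, shuffle=True)`; the default collate function converts the NumPy arrays to tensors. If the data is stored as one large `.npy` file instead, `np.load(path, mmap_mode="r")` is another way to avoid reading it all into RAM at once.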