I am training a model with PyTorch and have already added save and resume functionality. The problem is that when I resume and try to overwrite earlier step values in TensorBoard, the graph gets messed up: it draws a line connecting the last step of the previous run to the current step.

For example, I log scalars to TensorBoard every 10 iterations and save the model every 100 iterations, where one iteration equals one step.

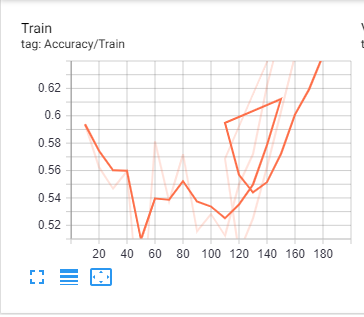

In the image above, I trained the model for 150 iterations (so there are 15 points in the TensorBoard graph), then resumed training from the 100-iteration checkpoint and started logging again from step 100. I expected the new run to overwrite every point from step 110 onward, but instead the graph connects the last point (step 150) to step 110. How can I overwrite all steps beyond step 100 and fix the graph?
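To make the scenario concrete, here is a minimal sketch in plain Python (no TensorBoard involved) of which `global_step` values end up in the log. The helper `logged_steps` and the 30 iterations for the resumed run are hypothetical, chosen only for illustration; the logging condition mirrors the one in my code.

```python
LOG_EVERY = 10  # scalars are logged every 10 iterations


def logged_steps(old_iterations, num_iterations):
    """Return the global_step values one run would log (hypothetical helper)."""
    steps = []
    for i in range(num_iterations + 1):
        step = old_iterations + i
        # Same condition as in the logging code: skip the very first iteration
        if step % LOG_EVERY == 0 and i != 0:
            steps.append(step)
    return steps


# First run: 150 iterations from scratch -> steps 10, 20, ..., 150 (15 points)
first_run = logged_steps(old_iterations=0, num_iterations=150)

# Resumed run: starts from the checkpoint at iteration 100.
# The 30 iterations here are an assumption for illustration.
resumed_run = logged_steps(old_iterations=100, num_iterations=30)

# Events in the order they were written, and the steps that were written twice
event_order = first_run + resumed_run
overlap = sorted(set(first_run) & set(resumed_run))

print(event_order[-5:])  # [140, 150, 110, 120, 130]
print(overlap)           # [110, 120, 130]
```

So the old points for steps 110 to 150 are still in the log when the resumed run writes step 110 again, which seems to be why the line jumps back from 150 to 110.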

Code that logs to TensorBoard:

    # Log the running averages every 10 iterations (skip i == 0)
    if (OLD_ITERATIONS + i) % 10 == 0 and i != 0:
        wirter.add_scalar(tag='Accuracy/Train', scalar_value=accs/10, global_step=OLD_ITERATIONS+i)
        wirter.add_scalar(tag='Accuracy/Valid', scalar_value=val_accs/10, global_step=OLD_ITERATIONS+i)
        wirter.add_scalar(tag='Loss/Train', scalar_value=losses/10, global_step=OLD_ITERATIONS+i)
        wirter.add_scalar(tag='Loss/Valid', scalar_value=val_losses/10, global_step=OLD_ITERATIONS+i)
        # Reset the accumulators for the next 10 iterations
        accs = 0
        losses = 0
        val_accs = 0
        val_losses = 0