Hi there,

I am training an image classification network. When I train it for, say, 10 epochs, the train loss decreases smoothly, as I would expect. However, when I save that model, load it on a different machine (same hardware and software versions), and resume training for another 5 epochs, I get a massive spike in the train loss. If I load the same model on the machine that ran the first training, the loss keeps decreasing smoothly.

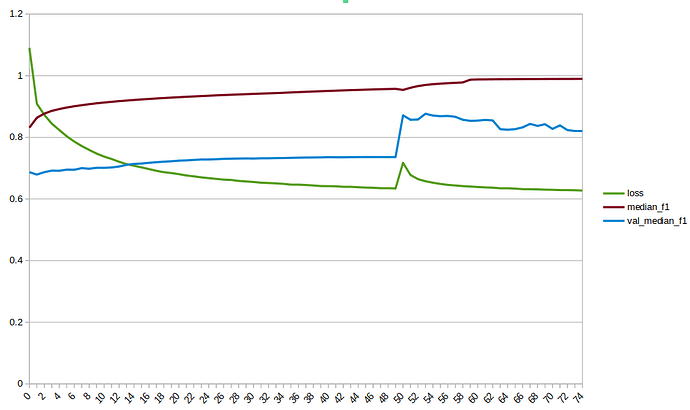
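To make the save/resume step concrete, here is a minimal sketch of such a checkpoint round trip. It is an illustration under assumptions, not necessarily the exact code used here: `save_checkpoint` and `load_checkpoint` are hypothetical helper names, and storing the optimizer and CPU RNG state next to the weights is just one possible setup.

```python
import torch


def save_checkpoint(path, model, optimizer, epoch):
    """Hypothetical helper: store everything needed to resume training."""
    torch.save(
        {
            "epoch": epoch,
            "model": model.state_dict(),
            "optimizer": optimizer.state_dict(),  # e.g. momentum buffers
            "torch_rng": torch.get_rng_state(),   # CPU RNG state only
        },
        path,
    )


def load_checkpoint(path, model, optimizer):
    """Hypothetical helper: restore the state written by save_checkpoint."""
    ckpt = torch.load(path, map_location="cpu")
    model.load_state_dict(ckpt["model"])
    optimizer.load_state_dict(ckpt["optimizer"])
    torch.set_rng_state(ckpt["torch_rng"])
    return ckpt["epoch"]
```

If only the model weights are restored, the optimizer restarts from a fresh state, which is one thing worth ruling out when comparing the two machines.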

Mysteriously, the resulting networks always perform significantly better on validation and test data when stopped and resumed on a different machine (compared to training for the same total number of epochs on one machine). This can be seen in the image below, where I trained for 50 epochs and resumed for another 25 epochs on another machine. The training loss returns to approximately where it was, but validation quality increases a lot!

Any ideas what could cause this? (And how could I do this on purpose?)

Details:

- I set `torch.backends.cudnn.benchmark = False` and `torch.use_deterministic_algorithms(True)`, and set the environment variable `CUBLAS_WORKSPACE_CONFIG` to `:4096:8`

- I did not set seeds, but I do not think that missing seeding explains the different behavior on different machines

- PyTorch versions are equal (same Docker image)

- Resuming on the same machine invokes the same code in a fresh Docker container, just as when resuming on the other machine
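
The determinism settings listed above can be sketched as follows. This is a minimal sketch, not the exact training script: `configure_determinism` and its optional `seed` argument are hypothetical, added only to show where seeding would go if it were enabled (the runs described here did not set seeds).

```python
import os
import random

# Should be set before the first cuBLAS call; value as given in the list above.
os.environ["CUBLAS_WORKSPACE_CONFIG"] = ":4096:8"

import torch


def configure_determinism(seed=None):
    """Hypothetical helper collecting the settings from the list above."""
    torch.backends.cudnn.benchmark = False
    torch.use_deterministic_algorithms(True)
    if seed is not None:
        # Not done in the runs described above; shown for completeness.
        random.seed(seed)
        torch.manual_seed(seed)  # seeds the CPU and all CUDA generators


configure_determinism()  # seed=None mirrors the unseeded setup
```

Calling `configure_determinism(seed=0)` instead would additionally fix the Python and PyTorch random number generators, which is one way to check whether the missing seeds play any role.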