While fine-tuning a decoder only LLM like LLaMA on chat dataset, what kind of padding should one use?

Many papers use Left Padding, but is right padding wrong since transformers gives the following warning if using right padding " A decoder-only architecture is being used, but right-padding was detected! For correct generation results, please set padding_side='left' when initializing the tokenizer."

I would simply assume the model was pretrained with left-padded input, so it seems reasonable to adhere to it for fine-tuning.

Left-padding feels also more intuitive ![]()

Thanks for the reply, foundational models like LLaMA are usually trained without padding.

It is mostly used during finetuning, also can you please tell why left padding feels more intuitive? Thanks!

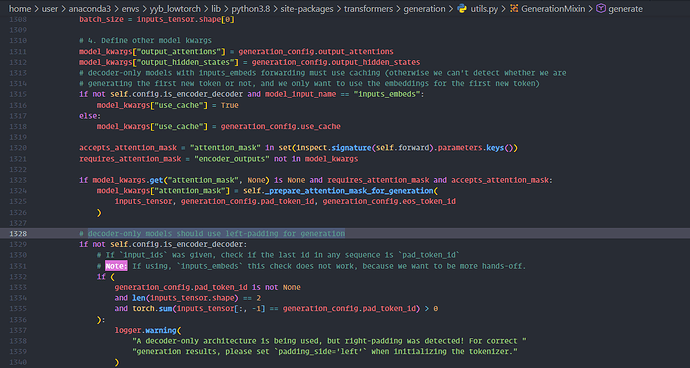

I’m concerned about this as well, and the generate() method in the transformers library explicitly suggests that decoder-only models should use the left padding method. I would also like to know the reason for this

I asked the same question on Stack overflow and got a good answer.

transformer - While fine-tuning a decoder only LLM like LLaMA on chat dataset, what kind of padding should one use? - Artificial Intelligence Stack Exchange

I have been thinking about this for quite some time. How do they manage to train without padding if they train with batch of variable length observations?. Or maybe, they just chunk the whole text corpus into size of equal lengths and train like that. In that setup, there is no longer the problem of variable input length. However, is this a correct approach? What if the semantically related sentence ended up in the other batch?

It is the latter, for pre-training LLMs they collect a large corpus and as you said, divide it into segments of equal length. This can bundle two unrelated paragraphs together but when because the models are trained on trillions of tokens it doesn’t affect the model much. Also, there is the other option of masking out the unrelated text.

It’s my understanding that during pre-training, the corpus is “packed” into examples of the same length and for that reason non-instruct models like Llama-3.2-1B do not need a pad token. You can verify this by checking that tokenizer.pad_token is None.

The key question is, what do we do when we want to instruction fine-tune this model? Several threads/conversations I have read online suggest doing this:

tokenizer = AutoTokenizer.from_pretrained(

"meta-llama/Llama-3.2-1B",

chat_template=chat_template,

padding_side="left"

)

if tokenizer.pad_token is None:

tokenizer.pad_token = "<|finetune_right_pad_id|>"

tokenizer.add_special_tokens({'pad_token': "<|finetune_right_pad_id|>"})

that is, setting the pad token as one of several already available special tokens. In particular, rather than using the reserved ones, we can use the "<|finetune_right_pad_id|>". They also suggest padding on the left, meaning that the text will look as follows:

<bos><pad><pad>{{text}}

It’s unclear to me why this is needed. Isn’t it the case that if EOS tokens were on the right, e.g.

<bos>{{text}}<eos><eos>

then the model would just stop at the first EOS?