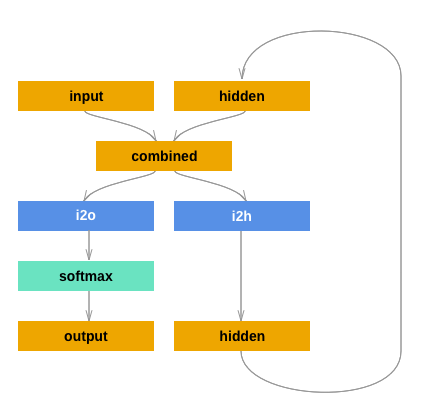

Hello, I am really new to PyTorch. I really like PyTorch because it is so helpful and has good tutorials, although some of them are incomplete. Recently I have been trying to learn RNNs and their variants, such as LSTM and GRU. I followed the tutorial "NLP From Scratch: Classifying Names with a Character-Level RNN" (PyTorch Tutorials 2.2.0+cu121 documentation), where I saw a graph like this:

It is the graph of an RNN. Where can I find a similar guide for implementing LSTM and GRU in PyTorch?

Are there any suggestions on where I can start learning about "stacked LSTM" in PyTorch?

Would it be possible to complete the PyTorch tutorial? It looks like it has not been updated in a long time.

-Thank you-

PyTorch is a library for building models efficiently, so it provides built-in classes for LSTM and GRU. You don't need to write them yourself, although you can always create your own custom class for them. The code and formulas for the LSTM and GRU classes can be found here: https://github.com/pytorch/pytorch/blob/master/torch/nn/modules/rnn.py
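As a rough illustration of the built-in classes, here is a minimal sketch that runs one batch through `nn.LSTM` and `nn.GRU`. The sizes are made up for the example, and `batch_first=True` is my own choice so that the input is laid out as (batch, sequence, features).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Assumed sizes, chosen only for illustration.
batch_size, seq_len, input_size, hidden_size = 3, 5, 10, 16

# A batch of random sequences: (batch, sequence, features).
x = torch.randn(batch_size, seq_len, input_size)

lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
gru = nn.GRU(input_size, hidden_size, batch_first=True)

# The LSTM returns the per-step outputs plus the final hidden and cell states.
lstm_out, (h_n, c_n) = lstm(x)

# The GRU has no cell state, so it returns only the final hidden state.
gru_out, gru_h_n = gru(x)

print(lstm_out.shape, h_n.shape, c_n.shape)
print(gru_out.shape, gru_h_n.shape)
```

Both modules are used the same way; the main difference at the call site is that the LSTM carries a second state tensor.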

I also have some sample code here.

This blog also provides a great breakdown of lstm networks: http://colah.github.io/posts/2015-08-Understanding-LSTMs/

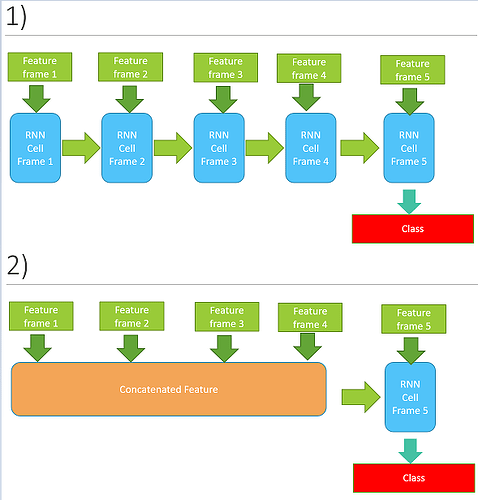

Thank you for the reply. Based on the tutorial links, I want to use this for video classification. Which of the pictures is correct?

My immediate reaction is, obviously the first one, since you have a sequence.

However, if you're always using exactly four frames, you can actually do either, and see which works well for you.

The second version will run faster, since it is more easily parallelizable. The first one runs into Amdahl's law, because the steps have to be processed one after another, and is slow-tastic.

Since you're doing video, the second one is the general format for sequences.

The first one would be nn.LSTMCell.

The second one is the nn.LSTM layer.
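To make the difference concrete, here is a small sketch that steps an `nn.LSTMCell` through a sequence by hand and compares the result with a single `nn.LSTM` call. The sizes and the `frames` tensor are made up (four "frames" of 8 features each), and the cell's weights are copied from the layer only so the two outputs can be compared.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Assumed sizes: 2 clips, 4 frames each, 8 features per frame.
batch_size, seq_len, input_size, hidden_size = 2, 4, 8, 6
frames = torch.randn(batch_size, seq_len, input_size)

lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
cell = nn.LSTMCell(input_size, hidden_size)

# Copy the layer's parameters into the cell so both compute the same function.
with torch.no_grad():
    cell.weight_ih.copy_(lstm.weight_ih_l0)
    cell.weight_hh.copy_(lstm.weight_hh_l0)
    cell.bias_ih.copy_(lstm.bias_ih_l0)
    cell.bias_hh.copy_(lstm.bias_hh_l0)

with torch.no_grad():
    # nn.LSTMCell: one time step per call, so the loop is written by hand.
    h = torch.zeros(batch_size, hidden_size)
    c = torch.zeros(batch_size, hidden_size)
    cell_outputs = []
    for t in range(seq_len):
        h, c = cell(frames[:, t, :], (h, c))
        cell_outputs.append(h)
    cell_out = torch.stack(cell_outputs, dim=1)

    # nn.LSTM: the whole sequence in one call.
    layer_out, _ = lstm(frames)

print(torch.allclose(cell_out, layer_out, atol=1e-5))
```

The cell version is handy when you need to do something between time steps; otherwise the layer is less code.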

Does anyone here know how to implement a stacked LSTM?

Hi, the num_layers argument stacks LSTM layers.
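For example, a two-layer stacked LSTM with a small classification head might look like the sketch below. The class name `StackedLSTMClassifier`, the sizes, and the choice to classify from the top layer's final hidden state are all assumptions for illustration, not something taken from the tutorial.

```python
import torch
import torch.nn as nn


class StackedLSTMClassifier(nn.Module):
    """Hypothetical example: stacked LSTM followed by a linear classifier."""

    def __init__(self, input_size, hidden_size, num_layers, num_classes):
        super().__init__()
        # num_layers > 1 feeds each layer's output sequence into the next layer.
        self.lstm = nn.LSTM(
            input_size, hidden_size, num_layers=num_layers, batch_first=True
        )
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        # h_n has shape (num_layers, batch, hidden_size).
        _, (h_n, _) = self.lstm(x)
        # Use the final hidden state of the top layer as the sequence summary.
        return self.fc(h_n[-1])


torch.manual_seed(0)

# Assumed sizes: 3 sequences of 4 steps with 8 features, 5 output classes.
model = StackedLSTMClassifier(input_size=8, hidden_size=12, num_layers=2, num_classes=5)
x = torch.randn(3, 4, 8)
logits = model(x)
print(logits.shape)
```

If you want regularization between the stacked layers, `nn.LSTM` also accepts a `dropout` argument, which is applied to the outputs of every layer except the last.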