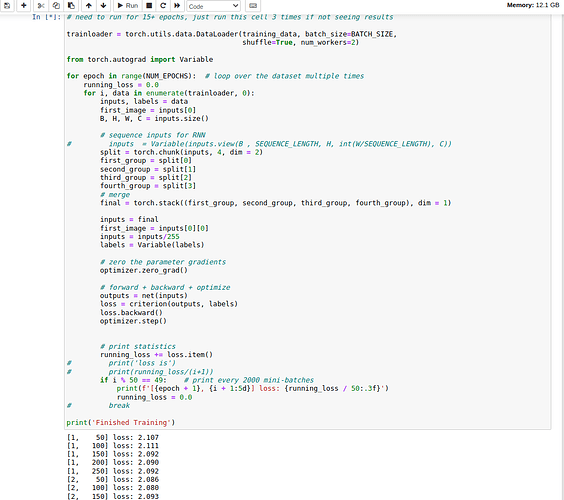

I’m having issues training an RNN on spectrograms of 1-second audio clips. The spectrograms have shape 271 * 781 * 4, where 781 is the width (i.e. the time axis). I trimmed this to 271 * 780 * 4 so that each sample divides evenly into groups of 4 to feed into the RNN. Currently, I can’t get the loss to go down. I’ve already debugged one issue where my data wasn’t being split into groups of 4 properly (it wasn’t being divided along the time axis). I’ve fixed that, so I’m certain that is no longer the issue.
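Since the earlier bug was about splitting along the wrong axis, here is a minimal NumPy sketch of one way to do the split, for comparison. It assumes the axes are (frequency, time, channel) and that "groups of 4" means 4 consecutive time columns per RNN step; `to_time_steps` and `chunk` are hypothetical names. The key point is moving the time axis to the front before reshaping, because a plain `reshape` groups values in memory order, not by time.

```python
import numpy as np

def to_time_steps(spec: np.ndarray, chunk: int = 4) -> np.ndarray:
    """Split a (freq, time, channel) spectrogram into RNN steps along time.

    Assumption: axis 1 is time. Returns shape (time // chunk, freq * chunk * chan),
    one row (feature vector) per RNN time step.
    """
    freq, time, chan = spec.shape
    # Trim trailing columns so time divides evenly (781 -> 780 for chunk=4).
    time = (time // chunk) * chunk
    spec = spec[:, :time, :]
    # Move time to the front so reshape groups consecutive time columns.
    by_time = spec.transpose(1, 0, 2)                 # (time, freq, chan)
    steps = by_time.reshape(time // chunk, chunk, freq, chan)
    # Flatten each step's (chunk, freq, chan) block into one feature vector.
    return steps.reshape(time // chunk, -1)

rng = np.random.default_rng(0)
spec = rng.random((271, 781, 4)).astype(np.float32)
seq = to_time_steps(spec)
print(seq.shape)  # (195, 4336)

# Step 1 should contain exactly time columns 4..7.
expected = spec[:, 4:8, :].transpose(1, 0, 2).reshape(-1)
print(np.array_equal(seq[1], expected))  # True

# A naive reshape without the transpose fills each step with frequency rows instead.
naive = spec[:, :780, :].reshape(195, -1)
print(np.array_equal(naive[1], expected))  # False
```

If "groups of 4" instead means 4 steps of 195 columns each, `to_time_steps(spec, chunk=195)` gives shape `(4, 211380)`.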

I suspect there are issues with my RNN architecture and my forward pass, but I’m not sure how to track them down. I’ve googled around without getting very far (my practical RNN knowledge isn’t great, but I understand the idea of feeding the hidden state from the previous time step into the next step, together with the next ‘section’ of the data).
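The idea described above is the vanilla RNN recurrence h_t = tanh(W_x x_t + W_h h_{t-1} + b). Below is a minimal NumPy sketch of one forward pass with a classifier on the final hidden state, useful for sanity-checking shapes against a real forward. The weight names, hidden size of 128, 10 output classes and the 195-step, 4336-feature input (271 * 4 * 4, assuming 4 time columns per step) are all hypothetical. For reference, PyTorch's `nn.RNN(input_size, hidden_size, batch_first=True)` computes the same tanh recurrence (with separate input and hidden biases) and expects input shaped `(batch, seq_len, input_size)`.

```python
import numpy as np

def rnn_forward(x, W_x, W_h, b, W_out, b_out):
    """Run a vanilla (Elman) RNN over x of shape (seq_len, input_size).

    Returns (logits from the final hidden state, stacked hidden states).
    """
    seq_len = x.shape[0]
    hidden_size = W_h.shape[0]
    h = np.zeros(hidden_size)                  # initial hidden state h_0
    states = []
    for t in range(seq_len):
        # New state depends on the current input AND the previous state.
        h = np.tanh(W_x @ x[t] + W_h @ h + b)
        states.append(h)
    logits = W_out @ h + b_out                 # read out from the last step only
    return logits, np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_size, hidden_size, n_classes = 195, 4336, 128, 10
x = rng.standard_normal((seq_len, input_size)).astype(np.float32)
# Scale weights by 1/sqrt(fan_in) so tanh is not saturated (saturated units have near-zero gradient).
W_x = rng.standard_normal((hidden_size, input_size)) / np.sqrt(input_size)
W_h = rng.standard_normal((hidden_size, hidden_size)) / np.sqrt(hidden_size)
b = np.zeros(hidden_size)
W_out = rng.standard_normal((n_classes, hidden_size)) / np.sqrt(hidden_size)
b_out = np.zeros(n_classes)

logits, states = rnn_forward(x, W_x, W_h, b, W_out, b_out)
print(logits.shape, states.shape)  # (10,) (195, 128)
```

One thing worth checking in a real forward is that the hidden state is actually carried from step to step, as `h` is here; resetting it to zeros for every chunk, or passing the whole spectrogram as a single step, removes the recurrence entirely.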

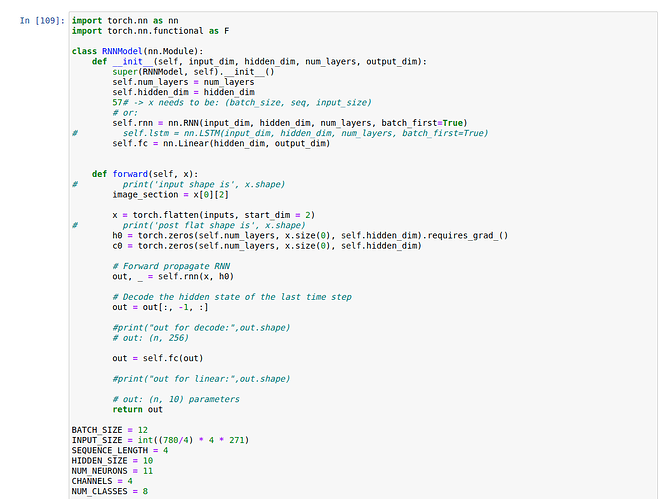

Here is my model architecture:

I just made this account, so I can only upload one image; I’ll try to post the rest of the code in the comments.