Hi, I am trying to train an RNN on 1 million sentences (actually genomic reads), each 100 characters long.

I want to use a BPTT length of 3; that is, I will break each sentence into overlapping subsequences of length 3 with a stride of 1.
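Here is a minimal sketch of that windowing in plain Python. It assumes each read is a string and that the target for each window is the same window shifted one character to the right, as in the target tensor further down. `make_windows` is a hypothetical helper name. Under this convention, a 100-character read yields 97 windows, because the last window needs one extra character for its target.

```python
def make_windows(read, bptt=3, stride=1):
    """Split one read into overlapping (input, target) windows.

    The target for each window is the same window shifted one character
    to the right, so the last window must stop one character early.
    """
    pairs = []
    for start in range(0, len(read) - bptt, stride):
        inp = read[start:start + bptt]
        tgt = read[start + 1:start + bptt + 1]
        pairs.append((inp, tgt))
    return pairs

pairs = make_windows("ACGTAC")
print(pairs)  # [('ACG', 'CGT'), ('CGT', 'GTA'), ('GTA', 'TAC')]
print(len(make_windows("A" * 100)))  # 97
```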

Let's say I use a batch size of 5. That means each batch involves 5 independent forward passes (please correct me if I am wrong).
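To illustrate what "independent" means here, consider a toy sketch in plain Python (not PyTorch). It uses a scalar tanh RNN with made-up weights `w_x` and `w_h` and runs it over a batch of 5 sequences, keeping one hidden state per sequence. Running the batch together gives exactly the same final hidden states as running each sequence on its own. In this toy model, the sequences in a batch share the weights but not the hidden state.

```python
import math

def rnn_step(x, h, w_x=0.5, w_h=0.8):
    # One step of a toy scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1}).
    # The weights are made-up values for illustration only.
    return math.tanh(w_x * x + w_h * h)

def run_batch(batch):
    """Run the toy RNN over a batch of equal-length sequences.

    Each sequence keeps its own hidden state; only the weights are shared.
    Returns the final hidden state of every sequence.
    """
    hidden = [0.0] * len(batch)  # one initial hidden state per sequence
    for t in range(len(batch[0])):
        hidden = [rnn_step(seq[t], h) for seq, h in zip(batch, hidden)]
    return hidden

# Five length-3 sequences (bptt = 3), batch size 5.
batch = [[1, 0, 1], [0, 1, 1], [1, 1, 0], [0, 0, 1], [1, 0, 0]]

batched = run_batch(batch)
one_by_one = [run_batch([seq])[0] for seq in batch]
print(batched == one_by_one)  # True
```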

I want to know whether what I am doing is correct:

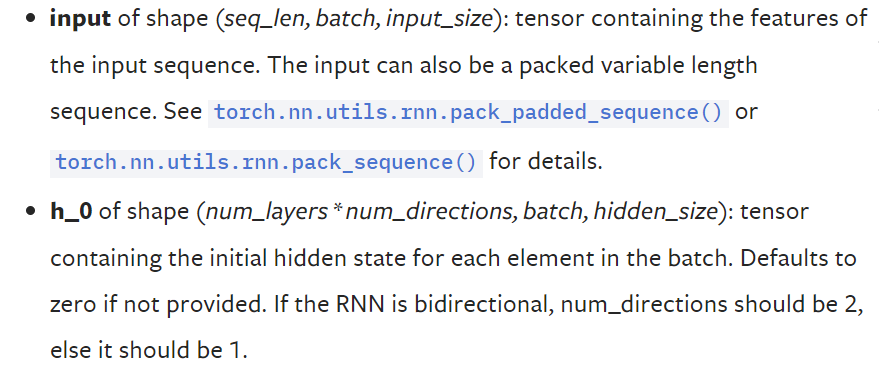

Following this, here are my input and hidden shapes:

My input tensor looks like this (one-hot encoding not shown):

[a1 b1 c1 d1 e1

a2 b2 c2 d2 e2

a3 b3 c3 d3 e3]
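The following sketch shows how five length-3 windows end up in this time-major layout, with one row per time step and one column per sequence. This layout matches the `(seq_len, batch, input_size)` input shape that `torch.nn.RNN` expects by default (`batch_first=False`). The windows are placeholder strings named after the letters above, `to_time_major` is a hypothetical helper, and the one-hot dimension is left out.

```python
def to_time_major(windows):
    """Stack equal-length windows so that row t holds time step t of every window."""
    return [list(step) for step in zip(*windows)]

# Five placeholder windows a..e, each of length 3 (bptt = 3).
windows = [[f"{name}{t}" for t in range(1, 4)] for name in "abcde"]

inputs = to_time_major(windows)
for row in inputs:
    print(" ".join(row))
# a1 b1 c1 d1 e1
# a2 b2 c2 d2 e2
# a3 b3 c3 d3 e3
```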

So, during a forward pass, is the hidden state carried forward from a1 to a2 to a3? How does PyTorch handle this internally?
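Conceptually, the recurrence runs down the time (row) dimension. For column a, the hidden state goes h0, then h1 after a1, h2 after a2, and h3 after a3. Every other column follows the same pattern with no mixing between columns. For the default tanh nonlinearity, the PyTorch `nn.RNN` docs give the per-step update as h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh).

The plain-Python sketch below implements that loop with scalar inputs standing in for one-hot vectors. The weights are made up and the biases are omitted. It collects the hidden state at every step, which corresponds to the `output` returned by a single-layer `nn.RNN`, and returns the last step separately, which corresponds to `h_n`.

```python
import math

def unroll(inputs, w_ih=0.5, w_hh=0.8):
    """Unroll a toy scalar tanh RNN over a time-major batch.

    inputs[t][j] is time step t of sequence j. Returns (outputs, h_n), where
    outputs[t][j] is the hidden state of sequence j after step t and h_n is
    the last row. Weights are made up; biases are omitted for brevity.
    """
    h = [0.0] * len(inputs[0])                  # h0, one per sequence (column)
    outputs = []
    for x_t in inputs:                          # walk down the rows: t = 1, 2, 3
        h = [math.tanh(w_ih * x + w_hh * h_prev) for x, h_prev in zip(x_t, h)]
        outputs.append(h)                       # hidden state after this step
    return outputs, h

# 3 time steps (rows) x 5 sequences (columns a..e).
inputs = [
    [1.0, 0.0, 1.0, 0.0, 1.0],   # a1 b1 c1 d1 e1
    [0.0, 1.0, 1.0, 0.0, 0.0],   # a2 b2 c2 d2 e2
    [1.0, 1.0, 0.0, 1.0, 0.0],   # a3 b3 c3 d3 e3
]
outputs, h_n = unroll(inputs)

# Column a by hand: each step only sees a_t and the previous hidden state of column a.
h1 = math.tanh(0.5 * 1.0 + 0.8 * 0.0)
h2 = math.tanh(0.5 * 0.0 + 0.8 * h1)
h3 = math.tanh(0.5 * 1.0 + 0.8 * h2)
print(outputs[2][0] == h3, h_n == outputs[-1])  # True True
```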

The corresponding target tensor (flattened before computing the loss, of course) looks like this:

[a2 b2 c2 d2 e2

a3 b3 c3 d3 e3

a4 b4 c4 d4 e4]
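The sketch below builds this target matrix by taking, for each time step, the next character of every sequence. It then flattens the matrix row by row, which is the order `view(-1)` gives for a contiguous time-major tensor. The placeholder sequences and the `shift_targets` helper are hypothetical. Each sequence carries one extra step so that the last target exists.

```python
def shift_targets(sequences, bptt=3):
    """For each sequence, the target at step t is the character at step t + 1."""
    # Row t of the (time-major) target matrix holds step t + 1 of every sequence.
    return [[seq[t + 1] for seq in sequences] for t in range(bptt)]

# Placeholder sequences a..e, each with one extra step so the last target exists.
sequences = [[f"{name}{t}" for t in range(1, 5)] for name in "abcde"]

targets = shift_targets(sequences)
flat_targets = [tok for row in targets for tok in row]   # row-major flatten
print(flat_targets[:5])    # ['a2', 'b2', 'c2', 'd2', 'e2']
print(len(flat_targets))   # 15
```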

The forward pass produces 15 loss values. As far as I understand, we should accumulate the losses for a1 to a2, a2 to a3, and a3 to a4.
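Assume the time-major tensors are flattened row by row, with the time step as the outer index and the sequence as the inner index. Then each of the 15 losses maps back to one position: flat index `t * batch_size + j` belongs to time step `t` of sequence `j`. The three losses for a1 to a2, a2 to a3, and a3 to a4 therefore sit at indices 0, 5, and 10.

The sketch below shows this grouping with made-up loss values, numbered 10*t + j so the layout is easy to see. `losses_per_sequence` is a hypothetical helper.

```python
def losses_per_sequence(flat_losses, batch_size):
    """Group a flat list of per-position losses by sequence (column).

    Assumes flat index i = t * batch_size + j, i.e. time step t of sequence j.
    """
    return [flat_losses[j::batch_size] for j in range(batch_size)]

batch_size = 5
# Made-up per-position losses, numbered 10 * t + j to expose the layout.
flat_losses = [10 * t + j for t in range(3) for j in range(batch_size)]

per_seq = losses_per_sequence(flat_losses, batch_size)
print(per_seq[0])  # [0, 10, 20]  -> a1->a2, a2->a3, a3->a4
print(per_seq[1])  # [1, 11, 21]  -> b1->b2, b2->b3, b3->b4
```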

So, instead of computing the loss from output and target tensors of size 15, should I compute the loss over the 3 time steps of each sequence, add those up, and call `loss.backward()` for all 5 inputs?
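One point that may help with this question is plain arithmetic. If the per-position losses are summed, adding up the 3 losses of each sequence and then adding those 5 sums gives the same total as summing all 15 values at once. A single `backward()` call on that total would therefore produce the same gradient either way. With a mean over all 15 positions (the default `reduction='mean'` of `nn.CrossEntropyLoss`), the total is simply scaled by 1/15.

The plain-Python check below uses made-up loss values. They are small integers so the arithmetic is exact, and they follow the same flat layout `t * batch_size + j` as above.

```python
batch_size, bptt = 5, 3
# Made-up per-position losses laid out as flat index t * batch_size + j.
flat_losses = [t + 2 * j for t in range(bptt) for j in range(batch_size)]

# Option 1: one sum over all 15 positions.
total_all = sum(flat_losses)

# Option 2: sum the 3 losses of each sequence, then add the 5 per-sequence sums.
per_sequence = [sum(flat_losses[j::batch_size]) for j in range(batch_size)]
total_grouped = sum(per_sequence)

print(total_all == total_grouped)    # True
print(total_all / len(flat_losses))  # 5.0, the 'mean' version of the same total
```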