Hello everyone,

Recently my lab received two new RTX 3090 GPUs, and I decided to try them out with a ResNet-50 model I’ve been using lately. Curiously, I found that VRAM usage went up by roughly 50% compared to when I used two RTX 2080 Ti cards connected with a Titan RTX NVLink bridge.

The project setup: my dataset consists of 640×640 images, the training batch size is 20 and the testing batch size is 120. I’m using the standard torchvision ResNet-50 model wrapped in `nn.DataParallel` (I haven’t set up `DistributedDataParallel` yet).

I’m also setting `torch.backends.cudnn.benchmark = True`, and the optimizer is the classic `optim.SGD`.

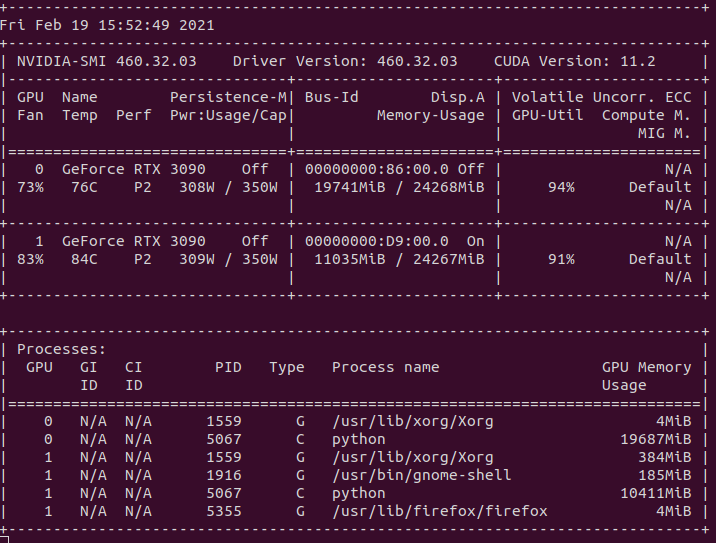

When I was using two rtx 2080Ti, I would use around 10GiB on each graphic card, whereas now with the two rtx3090 I have 19.7 GiB on the first gpu and 11GiB on the second. Here is a screenshot of the nvidia-smi command:
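One way to compare the two machines more precisely is to log per-GPU memory from inside the training script, since `nvidia-smi` reports everything the process holds on each device: blocks cached by PyTorch's caching allocator plus the CUDA context, not just live tensors. A minimal sketch (the helper name `gpu_memory_report` is hypothetical), meant to be called after a few training iterations on each machine:

```python
import torch


def gpu_memory_report():
    """Return per-GPU memory statistics from PyTorch's caching allocator, in GiB."""
    gib = 1024 ** 3
    report = {}
    for idx in range(torch.cuda.device_count()):
        report[idx] = {
            # memory currently occupied by live tensors
            "allocated": torch.cuda.memory_allocated(idx) / gib,
            # peak tensor memory since program start (or the last reset)
            "max_allocated": torch.cuda.max_memory_allocated(idx) / gib,
            # memory held by the caching allocator (excludes the CUDA context)
            "reserved": torch.cuda.memory_reserved(idx) / gib,
        }
    return report


if __name__ == "__main__":
    for idx, stats in gpu_memory_report().items():
        print(f"cuda:{idx} " + ", ".join(f"{k}={v:.2f} GiB" for k, v in stats.items()))
```

`allocated` reflects tensors actually in use, while `reserved` also counts cached blocks PyTorch keeps for reuse, so a large gap between the two points at caching rather than at the model itself.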

Why is VRAM usage so much higher with the RTX 3090s?

Thanks in advance