Hi there, I have a new RTX 4090 that works fine for everything else, but with PyTorch it is as slow as my old GTX 1070. That is weird, because it should be many times faster, yet both cards run the same test script in more or less the same time. Also, the 4090 is in a clean new machine.

I have tried different CUDA / PyTorch versions. Nothing speeds it up.

Any tips on how I can get the 4090 to work with PyTorch?

It is on Windows.

Did you profile your workload to check where the bottleneck is? E.g. are both GPUs running into a data loading bottleneck and the faster compute won’t help as it wasn’t slowing down your old system?

I am using a small dataset to run the test: 70,000 rows with 7 feature columns. The loss and the predictions / gradients show it is iterating as expected on both machines, but the speed is weirdly the same, or even faster, on the old machine.

Not sure what other workload check I can do. On the surface it looks like an installation issue, although I installed the same PyTorch in Visual Studio on both machines. I also installed CUDA directly from NVIDIA, but I think that was not even required.

Why do you think it’s an installation issue and did you profile the code using e.g. Nsight Systems?

I wouldn’t speculate but just check the timelines to see where the bottleneck is.
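Before reaching for a full profiler, a rough split of each iteration into "waiting for data" and "compute" can already hint at where the time goes. The sketch below is only an illustration, not something posted in this thread: `time_loop`, `step_fn` and `sync` are hypothetical names. On a GPU, `sync` would typically be `torch.cuda.synchronize`, because CUDA kernels are launched asynchronously and a host-side timer would otherwise stop too early.

```python
import time
from typing import Callable, Iterable, Optional, Tuple


def time_loop(
    batches: Iterable,
    step_fn: Callable[[object], None],
    sync: Optional[Callable[[], None]] = None,
) -> Tuple[float, float]:
    """Return (seconds spent fetching batches, seconds spent in step_fn)."""
    data_s = 0.0
    compute_s = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # time spent waiting on the data pipeline
        except StopIteration:
            break
        t1 = time.perf_counter()
        step_fn(batch)  # forward / backward / optimizer step
        if sync is not None:
            sync()  # assumed to block until queued device work is done
        t2 = time.perf_counter()
        data_s += t1 - t0
        compute_s += t2 - t1
    return data_s, compute_s


if __name__ == "__main__":
    def slow_loader(n):
        # Stand-in for a slow data pipeline.
        for i in range(n):
            time.sleep(0.01)
            yield i

    data_s, compute_s = time_loop(slow_loader(5), lambda batch: sum(range(1000)))
    print(f"data: {data_s:.3f}s  compute: {compute_s:.3f}s")
```

If the data time dominates on both machines, a faster GPU cannot shorten the iteration, which would match the symptom described above.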

Thanks. I have never used that one; I will read up on it and try.

The reason I think it is an installation issue is that the hardware difference between the two machines is so big that they cannot possibly have the same speed. The old one is an i5 with a GTX 1070 and the new one is an i9 with an RTX 4090. Any other code or data manipulation is much faster on the new machine. But you are right, maybe GPU speed was never the problem in the first place.

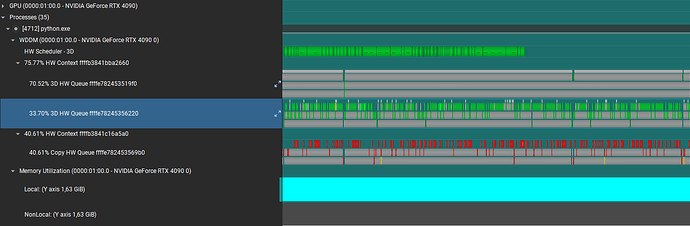

So this is how it looks when I run a report on it. Not having any Nsight experience is not helping… Can you see any issue here, maybe? Thanks for the help.

Sorry to reply to my own thread. I see that in the troubleshooting forum some people suggest installing cuDNN. However, my old machine did not have that installed. Could it be needed for the 4090?

The PyTorch binaries already ship with cuDNN and your locally installed CUDA toolkit (and cuDNN) won’t be used unless you build PyTorch from source.
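One way to see what the installed binary actually ships with is to ask PyTorch itself. This is a minimal sketch; `describe_torch_build` is a hypothetical helper name, while the attributes it reads are standard PyTorch ones. On a CPU-only build the CUDA and cuDNN entries are expected to be `None`.

```python
import torch


def describe_torch_build() -> dict:
    """Collect the versions the installed PyTorch binary was built with."""
    info = {
        "torch": torch.__version__,
        "built_with_cuda": torch.version.cuda,    # None on CPU-only builds
        "cudnn": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device"] = torch.cuda.get_device_name(0)
        # An RTX 4090 (Ada) should report compute capability (8, 9).
        info["capability"] = torch.cuda.get_device_capability(0)
    return info


for key, value in describe_torch_build().items():
    print(f"{key}: {value}")
```

These values describe the libraries bundled with the pip/conda package, not a separately installed CUDA toolkit.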

Could you annotate your script as described here and check the iteration time between your new and old machine?
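The linked instructions are not reproduced in this thread, so the following is only a guess at what such an annotation could look like: it wraps the phases of a toy training step in NVTX ranges so they show up as named spans on the Nsight Systems timeline. The model, the data, and the helper `nvtx_range` are hypothetical; only the 7 input features are taken from the dataset description above.

```python
import contextlib

import torch


def nvtx_range(name: str):
    """NVTX range when CUDA is available, otherwise a no-op context manager."""
    if torch.cuda.is_available():
        return torch.cuda.nvtx.range(name)
    return contextlib.nullcontext()


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = torch.nn.Linear(7, 1).to(device)  # toy model, 7 input features
opt = torch.optim.SGD(model.parameters(), lr=0.01)
x = torch.randn(1024, 7, device=device)   # random stand-in data
y = torch.randn(1024, 1, device=device)

for step in range(3):
    with nvtx_range(f"iter_{step}"):
        with nvtx_range("forward"):
            loss = torch.nn.functional.mse_loss(model(x), y)
        with nvtx_range("backward"):
            opt.zero_grad()
            loss.backward()
        with nvtx_range("optimizer"):
            opt.step()

print(f"final loss: {loss.item():.4f}")
```

Running the script under `nsys profile` on each machine should then make it possible to compare the length of the `iter_*` ranges directly, without having to interpret the rest of the timeline.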

Thanks for taking the time. I tried to implement that code, but not knowing how Nsight works or how to interpret its output makes it too time-consuming. I was thinking maybe someone else has experienced the same performance issue with the RTX 4090. If anyone knows a reason why this hardware does not perform as it should, please post in this thread.

I will try a test with TensorFlow and see if the speed is better.

So, I tried testing with TensorFlow. Apparently TensorFlow stopped supporting Windows…

So it reverted to using the CPU… not a real test.

If anyone has tips on what the root cause could be in the installation or the code, please throw out any ideas.

hey,

Our group is also using a few RTX 4090s, and they are working pretty well so far; no one has had any performance problems. Maybe the dataset is too small to show a performance difference in your case. I would recommend benchmarking with somewhat larger datasets.

hey there!

Thanks, indeed one of the problems was the dataset size.

But I also made a mistake in the setup: I was using the PyCharm built-in Python interpreter, which did not have the PATH variable set correctly. Also, XFORMERS_AVAILABLE was True, and the system / graphics / hardware acceleration setting for the GPU was on.

I installed a clean Python with the PATH set, which already improved the speed. I also set xformers to False and hardware acceleration to off.
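For anyone who wants to rule out a similar setup problem, it can help to print which interpreter the IDE is really running and where a package would be loaded from. This is a small standard-library sketch; `interpreter_report` is a hypothetical helper, and it only reports facts about the environment. It does not show whether the interpreter was the actual cause of the slowdown.

```python
import importlib.util
import os
import sys


def interpreter_report(package: str = "torch") -> dict:
    """Describe the running interpreter and where `package` would be imported from."""
    spec = importlib.util.find_spec(package)  # None if the package is not installed
    return {
        "executable": sys.executable,
        "prefix": sys.prefix,
        "in_virtualenv": sys.prefix != sys.base_prefix,
        "package_location": spec.origin if spec is not None else None,
        "path_entries": os.environ.get("PATH", "").split(os.pathsep),
    }


if __name__ == "__main__":
    report = interpreter_report()
    for key in ("executable", "prefix", "in_virtualenv", "package_location"):
        print(f"{key}: {report[key]}")
    print(f"PATH has {len(report['path_entries'])} entries")
```

Running it once from the IDE and once from a plain terminal shows quickly whether both use the same Python and the same PyTorch installation.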

Then I increased the batch size.

I also increased the sequence length to test.

In the end, with these changes the RTX 4090 is 5x faster than the GTX 1070.
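The effect of batch size can be checked with a rough benchmark along these lines. Everything here is an assumption made for illustration (the small MLP, the layer sizes, the helper name `seconds_per_step`); it is not the script used in this thread. It times a few training steps per batch size and synchronizes the GPU before reading the clock.

```python
import time

import torch


def seconds_per_step(batch_size: int, device: torch.device,
                     features: int = 7, hidden: int = 512, steps: int = 10) -> float:
    """Average wall-clock seconds per training step of a small MLP."""
    model = torch.nn.Sequential(
        torch.nn.Linear(features, hidden), torch.nn.ReLU(),
        torch.nn.Linear(hidden, hidden), torch.nn.ReLU(),
        torch.nn.Linear(hidden, 1),
    ).to(device)
    opt = torch.optim.SGD(model.parameters(), lr=0.01)
    x = torch.randn(batch_size, features, device=device)
    y = torch.randn(batch_size, 1, device=device)

    def step() -> None:
        opt.zero_grad()
        torch.nn.functional.mse_loss(model(x), y).backward()
        opt.step()

    step()  # warm-up, so one-time initialization is not timed
    if device.type == "cuda":
        torch.cuda.synchronize()
    start = time.perf_counter()
    for _ in range(steps):
        step()
    if device.type == "cuda":
        torch.cuda.synchronize()  # wait for queued kernels before stopping the clock
    return (time.perf_counter() - start) / steps


if __name__ == "__main__":
    dev = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    for bs in (64, 1024, 8192):
        t = seconds_per_step(bs, dev)
        print(f"batch {bs:5d}: {t * 1e3:8.3f} ms/step, {bs / t:12.0f} samples/s")
```

With very small batches the time per step tends to be dominated by launch and framework overhead rather than by the GPU itself, so two very different cards can end up looking similar.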

Of course I will not keep the batch and sequence sizes fixed.

But the conclusion: PyTorch does work with the RTX 4090. You just need to not be an idiot like me.

Thanks for the update and I wouldn’t be too harsh on yourself, as interpreting performance profiles is indeed not trivial.

I’m still unsure how the Python interpreter was related to the issue, but in any case it’s good to hear you are seeing the expected speedup now.

It’s indeed odd that your RTX 4090 is performing similarly to the GTX 1070, especially given the expected significant hardware improvements. Here are a few troubleshooting steps to consider:

- Check CUDA Compatibility:
  - Ensure you’ve installed the latest NVIDIA drivers compatible with your GPU.
  - Verify that the CUDA toolkit version you’ve installed is compatible with the PyTorch version you’re using. You can consult the PyTorch website to see the recommended CUDA version for each PyTorch release.
- Utilization Check:
  - Monitor the GPU utilization using NVIDIA’s System Management Interface (`nvidia-smi`) while running your PyTorch script. This will let you see if PyTorch is indeed using the RTX 4090. A low utilization might indicate that the GPU isn’t being fully engaged.
- Memory Bandwidth:
  - The performance might be bottlenecked by memory bandwidth, especially if your model is large. You can also check the memory usage via `nvidia-smi`.
- Tensor Cores:
  - The newer GPUs like the RTX 4090 have specialized Tensor Cores which can dramatically speed up certain operations. Ensure your model and operations are optimized to take advantage of these. For example, using `float16` (or “half precision”) can leverage Tensor Cores more effectively.
- Framework Configuration:
  - Check if PyTorch is running in CUDA mode: `print(torch.cuda.is_available())` should return `True`.
  - Explicitly move your model and data to the CUDA device in your script using `.cuda()` or `.to('cuda:0')`.
- External Factors:
  - Ensure there aren’t other processes that heavily utilize the GPU while you’re running your PyTorch script.
  - On Windows, ensure that the GPU is set to “Maximum Performance” in the NVIDIA Control Panel.
- Test Script:
  - If possible, run a benchmark script or a standard model like ResNet to compare the performance between the two GPUs. This helps in isolating if the problem is with your specific script or the setup in general.
- Software Conflicts:
  - Since you mentioned the RTX 4090 is on a new clean machine, ensure there aren’t any conflicting software or drivers. Sometimes, having multiple versions of CUDA or cuDNN can cause issues.

If after trying these steps you’re still facing the same issue, it could be a more intricate problem that might need deeper investigation.

It doesn’t as was already clarified. Did you read the topic or is this a ChatGPT generated post?

Yeah, I’m sorry. I didn’t read the replies. And it’s true most of my response was generated by GPT-4. I was just trying to help. Sorry, friends.

I’ll read more next time. I just instinctively thought the thread was new due to receiving an email about it just this morning. Sorry again though…

I am having a different kind of issue, but also with an RTX 4090 and PyTorch, on a ProArt Z790-CREATOR WIFI with an Intel(R) Core™ i9-14900KF.

lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 23.10

Release: 23.10

Codename: mantic

pip freeze

filelock==3.13.3

fsspec==2024.3.1

Jinja2==3.1.3

MarkupSafe==2.1.5

mpmath==1.3.0

networkx==3.2.1

numpy==1.26.4

nvidia-cublas-cu12==12.1.3.1

nvidia-cuda-cupti-cu12==12.1.105

nvidia-cuda-nvrtc-cu12==12.1.105

nvidia-cuda-runtime-cu12==12.1.105

nvidia-cudnn-cu12==8.9.2.26

nvidia-cufft-cu12==11.0.2.54

nvidia-curand-cu12==10.3.2.106

nvidia-cusolver-cu12==11.4.5.107

nvidia-cusparse-cu12==12.1.0.106

nvidia-nccl-cu12==2.19.3

nvidia-nvjitlink-cu12==12.4.127

nvidia-nvtx-cu12==12.1.105

pillow==10.3.0

sympy==1.12

torch==2.2.2

torchvision==0.17.2

triton==2.2.0

typing_extensions==4.10.0

NVIDIA driver versions tried: 535 / 545 / 550

Code: the official MNIST example, examples/mnist/main.py at main · pytorch/examples · GitHub

The error I get in /var/log/kern.log:

kernel tried to execute NX-protected page - exploit attempt?

The error happens within a few minutes of starting the training.

I tried reinstalling the whole system from scratch a few times, upgraded the BIOS, and tried three different driver versions. Nothing helped.

Any suggestions on which direction to dig? Maybe @ptrblck? Thank you.