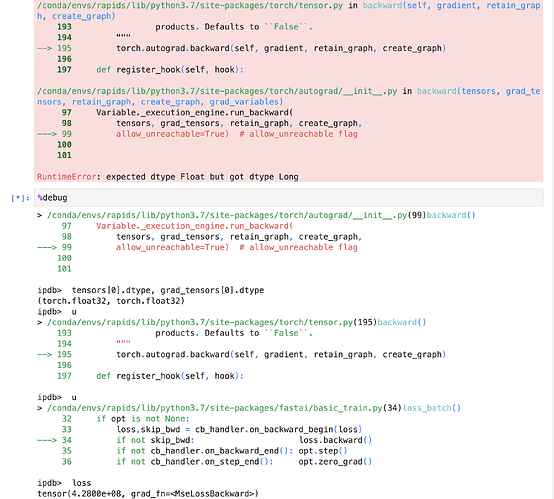

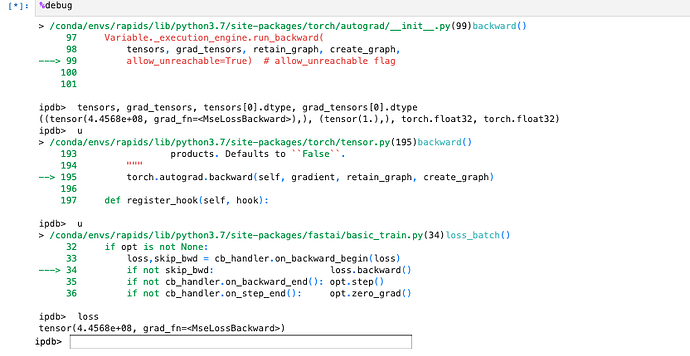

I'm trying to run the `Variable._execution_engine.run_backward` call, and I'm getting an error:

`RuntimeError: expected dtype Float but got dtype Long`

This happens even though both tensors are float32. The picture below shows the output from the debugger along with the error.

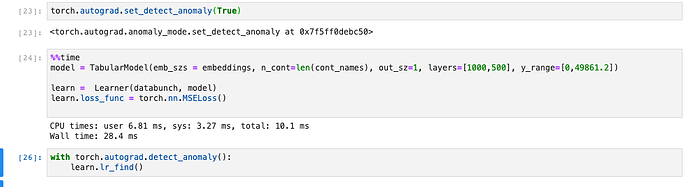

We are using `torch.nn.MSELoss`, and we get a similar error with `torch.nn.SmoothL1Loss`. Any ideas on what the problem is or what our next steps should be?
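One thing worth ruling out: "Long" in the error is PyTorch's int64 dtype, so some tensor reaching the backward pass may be integer-typed even if the prediction is float32. An integer target built with `torch.tensor([...])` from Python ints is a common way this happens. `run_backward` is what `loss.backward()` ends up calling through `torch.autograd.backward`, so the check can sit right before that call. The sketch below assumes the loss is computed directly from a prediction and a target tensor. The helper `checked_mse_backward` and the tensors `pred` and `tgt` are hypothetical names for illustration. This is a diagnostic sketch, not a confirmed fix for this particular nightly build.

```python
import torch
import torch.nn as nn


def checked_mse_backward(prediction: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: print the dtypes going into the loss, cast the
    target to the prediction's dtype if they differ, then run backward."""
    print("prediction:", prediction.dtype, "target:", target.dtype)
    if target.dtype != prediction.dtype:
        # An integer (Long / int64) target is one possible source of a
        # "got dtype Long" error during backward, so align it explicitly.
        target = target.to(prediction.dtype)
    loss = nn.MSELoss()(prediction, target)
    loss.backward()  # dispatches to Variable._execution_engine.run_backward
    return loss


# Hypothetical example: a float32 prediction paired with an integer target.
pred = torch.randn(4, requires_grad=True)
tgt = torch.tensor([1, 0, 2, 3])  # Python ints -> dtype torch.int64 (Long)
loss = checked_mse_backward(pred, tgt)
print(pred.grad.dtype)
```

If both printed dtypes already read `torch.float32` in your code, the Long tensor is probably coming from elsewhere in the graph, and printing the dtype of each intermediate tensor is a reasonable next step.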

We are running PyTorch version `1.4.0.dev20191111`.