I have this code, which runs on the CPU (if I remove the `kCUDA` device placement):

```cpp
torch::NoGradGuard no_grad;
torch::jit::script::Module module;
try {
    // Deserialize the ScriptModule from a file using torch::jit::load().
    module = torch::jit::load(path, torch::kCUDA);
}
catch (const c10::Error& e) {
    std::cerr << "error loading the model\n";
    return -1;
}
std::cout << "ok\n";
torch::Tensor tensor = torch::ones({ 1, 3, 384, 640 }).to(at::kCUDA);
// Execute the model and unpack its output as a tuple.
auto output = module.forward({ tensor }).toTuple();
```

But with `torch::kCUDA`, the forward pass fails inside `get_method` in `module.h`:

```cpp
IValue forward(std::vector<IValue> inputs) {
  return get_method("forward")(std::move(inputs));
}
```
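The `forward` wrapper quoted above does two things on one line: it looks up the method named `"forward"`, then calls it with the inputs. A frame at this line therefore does not by itself say whether the lookup failed or an error was raised while the method body ran. Below is a minimal plain C++ sketch of that pattern, assuming a hypothetical `ToyModule` class with `int` values standing in for `IValue`. It is not the libtorch implementation, only an illustration of why both kinds of failure surface through the same call.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins: Value plays the role of IValue in this sketch.
using Value = int;
using Inputs = std::vector<Value>;
using MethodFn = std::function<Value(Inputs)>;

// Hypothetical ToyModule: NOT libtorch's Module, just the
// "look up a method by name, then call it" pattern.
class ToyModule {
 public:
  void define(const std::string& name, MethodFn fn) {
    methods_[name] = std::move(fn);
  }

  // Lookup step: throws if no method with this name was defined.
  const MethodFn& get_method(const std::string& name) const {
    auto it = methods_.find(name);
    if (it == methods_.end()) {
      throw std::out_of_range("no method named " + name);
    }
    return it->second;
  }

  // Same shape as the quoted Module::forward: lookup, then call.
  // An exception thrown inside the method body also propagates
  // through this single line.
  Value forward(Inputs inputs) const {
    return get_method("forward")(std::move(inputs));
  }

 private:
  std::map<std::string, MethodFn> methods_;
};
```

In this sketch, wrapping `forward` in a `try`/`catch` and printing `e.what()` is what distinguishes the two cases. Note that the posted code only guards `torch::jit::load` with `try`/`catch`, so an exception thrown during the forward pass is not caught there.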

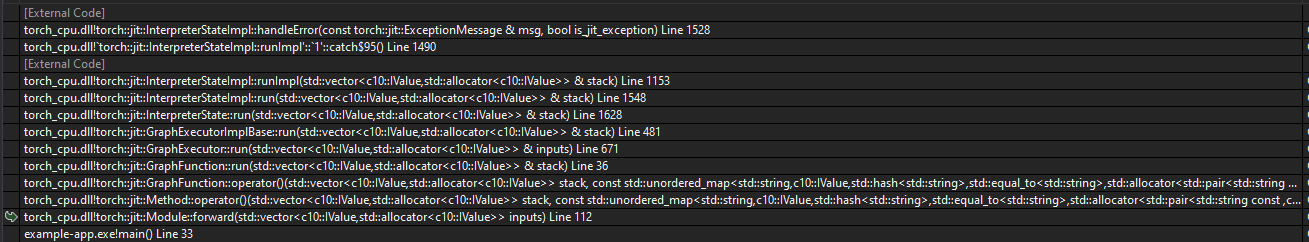

Here is the call stack:

What could be the problem here?

CUDA version 10.1