Hi there, I have a case (in a Q&A system) where I need to run multiple LSTMs in parallel.

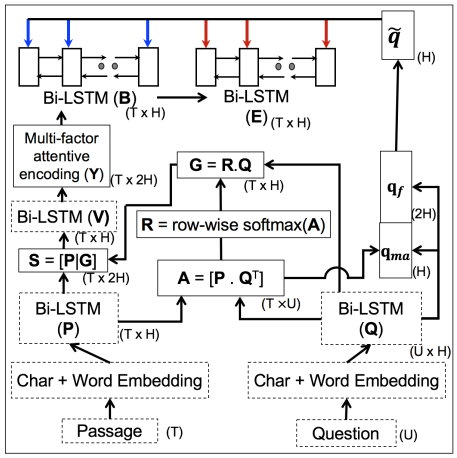

In the architecture above, the embeddings for the passage and the question can each be computed independently. My question is: how can I run both in parallel?

Let's say I have the following code:

```python
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self):
        super().__init__()  # required before registering submodules
        self.passage_bilstm = nn.LSTM(300, 300, 1, bidirectional=True)
        self.question_bilstm = nn.LSTM(300, 300, 1, bidirectional=True)
        ...  # other layers (embedding_passage, embedding_question) omitted

    def forward(self, passage, question):
        emb_passage = self.embedding_passage(passage)
        emb_question = self.embedding_question(question)

        # If I run them as follows, I assume they are executed sequentially, right?
        # If my assumption is correct, is there a way to run them in parallel so that
        # question_bilstm doesn't wait for passage_bilstm?
        passage_out, _ = self.passage_bilstm(emb_passage)
        question_out, _ = self.question_bilstm(emb_question)
        return passage_out, question_out
```

Or is my assumption wrong?
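For reference, here is a minimal sketch of one commonly suggested approach, assuming the model runs on a CUDA GPU: launch each BiLSTM on its own CUDA stream. In eager mode, CUDA kernel launches are asynchronous with respect to Python, but both calls in the snippet above are queued on the same current stream, so the GPU executes them one after the other. `StreamEncoder` is a hypothetical variant that skips the embedding layers and takes already-embedded tensors in `nn.LSTM`'s default `(seq_len, batch, features)` layout; on CPU it simply falls back to sequential calls.

```python
import torch
import torch.nn as nn


class StreamEncoder(nn.Module):
    """Hypothetical encoder: runs two independent BiLSTMs on separate CUDA streams."""

    def __init__(self):
        super().__init__()
        self.passage_bilstm = nn.LSTM(300, 300, 1, bidirectional=True)
        self.question_bilstm = nn.LSTM(300, 300, 1, bidirectional=True)

    def forward(self, emb_passage, emb_question):
        if not emb_passage.is_cuda:
            # Streams are a CUDA concept; on CPU just run the branches in order.
            passage_out, _ = self.passage_bilstm(emb_passage)
            question_out, _ = self.question_bilstm(emb_question)
            return passage_out, question_out

        main = torch.cuda.current_stream()
        # Created per call for brevity; caching them would avoid the overhead.
        passage_stream = torch.cuda.Stream()
        question_stream = torch.cuda.Stream()
        # Side streams must not start before the inputs (produced on main) are ready.
        passage_stream.wait_stream(main)
        question_stream.wait_stream(main)

        with torch.cuda.stream(passage_stream):
            passage_out, _ = self.passage_bilstm(emb_passage)
        with torch.cuda.stream(question_stream):
            question_out, _ = self.question_bilstm(emb_question)

        # Anything queued on main after this point waits for both branches.
        main.wait_stream(passage_stream)
        main.wait_stream(question_stream)
        # Outputs were allocated on the side streams but are consumed on main;
        # record_stream stops the caching allocator from reusing their memory too early.
        passage_out.record_stream(main)
        question_out.record_stream(main)
        return passage_out, question_out


device = "cuda" if torch.cuda.is_available() else "cpu"
encoder = StreamEncoder().to(device)
passage = torch.randn(120, 4, 300, device=device)  # (seq_len, batch, features)
question = torch.randn(20, 4, 300, device=device)
p, q = encoder(passage, question)
print(p.shape, q.shape)  # torch.Size([120, 4, 600]) torch.Size([20, 4, 600])
```

Whether the two branches actually overlap depends on whether a single cuDNN LSTM kernel already saturates the GPU; with large batches there may be little to gain, so it is worth checking with a profiler. On CPU, `torch.jit.fork` / `torch.jit.wait` inside a scripted module are the rough counterpart for running independent branches on the inter-op thread pool.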