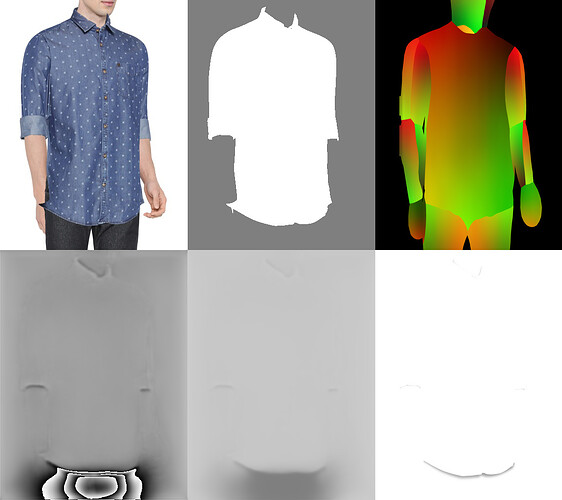

I am using a UNet architecture in PyTorch with 7 input channels: 3 for the cloth image, 1 for the mask of that cloth, and 3 for the DensePose map of the body.

- The top-row images are the inputs, from left to right: the 3-channel cloth image, its 1-channel mask, and the 3-channel body DensePose map.

- The bottom-row rightmost image is the ground truth that the model should predict; it depends on the three inputs above.

- The bottom-row leftmost image is the output obtained by concatenating the three inputs (7 channels) and passing them through the UNet model. This is where concentric circles appear at random spots.

- The bottom-row middle image is the sigmoid of that output. The concentric circles show up as dark spots there, and the loss never converges against the ground truth.
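For reference, here is a minimal sketch of how the 7-channel input is assembled and how the two bottom-row outputs are produced. The tensor sizes, the number of output channels, and the single conv layer standing in for the UNet are assumptions made only so the snippet runs; they are not my actual model.

```python
import torch
import torch.nn as nn

# Assumed shapes: batch of 2, 256x192 images (illustrative sizes only).
cloth = torch.rand(2, 3, 256, 192)      # 3-channel cloth image
mask = torch.rand(2, 1, 256, 192)       # 1-channel cloth mask
densepose = torch.rand(2, 3, 256, 192)  # 3-channel body DensePose map

# Concatenate along the channel dimension to build the 7-channel input.
x = torch.cat([cloth, mask, densepose], dim=1)

# Stand-in for the UNet (hypothetical): one conv layer with 7 input channels.
# The 3 output channels are an assumption about the ground-truth format.
model = nn.Conv2d(in_channels=7, out_channels=3, kernel_size=3, padding=1)

logits = model(x)              # raw output (bottom-left image)
probs = torch.sigmoid(logits)  # sigmoid of the output (bottom-middle image)

print(x.shape, logits.shape)
```

If the loss already applies a sigmoid internally (for example `nn.BCEWithLogitsLoss`), it should receive `logits` rather than `probs`, otherwise the sigmoid is applied twice.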

I tried feeding a blank white image as input, reducing the input to a single-channel white image, changing the ground truth, and training on a smaller dataset, but the concentric circles always appear. My architecture was originally missing weight initialization; I have since added it, but the circles remain. Can somebody suggest why they occur, or which other parameters I could change?
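To make the weight initialization and the blank-white-input check concrete, here is a rough sketch of the kind of thing I mean. The Kaiming scheme, the `init_weights` helper, and the tiny `nn.Sequential` model are illustrative assumptions, not my exact code or architecture.

```python
import torch
import torch.nn as nn

def init_weights(module):
    # Hypothetical helper: Kaiming init for conv layers (an assumed choice).
    if isinstance(module, (nn.Conv2d, nn.ConvTranspose2d)):
        nn.init.kaiming_normal_(module.weight, nonlinearity="relu")
        if module.bias is not None:
            nn.init.zeros_(module.bias)
    elif isinstance(module, nn.BatchNorm2d):
        nn.init.ones_(module.weight)
        nn.init.zeros_(module.bias)

# Tiny stand-in network with 7 input channels (not the real UNet).
model = nn.Sequential(
    nn.Conv2d(7, 16, kernel_size=3, padding=1),
    nn.BatchNorm2d(16),
    nn.ReLU(inplace=True),
    nn.Conv2d(16, 3, kernel_size=3, padding=1),
)
model.apply(init_weights)  # applies init_weights to every submodule

# Sanity check: feed a blank white image and inspect the output range.
white = torch.ones(1, 7, 64, 64)
model.eval()
with torch.no_grad():
    out = model(white)

print(out.shape, out.min().item(), out.max().item())
```

Running the same check on the untrained and the trained model would show whether the circles are already present at initialization or only appear during training.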