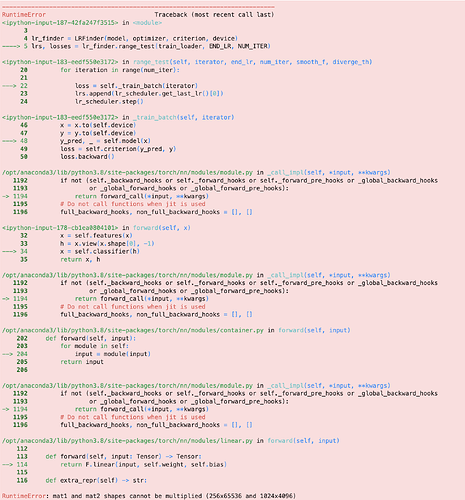

I am trying to use AlexNet to classify spectrogram images generated for 3s audio segments. I am aware that the input image to AlexNet must be 224x224 and have transformed the train and test datasets accordingly. I am encountering the following error: mat1 and mat2 shapes cannot be multiplied (256x65536 and 1024x4096 - see attached image for full error message) and I am not entirely sure why. Can anyone help me figure out where I am going wrong?

Transform data

data_transform_train = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(256),

transforms.ToTensor(),

transforms.Normalize(norm_mean_train, norm_std_train),

])

Create dataloaders

train_size = int(len(train_data_df))

test_size = int(len(test_data_df))

ins_dataset_train = Audio(

df=train_data_df[:train_size],

transform=data_transform_train,

)

ins_dataset_test = Audio(

df=test_data_df[:test_size],

transform=data_transform_test,

)

train_loader = torch.utils.data.DataLoader(

ins_dataset_train,

batch_size=256,

shuffle=True

)

test_loader = torch.utils.data.DataLoader(

ins_dataset_test,

batch_size=256,

shuffle=True

)

AlexNet model

class AlexNet(nn.Module):

def __init__(self, output_dim):

super().__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 64, 3, 2, 1), # in_channels, out_channels, kernel_size, stride, padding

nn.MaxPool2d(2), # kernel_size

nn.ReLU(inplace=True),

nn.Conv2d(64, 192, 3, padding=1),

nn.MaxPool2d(2),

nn.ReLU(inplace=True),

nn.Conv2d(192, 384, 3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(384, 256, 3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(256, 256, 3, padding=1),

nn.MaxPool2d(2),

nn.ReLU(inplace=True)

)

self.classifier = nn.Sequential(

nn.Dropout(0.5),

nn.Linear(256 * 2 * 2, 4096),

nn.ReLU(inplace=True),

nn.Dropout(0.5),

nn.Linear(4096, 4096),

nn.ReLU(inplace=True),

nn.Linear(4096, output_dim),

)

def forward(self, x):

x = self.features(x)

h = x.view(x.shape[0], -1)

x = self.classifier(h)

return x, h

output_dim = 2

model = AlexNet(output_dim)

def count_parameters(model):

return sum(p.numel() for p in model.parameters() if p.requires_grad)

print(f'The model has {count_parameters(model):,} trainable parameters')

def initialize_parameters(m):

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight.data, nonlinearity='relu')

nn.init.constant_(m.bias.data, 0)

elif isinstance(m, nn.Linear):

nn.init.xavier_normal_(m.weight.data, gain=nn.init.calculate_gain('relu'))

nn.init.constant_(m.bias.data, 0)

model.apply(initialize_parameters)

Learning rate finder

class LRFinder:

def __init__(self, model, optimizer, criterion, device):

self.optimizer = optimizer

self.model = model

self.criterion = criterion

self.device = device

torch.save(model.state_dict(), 'init_params.pt')

def range_test(self, iterator, end_lr=10, num_iter=100, smooth_f=0.05, diverge_th=5):

lrs = []

losses = []

best_loss = float('inf')

lr_scheduler = ExponentialLR(self.optimizer, end_lr, num_iter)

iterator = IteratorWrapper(iterator)

for iteration in range(num_iter):

loss = self._train_batch(iterator)

lrs.append(lr_scheduler.get_last_lr()[0])

lr_scheduler.step()

if iteration > 0:

loss = smooth_f * loss + (1 - smooth_f) * losses[-1]

if loss < best_loss:

best_loss = loss

losses.append(loss)

if loss > diverge_th * best_loss:

print("Stopping early, the loss has diverged")

break

model.load_state_dict(torch.load('init_params.pt'))

return lrs, losses

def _train_batch(self, iterator):

self.model.train()

self.optimizer.zero_grad()

x, y = iterator.get_batch()

x = x.to(self.device)

y = y.to(self.device)

y_pred, _ = self.model(x)

loss = self.criterion(y_pred, y)

loss.backward()

self.optimizer.step()

return loss.item()

from torch.optim.lr_scheduler import _LRScheduler

class ExponentialLR(_LRScheduler):

def __init__(self, optimizer, end_lr, num_iter, last_epoch=-1):

self.end_lr = end_lr

self.num_iter = num_iter

super(ExponentialLR, self).__init__(optimizer, last_epoch)

def get_lr(self):

curr_iter = self.last_epoch

r = curr_iter / self.num_iter

return [base_lr * (self.end_lr / base_lr) ** r

for base_lr in self.base_lrs]

class IteratorWrapper:

def __init__(self, iterator):

self.iterator = iterator

self._iterator = iter(iterator)

def __next__(self):

try:

inputs, labels = next(self._iterator)

except StopIteration:

self._iterator = iter(self.iterator)

inputs, labels, *_ = next(self._iterator)

return inputs, labels

def get_batch(self):

return next(self)

start_learning_rate = 1e-7

optimizer = optim.Adam(model.parameters(), lr=start_learning_rate)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

criterion = nn.CrossEntropyLoss()

model = model.to(device)

criterion = criterion.to(device)

END_LR = 10

NUM_ITER = 100

lr_finder = LRFinder(model, optimizer, criterion, device)

lrs, losses = lr_finder.range_test(train_loader, END_LR, NUM_ITER)