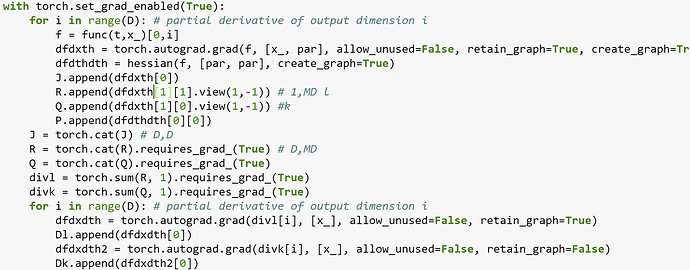

When I run this, I keep getting `RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn` when computing `dfdxdth2` towards the bottom. If I swap the order of `dfdxdth` and `dfdxdth2`, the error appears on `dfdxdth` instead. So I assume my graph is somehow getting messed up during the backward pass of whichever one (`dfdxdth` or `dfdxdth2`) comes first. Also, in the last loop in the screenshot, if I remove either `dfdxdth` or `dfdxdth2`, the loop fails on its second pass. This makes me think that whichever autograd call runs first in the loop is what causes the error.
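For reference, here is a minimal sketch of the pattern being attempted, reduced to scalars. It is not the code in the screenshot: the function `f`, the tensors `x`, `th`, `th2`, and their values are made up for illustration. It shows one way the same error message can arise (differentiating a first derivative that was computed without `create_graph=True`), which may or may not be the cause here.

```python
import torch

# Hypothetical stand-ins for the quantities in the screenshot.
x = torch.tensor(2.0, requires_grad=True)
th = torch.tensor(3.0, requires_grad=True)
th2 = torch.tensor(5.0, requires_grad=True)


def f(x, th, th2):
    # Toy function: f = th * x^2 + th2 * x^3
    return th * x**2 + th2 * x**3


# First derivative df/dx. create_graph=True records the derivative
# computation itself, so dfdx can be differentiated again.
(dfdx,) = torch.autograd.grad(f(x, th, th2), x, create_graph=True)

# Two mixed second derivatives taken from the same dfdx.
# retain_graph=True keeps the graph alive for the second call.
(dfdxdth,) = torch.autograd.grad(dfdx, th, retain_graph=True)
(dfdxdth2,) = torch.autograd.grad(dfdx, th2)

# Same first derivative, but without create_graph: the result is a plain
# tensor with no grad_fn, so differentiating it again raises RuntimeError.
(dfdx_no_graph,) = torch.autograd.grad(f(x, th, th2), x)
try:
    torch.autograd.grad(dfdx_no_graph, th)
    error_message = None
except RuntimeError as err:
    error_message = str(err)
```

In this sketch `dfdxdth` is 2x and `dfdxdth2` is 3x², and the order of the two calls does not matter as long as the first one retains the graph. The order dependence described above is not reproduced by this toy case, so the actual cause may lie elsewhere in the loop.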

Sorry about my messy coding style as well.