I am trying to quantize an image network model, and I ran into an error.

When I call the function below:

```python
import torch

dummy_input = torch.randn(1, 3, 320, 320).cpu()
script_model = torch.jit.trace(net, dummy_input)
```

The `sum` operation below raises this exception:

```python
norm = x.sum(dim=1, keepdim=True).sqrt()
```

```
RuntimeError: Could not run 'aten::empty.memory_format' with arguments from the 'QuantizedCPU' backend. 'aten::empty.memory_format' is only available for these backends: [CPU, CUDA, MkldnnCPU, SparseCPU, SparseCUDA, BackendSelect, Autograd, Profiler, Tracer].
```

Is there any way to bypass this error?


jerryzh168

October 8, 2020, 10:10pm


It’s because `quantized::sum` is not supported. Can you put dequant/quant around the sum op (dequantize its input, then re-quantize its output)?


Hi, how do I put dequant/quant around the sum op? Sorry, I am new.

There is an example here: https://pytorch.org/docs/stable/quantization.html - if you search that page for torch.quantization.QuantStub() and torch.quantization.DeQuantStub(), that should help.
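A minimal eager-mode sketch of that suggestion, assuming a recent PyTorch that has the `torch.ao.quantization` namespace. `NormNet`, its layers, and the 32x32 input size are hypothetical stand-ins for the original network (which used 320x320), and the calibration loop uses random data only as a placeholder. The idea is a `DeQuantStub` right before the `sum`, so the reduction runs on a regular fp32 tensor, and a `QuantStub` after it if later layers expect quantized input.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import DeQuantStub, QuantStub, convert, get_default_qconfig, prepare


class NormNet(nn.Module):
    """Hypothetical toy model: quantized conv, then a channel-wise sum computed in fp32."""

    def __init__(self):
        super().__init__()
        self.quant = QuantStub()          # fp32 -> quantized at the model input
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.relu = nn.ReLU()             # keeps values non-negative so sqrt() is defined
        self.dequant = DeQuantStub()      # quantized -> fp32 right before the unsupported sum
        self.requant = QuantStub()        # fp32 -> quantized again after the sum
        self.dequant_out = DeQuantStub()  # quantized -> fp32 at the model output

    def forward(self, x):
        x = self.quant(x)
        x = self.relu(self.conv(x))
        x = self.dequant(x)
        norm = x.sum(dim=1, keepdim=True).sqrt()  # runs on a regular fp32 tensor
        norm = self.requant(norm)  # a real model would feed this into further quantized layers
        return self.dequant_out(norm)


model = NormNet().eval()
model.qconfig = get_default_qconfig(torch.backends.quantized.engine)
prepared = prepare(model)

# Calibration pass; random data is only a placeholder for real images.
with torch.no_grad():
    for _ in range(4):
        prepared(torch.randn(1, 3, 32, 32))

quantized = convert(prepared)

dummy_input = torch.randn(1, 3, 32, 32)
script_model = torch.jit.trace(quantized, dummy_input)
out = script_model(dummy_input)
print(out.shape)  # torch.Size([1, 1, 32, 32])
```

After `convert`, the stubs become real quantize/dequantize modules, so tracing no longer hits a quantized `sum`.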


escorciav

February 20, 2023, 2:09pm


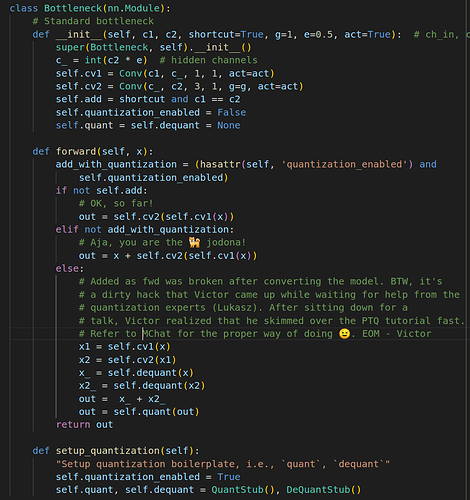

I added the dequant/quant pair, and it works (at least, it stopped complaining).

However, I’m not sure that’s the proper way. I believe the PTQ tutorial (InvertedResidual, sum) shows the “proper” way of doing it. Let me know.

@ptrblck do you know about quantization?

jcaip

February 22, 2023, 4:33pm


Hi Victor,

Can you link the tutorial that you are referring to?

escorciav

February 22, 2023, 5:31pm


Here you go

https://pytorch.org/tutorials/advanced/static_quantization_tutorial.html#post-training-static-quantization
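In that tutorial, the `InvertedResidual` block handles its residual add with `FloatFunctional`, which `convert` swaps for a quantized implementation, so no dequant is needed there. Below is a minimal sketch of that pattern; `ResidualBlock` and the tensor sizes are hypothetical. Note that `FloatFunctional` covers elementwise ops such as `add`, `mul`, `cat`, and `add_relu`; as far as I know it has no reduction like `sum(dim=...)`, so the dequant/quant pair is still the workaround for a channel-wise sum.

```python
import torch
import torch.nn as nn
from torch.ao.nn.quantized import FloatFunctional
from torch.ao.quantization import DeQuantStub, QuantStub, convert, get_default_qconfig, prepare


class ResidualBlock(nn.Module):
    """Hypothetical block mirroring the tutorial's skip connection."""

    def __init__(self, channels=8):
        super().__init__()
        self.quant = QuantStub()
        self.conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.skip_add = FloatFunctional()  # convert() replaces this with QFunctional
        self.dequant = DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        # Use the FloatFunctional method instead of `x + self.conv(x)` so the add gets observed and quantized.
        x = self.skip_add.add(x, self.conv(x))
        return self.dequant(x)


model = ResidualBlock().eval()
model.qconfig = get_default_qconfig(torch.backends.quantized.engine)
prepared = prepare(model)

with torch.no_grad():
    for _ in range(4):
        prepared(torch.randn(1, 8, 16, 16))  # placeholder calibration data

quantized = convert(prepared)
out = quantized(torch.randn(1, 8, 16, 16))
print(type(quantized.skip_add).__name__, out.shape)
```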

Out of curiosity, what kind of model are you trying to quantize?

jcaip

February 22, 2023, 6:28pm


I am the current PyTorch quantization on-call.

`torch.ao.quantization.convert` will add Quant/DeQuant pairs for you, but not all ops support quantized inputs, hence the need to manually add a dequant so that your op runs in fp32.

Also, if you have additional questions it would be better if you created a new question.
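For comparison, in FX graph mode quantization the insertion is automatic: ops with no quantized pattern, like this `sum`, are left in fp32 and `convert_fx` places a dequantize in front of them, so no stubs are written by hand. This is a minimal sketch assuming a PyTorch version that provides `get_default_qconfig_mapping` and `torch.ao.quantization.quantize_fx` (recent releases do); the model is again a hypothetical stand-in and the calibration data is random.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import get_default_qconfig_mapping
from torch.ao.quantization.quantize_fx import convert_fx, prepare_fx


class NormNet(nn.Module):
    """Hypothetical model: no QuantStub/DeQuantStub needed in FX graph mode."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.relu(self.conv(x))
        return x.sum(dim=1, keepdim=True).sqrt()


example_inputs = (torch.randn(1, 3, 32, 32),)
model = NormNet().eval()
qconfig_mapping = get_default_qconfig_mapping(torch.backends.quantized.engine)
prepared = prepare_fx(model, qconfig_mapping, example_inputs)

with torch.no_grad():
    for _ in range(4):
        prepared(torch.randn(1, 3, 32, 32))  # placeholder calibration data

quantized = convert_fx(prepared)
print(quantized.code)  # inspect where convert_fx dequantizes before sum()
out = quantized(*example_inputs)
```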
