I am trying to quantize a model to make inference much faster. The model I am testing with is the pretrained Wide ResNet-101-2 from torchvision (how I load it is shown below). The code runs on CPU. I added a couple of breakpoints that print the model and its size before and after quantization: the model is 510MB before quantization and 128MB after, so the quantization itself appears to be applied as it should. The problem arises later in the code, when the quantized model is called while running the tester.

How I call the model:

model = torchvision.models.wide_resnet101_2(pretrained=True)
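The before/after size check described above can be sketched roughly as follows. This is a minimal sketch, not the code from the screenshot: `model_size_mb` is a hypothetical helper name, and the small `toy` model is a stand-in so the snippet runs without downloading the pretrained weights.

```python
import os
import tempfile

import torch
import torch.nn as nn


def model_size_mb(model: nn.Module) -> float:
    """Hypothetical helper: save the state_dict to a temp file, return its size in MB."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "weights.pt")
        torch.save(model.state_dict(), path)
        return os.path.getsize(path) / 1e6


# Stand-in model (an assumption for illustration, not the wide ResNet from the post).
toy = nn.Sequential(nn.Linear(256, 256), nn.ReLU(), nn.Linear(256, 10))
toy_size = model_size_mb(toy)
print(f"{toy_size:.2f} MB")
```

Calling the same helper on the model before and after quantization gives the two numbers to compare.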

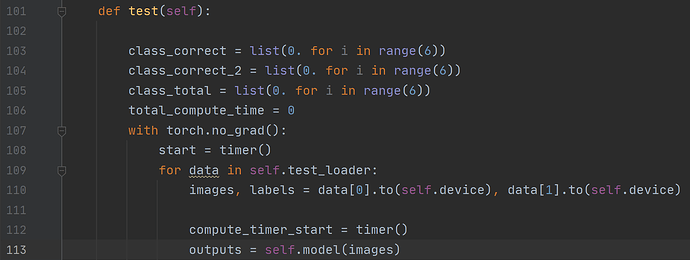

The code errors out at line 113, with this error:

File "C:\Users\NishchintUpadhyaya\anaconda3\envs\SoilMoist\lib\site-packages\torch\nn\intrinsic\quantized\modules\conv_relu.py", line 69, in forward

return torch.ops.quantized.conv2d_relu(

RuntimeError: Could not run 'quantized::conv2d_relu.new' with arguments from the 'CPU' backend. 'quantized::conv2d_relu.new' is only available for these backends: [QuantizedCPU, BackendSelect, Named, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, Tracer, Autocast, Batched, VmapMode].

Any suggestions?
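One thing that may be worth checking, stated as an assumption because the quantization code is only visible in the screenshot: this message typically appears when a regular float tensor (the 'CPU' backend) reaches a quantized op that expects a quantized tensor ('QuantizedCPU'). In eager-mode static quantization, the float-to-quantized boundary is usually marked with `QuantStub` and `DeQuantStub`. The sketch below uses a hypothetical toy model, `TinyNet`, and a hypothetical helper, `quantize_static`, to show the failure without stubs and a working call with them. It is not a drop-in fix for `wide_resnet101_2`, whose residual additions would likely need further changes.

```python
import torch
import torch.nn as nn
import torch.quantization as tq


class TinyNet(nn.Module):
    """Hypothetical toy model; `use_stubs` toggles the quant/dequant boundaries."""

    def __init__(self, use_stubs: bool):
        super().__init__()
        self.quant = tq.QuantStub() if use_stubs else nn.Identity()
        self.conv = nn.Conv2d(3, 8, kernel_size=3)
        self.relu = nn.ReLU()
        self.dequant = tq.DeQuantStub() if use_stubs else nn.Identity()

    def forward(self, x):
        x = self.quant(x)            # float -> quantized (when stubs are used)
        x = self.relu(self.conv(x))  # becomes a fused quantized conv+ReLU
        return self.dequant(x)       # quantized -> float (when stubs are used)


def quantize_static(model: nn.Module) -> nn.Module:
    """Eager-mode post-training static quantization of the toy model."""
    engines = torch.backends.quantized.supported_engines
    engine = "fbgemm" if "fbgemm" in engines else "qnnpack"
    torch.backends.quantized.engine = engine
    model.eval()
    model.qconfig = tq.get_default_qconfig(engine)
    tq.fuse_modules(model, [["conv", "relu"]], inplace=True)
    tq.prepare(model, inplace=True)
    model(torch.randn(4, 3, 32, 32))  # calibration pass on random data
    tq.convert(model, inplace=True)
    return model


x = torch.randn(1, 3, 32, 32)

# Without stubs, the float input hits the quantized conv directly.
try:
    quantize_static(TinyNet(use_stubs=False))(x)
    failed_without_stubs = False
except RuntimeError:
    failed_without_stubs = True

# With stubs, the input is quantized first and the output is returned as float.
out = quantize_static(TinyNet(use_stubs=True))(x)
print(failed_without_stubs, out.shape, out.dtype)
```

If the tester passes a plain float tensor to the converted model, wrapping the model so its input goes through a `QuantStub` first is the usual place to start.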