I’m a student.

I gets errors when my model runs to code ‘loss.backward()’. I display the details of error.

ERROR 1:

File “F:/CODE/BHC/main.py”, line 175, in

p, r, f1 = main(configs, fold_id)

File “F:/CODE/BHC/main.py”, line 73, in main

loss.backward()

File “F:\Common_V\lib\site-packages\torch\tensor.py”, line 198, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File “F:\Common_V\lib\site-packages\torch\autograd_init_.py”, line 100, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA error: an illegal memory access was encountered (launch_kernel at C:/w/b/windows/pytorch/aten/src\ATen/native/cuda/CUDALoops.cuh:217)

(no backtrace available)

ERROR 2:

Traceback (most recent call last):

File “F:/CODE/BHC/main.py”, line 175, in

p, r, f1 = main(configs, fold_id)

File “F:/CODE/BHC/main.py”, line 73, in main

loss.backward()

File “F:\Common_V\lib\site-packages\torch\tensor.py”, line 198, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File “F:\Common_V\lib\site-packages\torch\autograd_init_.py”, line 100, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA error: CUBLAS_STATUS_EXECUTION_FAILED when calling cublasSgemm( handle, opa, opb, m, n, k, &alpha, a, lda, b, ldb, &beta, c, ldc) (gemm at …\aten\src\ATen\cuda\CUDABlas.cpp:165)

(no backtrace available)

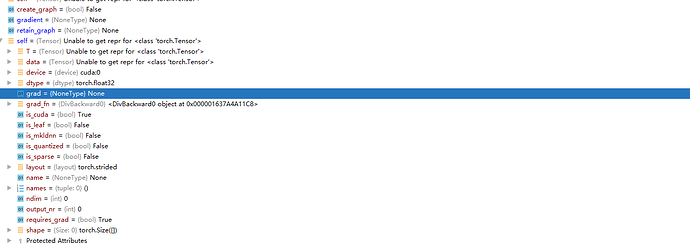

I set breakpoint at the line of ‘loss.backward()’ and find a problem that may led to occurrence of errors,

but i don’t know the reason.

additionally, i try to some ways to fix this bug, such as, set ‘torch.cuda.set_device(0)’ , set ‘torch.backends.cudnn.enabled=False’, or decrease the batch size, the batch size is one.

but all these ways don’t work.

I’m confused and sad, I hope somebody could help me. I will be very appreciated for your reply.