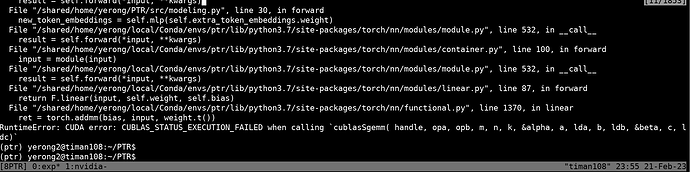

I have the same code that runs on a GTX 1080 but fails on an RTX A5000 with the error **RuntimeError: CUDA error: CUBLAS_STATUS_EXECUTION_FAILED when calling cublasSgemm( handle, opa, opb, m, n, k, &alpha, a, lda, b, ldb, &beta, c, ldc)**

I am using the following ptr.yml:

name: ptr
channels:
  - nvidia/label/cuda-11.5.1
  - defaults
dependencies:
  - _libgcc_mutex=0.1=main
  - _openmp_mutex=5.1=1_gnu
  - ca-certificates=2023.01.10=h06a4308_0
  - certifi=2022.12.7=py37h06a4308_0
  - cuda=11.5.1=0
  - cuda-cccl=11.5.62=0
  - cuda-command-line-tools=11.5.1=0
  - cuda-compiler=11.5.1=0
  - cuda-cudart=11.5.117=h7e867a7_0
  - cuda-cudart-dev=11.5.117=h91b5d7a_0
  - cuda-cuobjdump=11.5.119=h32764b9_0
  - cuda-cupti=11.5.114=h2757d8a_0
  - cuda-cuxxfilt=11.5.119=h3f39129_0
  - cuda-driver-dev=11.5.117=0
  - cuda-gdb=11.5.114=h8765814_0
  - cuda-libraries=11.5.1=0
  - cuda-libraries-dev=11.5.1=0
  - cuda-memcheck=11.5.114=h765d031_0
  - cuda-nsight=11.5.114=0
  - cuda-nsight-compute=11.5.1=0
  - cuda-nvcc=11.5.119=h2e31d95_0
  - cuda-nvdisasm=11.5.119=he465173_0
  - cuda-nvml-dev=11.5.50=h511b398_0
  - cuda-nvprof=11.5.114=hd1b9a7f_0
  - cuda-nvprune=11.5.119=ha53ebc3_0
  - cuda-nvrtc=11.5.119=h411d788_0
  - cuda-nvrtc-dev=11.5.119=h3fe8e16_0
  - cuda-nvtx=11.5.114=ha1eacfd_0
  - cuda-nvvp=11.5.114=h233c720_0
  - cuda-runtime=11.5.1=0
  - cuda-samples=11.5.56=hf1e648b_0
  - cuda-sanitizer-api=11.5.114=h781f4d3_0
  - cuda-toolkit=11.5.1=0
  - cuda-tools=11.5.1=0
  - cuda-visual-tools=11.5.1=0
  - gds-tools=1.1.1.25=0
  - ld_impl_linux-64=2.38=h1181459_1
  - libcublas=11.7.4.6=hd52c9d2_0
  - libcublas-dev=11.7.4.6=h9ea41a3_0
  - libcufft=10.6.0.107=hd5a0538_0
  - libcufft-dev=10.6.0.107=hb86e5fa_0
  - libcufile=1.1.1.25=0
  - libcufile-dev=1.1.1.25=0
  - libcurand=10.2.7.107=h449470a_0
  - libcurand-dev=10.2.7.107=hd5b7b69_0
  - libcusolver=11.3.2.107=hc875929_0
  - libcusolver-dev=11.3.2.107=h78cb71c_0
  - libcusparse=11.7.0.107=hf21abff_0
  - libcusparse-dev=11.7.0.107=h338262b_0
  - libffi=3.4.2=h6a678d5_6
  - libgcc-ng=11.2.0=h1234567_1
  - libgomp=11.2.0=h1234567_1
  - libnpp=11.5.1.107=hb6e5806_0
  - libnpp-dev=11.5.1.107=he6b01ee_0
  - libnvjpeg=11.5.4.107=h31e24ca_0
  - libnvjpeg-dev=11.5.4.107=h6455901_0
  - libstdcxx-ng=11.2.0=h1234567_1
  - ncurses=6.4=h6a678d5_0
  - nsight-compute=2021.3.1.4=0
  - openssl=1.1.1t=h7f8727e_0
  - pip=22.3.1=py37h06a4308_0
  - python=3.7.16=h7a1cb2a_0
  - readline=8.2=h5eee18b_0
  - setuptools=65.6.3=py37h06a4308_0
  - sqlite=3.40.1=h5082296_0
  - tk=8.6.12=h1ccaba5_0
  - wheel=0.38.4=py37h06a4308_0
  - xz=5.2.10=h5eee18b_1
  - zlib=1.2.13=h5eee18b_0
  - pip:
    - charset-normalizer==3.0.1
    - click==8.1.3
    - filelock==3.9.0
    - idna==3.4
    - importlib-metadata==6.0.0
    - joblib==1.2.0
    - numpy==1.18.0
    - packaging==23.0
    - regex==2022.10.31
    - requests==2.28.2
    - sacremoses==0.0.53
    - scikit-learn==0.22.1
    - scipy==1.4.1
    - six==1.16.0
    - tokenizers==0.9.4
    - torch==1.4.0
    - tqdm==4.41.1
    - transformers==4.0.0
    - typing-extensions==4.5.0
    - urllib3==1.26.14
    - zipp==3.14.0

The nvidia-smi output on the A5000 machine:

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.86.01    Driver Version: 515.86.01    CUDA Version: 11.7     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA RTX A5000    Off  | 00000000:01:00.0 Off |                  Off |
| 30%   27C    P0    58W / 230W |      0MiB / 24564MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA RTX A5000    Off  | 00000000:41:00.0 Off |                  Off |
| 30%   26C    P0    62W / 230W |      0MiB / 24564MiB |      0%      Default |

Specifically I am using torch 1.4.0 and cuda-toolkit 11.5.1.
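The pip `torch` wheel reports its own build-time CUDA version through `torch.version.cuda`, and that can differ from the conda `cuda-toolkit` listed in the environment file. A minimal sketch for printing what the Python process actually sees is below. The function name `describe_environment` and the dictionary keys are only illustrative, not part of any library, and the script is a diagnostic aid rather than a fix.

```python
def describe_environment():
    """Collect the PyTorch/CUDA details relevant to a cuBLAS failure.

    The function name and the dictionary keys are hypothetical; only the
    torch.* calls come from PyTorch itself.
    """
    info = {
        "torch": None,
        "built_with_cuda": None,
        "cuda_available": False,
        "devices": [],
    }
    try:
        import torch
    except ImportError:
        # torch is not installed in this interpreter; return the empty report.
        return info

    info["torch"] = torch.__version__
    # CUDA version the torch binary was compiled against (None for CPU-only builds).
    info["built_with_cuda"] = torch.version.cuda
    info["cuda_available"] = torch.cuda.is_available()

    if info["cuda_available"]:
        for index in range(torch.cuda.device_count()):
            major, minor = torch.cuda.get_device_capability(index)
            info["devices"].append(
                {
                    "index": index,
                    "name": torch.cuda.get_device_name(index),
                    "compute_capability": f"{major}.{minor}",
                }
            )
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Running this on both the 1080 machine and the A5000 machine and comparing `built_with_cuda` against each card's `compute_capability` should show whether the two setups differ in anything other than the GPU.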

Does anyone know how to fix this?