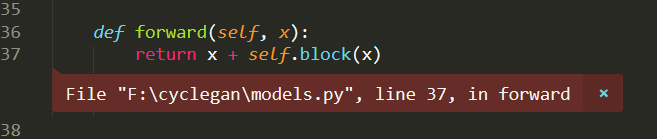

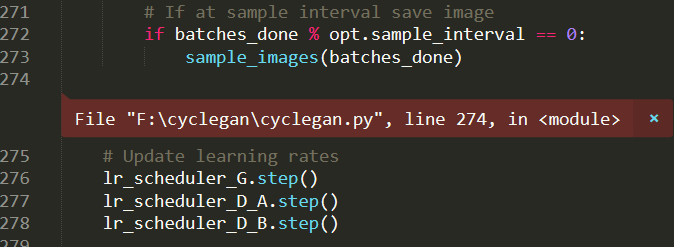

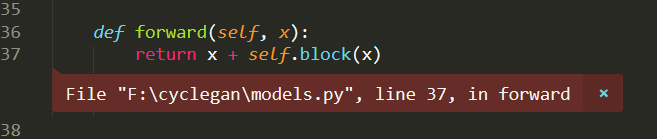

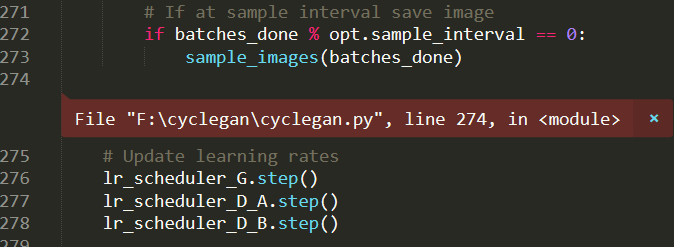

I get the error shown in the screenshots above. My batch size is set to 1. What is the problem, and how can I fix it?

4GB total memory might not be enough to train CycleGAN, but I haven’t verified it.

Could you check whether your code works on Colab?

If I’m not mistaken, they are providing GPUs with more memory.

PS: As always, it’s better to post code instead of pictures.

Yes, they do. Normally they provide about 12 or 13 GB; if you can train with that, there's no problem.

Otherwise, you can increase your RAM there up to 25 GB. There's no option to do that manually, though; you need to run the script below:

    a = []
    while True:
        # Keep appending until the session runs out of RAM and crashes.
        a.append('gaufbsiznaoejwuiaaoke be uJwbdiajajdhziajjdbsians')

Then wait until the session-crash prompt appears. You'll be asked whether you would like to switch to a high-RAM runtime; click yes and there you go.

But my computer has 8GB of RAM. Why is 4GB displayed and used there?

Your GPU apparently has 4GB of memory.

If you give me the product name of your GPU, I can double check it.
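You could also check this yourself. Here is a minimal sketch, assuming PyTorch is installed with CUDA support; `format_gib` and `describe_gpu` are hypothetical helper names, and the function simply returns `None` if no GPU (or no PyTorch) is found:

```python
def format_gib(num_bytes: int) -> str:
    """Render a byte count in GiB with two decimals."""
    return f"{num_bytes / 1024**3:.2f} GiB"


def describe_gpu(index: int = 0):
    """Return (name, total memory) for a CUDA device, or None if unavailable."""
    try:
        import torch  # imported lazily so format_gib works without PyTorch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(index)
    return props.name, format_gib(props.total_memory)


print(describe_gpu())
```

The reported total is the GPU's own memory, which is separate from the system RAM.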

PyTorch is using your GPU memory, not your system RAM. Try reducing the batch size.

Thank you. The batch size is already 1, so there must be another reason.

Slightly reducing the input image size might help.
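To get a rough feel for how much this could save, here is a back-of-the-envelope sketch. It assumes square inputs and that activation memory scales linearly with the pixel count, which is only an approximation for a convolutional model; the 256 starting size and the `relative_activation_memory` name are assumptions for illustration, not taken from your code:

```python
def relative_activation_memory(old_size: int, new_size: int) -> float:
    """Fraction of activation memory left after resizing square inputs.

    Assumes activation memory grows linearly with the number of pixels,
    which is only an approximation for a fully convolutional network.
    """
    return (new_size / old_size) ** 2


# Compare a few candidate sizes against a hypothetical 256x256 baseline.
for size in (256, 224, 192, 128):
    print(size, f"{relative_activation_memory(256, size):.2f}")
```

Weights and optimizer state don't shrink with the image size, so the real saving will be smaller than this estimate.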

The 940MX has a max. memory of 4GB.

Thank you. So can I only run this program in the cloud? Is this error related to `with torch.no_grad():`?

`with torch.no_grad()` will save some memory during evaluation and testing, but you won’t be able to train the model inside it, since no gradients are tracked.
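A minimal sketch of the difference, using a tiny stand-in `nn.Linear` layer rather than the actual CycleGAN model:

```python
import torch
from torch import nn

model = nn.Linear(4, 2)  # stand-in for a real network
x = torch.randn(1, 4)

# Normal forward pass: the graph is recorded so backward() can run.
out_train = model(x)

# Forward pass without gradient tracking: no graph is stored, which saves
# memory, but the output cannot be used to compute gradients.
with torch.no_grad():
    out_eval = model(x)

print(out_train.requires_grad)  # True
print(out_eval.requires_grad)   # False
```

So it is useful for validation and inference, but it won't help with the memory used by the training step itself.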

4GB won’t be enough for a lot of common models, so you would either have to use a cloud runtime (e.g. Colab, Kaggle, or any cloud provider) or buy a bigger device.

Thank you very much!

Can I run the program if I only use the CPU?

That might be possible, but 8GB might also not be enough for a full workload.

If you keep the batch size low, it could fit without using the swap.

The CPU run will be slower than running it on the GPU. However, if you run out of your system RAM and use the swap, you will see an even bigger performance hit.
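If you want to try it, forcing the CPU could look roughly like this. It is a sketch with a small stand-in `nn.Conv2d` layer and a made-up 64x64 input, not your actual model or data:

```python
import torch
from torch import nn

device = torch.device("cpu")  # force CPU even if a GPU is present

# Stand-in model; a real generator would be moved the same way.
model = nn.Conv2d(3, 8, kernel_size=3, padding=1).to(device)
batch = torch.randn(1, 3, 64, 64, device=device)  # batch size 1

out = model(batch)
print(out.device, tuple(out.shape))
```

Both the model and every input tensor have to live on the same device, so make sure nothing in the training script still calls `.cuda()`.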