I was trying to run the extractive summarizer of the BERTSUM program (https://github.com/nlpyang/PreSumm/tree/master/src) in test mode with the following command:

python train.py -task ext -mode test -batch_size 3000 -test_batch_size 500 -bert_data_path C:\Users\hp\Downloads\PreSumm-master\PreSumm-master\bert_data -log_file ../logs/val_abs_bert_cnndm -model_path C:\Users\hp\Downloads\bertext_cnndm_transformer -test_from C:\Users\hp\Downloads\bertext_cnndm_transformer\model_1.pt -sep_optim true -use_interval true -visible_gpus 1 -max_pos 512 -max_length 200 -alpha 0.95 -min_length 50 -result_path ../logs/abs_bert_cnndm

Here is the error log:

[2020-01-13 21:03:01,681 INFO] loading weights file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-pytorch_model.bin from cache at ../temp\aa1ef1aede4482d0dbcd4d52baad8ae300e60902e88fcb0bebdec09afd232066.36ca03ab34a1a5d5fa7bc3d03d55c4fa650fed07220e2eeebc06ce58d0e9a157

driver version : 10020

THCudaCheck FAIL file=..\aten\src\THC\THCGeneral.cpp line=50 error=100 : no CUDA-capable device is detected

Traceback (most recent call last):

File "train.py", line 156, in <module>

test_ext(args, device_id, cp, step)

File "C:\Users\hp\Downloads\PreSumm-master\PreSumm-master\src\train_extractive.py", line 190, in test_ext

model = ExtSummarizer(args, device, checkpoint)

File "C:\Users\hp\Downloads\PreSumm-master\PreSumm-master\src\models\model_builder.py", line 168, in __init__

self.to(device)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 426, in to

return self._apply(convert)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 202, in _apply

module._apply(fn)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 202, in _apply

module._apply(fn)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 202, in _apply

module._apply(fn)

[Previous line repeated 1 more time]

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 224, in _apply

param_applied = fn(param)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 424, in convert

return t.to(device, dtype if t.is_floating_point() else None, non_blocking)

File "C:\Users\hp\Anaconda3\lib\site-packages\torch\cuda\__init__.py", line 194, in _lazy_init

torch._C._cuda_init()

RuntimeError: cuda runtime error (100) : no CUDA-capable device is detected at ..\aten\src\THC\THCGeneral.cpp:50

I am sure that I have a CUDA-enabled GPU: it is an NVIDIA GeForce GTX 950M, which I confirmed against NVIDIA's list of CUDA-enabled GPUs. I have also used this GPU for deep learning projects with CUDA before. Thinking the installation could be the problem, I installed the latest versions of CUDA (10.2) and cuDNN following these instructions (https://www.easy-tensorflow.com/tf-tutorials/install/cuda-cudnn). I also tried adding os.environ['CUDA_VISIBLE_DEVICES']='0' in train.py, as this worked for people facing the same kind of error in help posts online. The error still persists.
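For reference, here is a minimal diagnostic sketch that could be run in the same environment to show what PyTorch can see. The helper name describe_cuda_visibility is hypothetical and is not part of PreSumm. CUDA device indices are zero-based, so on a single-GPU machine the only valid index is 0, and CUDA_VISIBLE_DEVICES only takes effect if it is set before CUDA is initialized. I am also assuming, without having confirmed it, that train.py may forward -visible_gpus into CUDA_VISIBLE_DEVICES. If it does, a value of 1 would hide the only GPU.

```python
import os


def describe_cuda_visibility(env=None):
    """Summarize how CUDA_VISIBLE_DEVICES restricts the GPUs a process can see.

    Hypothetical helper for illustration; `env` defaults to os.environ.
    """
    env = os.environ if env is None else env
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return "CUDA_VISIBLE_DEVICES is unset: all GPUs are visible"
    ids = [v.strip() for v in value.split(",") if v.strip()]
    if not ids:
        return "CUDA_VISIBLE_DEVICES is empty: no GPUs are visible"
    return "CUDA_VISIBLE_DEVICES exposes physical device(s): " + ", ".join(ids)


print(describe_cuda_visibility())

try:
    import torch
except ImportError:
    print("PyTorch is not installed in this environment")
else:
    # torch.version.cuda is None for CPU-only builds of PyTorch.
    print("torch version:", torch.__version__)
    print("built against CUDA:", torch.version.cuda)
    print("cuda available:", torch.cuda.is_available())
    print("device count:", torch.cuda.device_count())
    if torch.cuda.is_available():
        print("device 0:", torch.cuda.get_device_name(0))
```

If this reports that a device is available when run on its own but the training script still fails, the difference is probably in how the script sets the visible devices rather than in the CUDA installation.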

I’d really appreciate it if someone could help me figure this out.

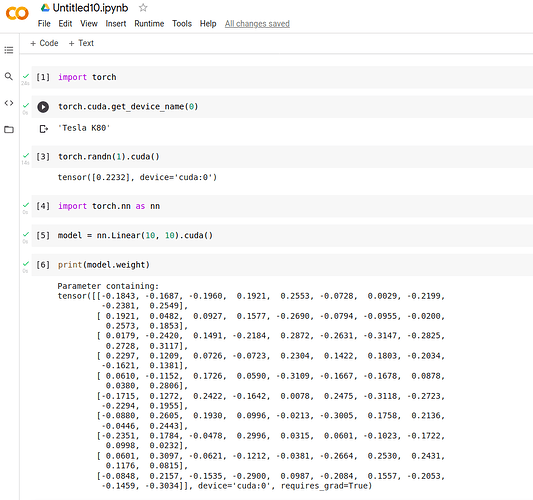

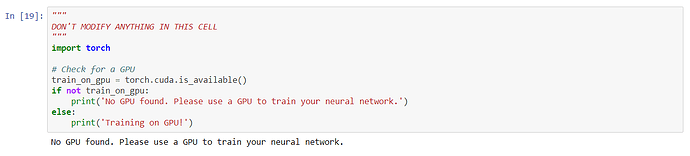

import torch
