I’m trying to use the model output as indices to build a 3D point cloud, because I need to convolve that point cloud in order to calculate the loss.

Code:

```python
for epoch in range(num_epochs):
    optimizer.zero_grad()  # Zero the gradients

    output = model(masked_coords)  # predicted depths for the masked points

    points_3d = gen_3d(200, 200, 35, mask, output)  # build the 3D volume from the predicted depths

    psf_output = conv_3D(points_3d, IPSF)  # convolve the volume with IPSF

    loss = loss_fn(psf_output, target)

    loss.backward()  # Backpropagation

    optimizer.step()  # Update weights
```
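`conv_3D` isn't shown. Assuming it is an ordinary 3D convolution, a minimal sketch with `torch.nn.functional.conv3d` (the name `conv_3D_sketch` and all shapes here are hypothetical) shows that the convolution step on its own passes gradients back to the volume:

```python
import torch
import torch.nn.functional as F

# Hypothetical stand-in for conv_3D: a plain 3D convolution of a volume with a
# kernel. Assumes the kernel has an odd size along every axis.
def conv_3D_sketch(volume, psf):
    # conv3d expects input (batch, channels, D, H, W) and weight
    # (out_channels, in_channels, kD, kH, kW). F.conv3d computes a
    # cross-correlation, so the kernel is flipped to get a true convolution.
    v = volume[None, None]
    k = torch.flip(psf, dims=(0, 1, 2))[None, None]
    pad = tuple(s // 2 for s in psf.shape)  # keeps the output the same size as the input
    return F.conv3d(v, k, padding=pad)[0, 0]

volume = torch.rand(8, 8, 4, requires_grad=True)
psf = torch.rand(3, 3, 3)
out = conv_3D_sketch(volume, psf)
out.sum().backward()
print(out.shape)                # torch.Size([8, 8, 4])
print(volume.grad is not None)  # True
```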

This is my gen_3d function:

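```python
import torch

def gen_3d(x, y, z, mask, depths):
    pc = torch.zeros(x, y, z)         # empty (x, y, z) volume
    _pc = pc.view(-1, z)              # (x*y, z) view sharing storage with pc
    m = mask.flatten()                # (x*y,) mask of the pixels that get a point
    d = (depths * z).int().flatten()  # scale depths to z bins and truncate to integer indices
    _pc[m, d] += 1                    # set one voxel per masked pixel (duplicate indices are counted once)
    return torch.nn.functional.pad(pc, (0, 0, 10, 10, 10, 10))  # pad x and y by 10 on each side, z unpadded
```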
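A small check, with a random tensor standing in for the model output (the sizes are arbitrary), shows where the autograd graph gets cut in `gen_3d`: the `.int()` cast produces an integer tensor that autograd cannot track, so the volume built from it does not require grad:

```python
import torch

z = 35
depths = torch.rand(5, requires_grad=True)  # stand-in for the model output, values in [0, 1)

soft = depths * z            # float tensor, still attached to the autograd graph
hard = (depths * z).int()    # integer cast, as in gen_3d: autograd cannot track it

print(soft.requires_grad)    # True
print(hard.requires_grad)    # False
print(hard.dtype)            # torch.int32

# Indexing with the integer tensor only writes constants into a fresh zero tensor,
# so the resulting volume has no connection to depths at all.
pc = torch.zeros(5, z)
pc[torch.arange(5), hard.long()] += 1
print(pc.requires_grad)      # False
```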

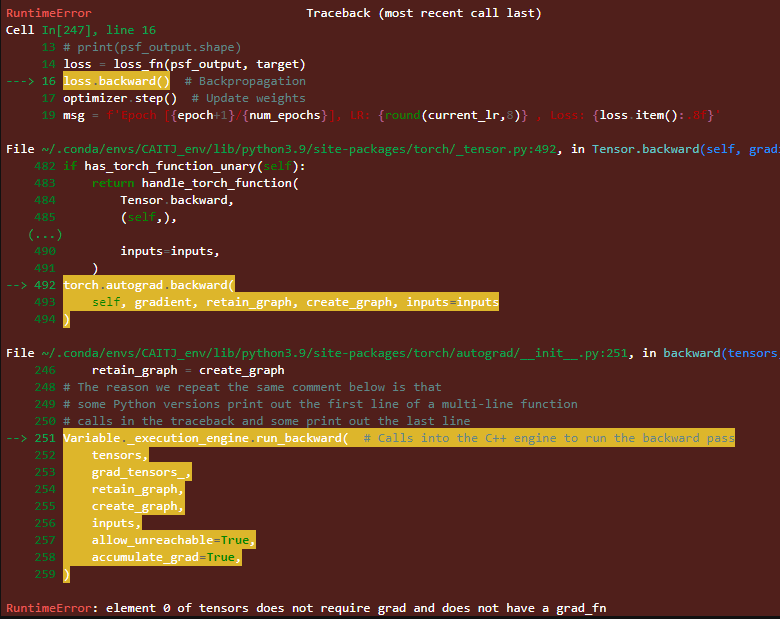

Error:

It seems the process is not differentiable. Is there a way to solve this?
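One common workaround, sketched here as an assumption rather than a known fix for this exact setup, is to replace the hard rounding with linear interpolation ("splatting") along z: each point puts weight `1 - f` on bin `floor(depth * z)` and weight `f` on the next bin, where `f` is the fractional part, so the voxel values become differentiable functions of the depths. `gen_3d_soft` is a hypothetical name; it assumes `mask` is a boolean `(x, y)` tensor and `depths` holds one value in `[0, 1)` per masked pixel (for example a sigmoid output).

```python
import torch
import torch.nn.functional as F

def gen_3d_soft(x, y, z, mask, depths):
    """Hypothetical differentiable variant of gen_3d (linear splatting along z).

    Assumes mask is a boolean (x, y) tensor and depths holds one value in
    [0, 1) per True entry of mask, in the same order as mask.flatten().
    """
    rows = mask.flatten().nonzero(as_tuple=True)[0]    # flat (x*y) indices of masked pixels
    pos = depths.flatten() * z                         # continuous z position, same scaling as gen_3d
    lo = pos.detach().floor().long().clamp(0, z - 1)   # lower bin: an index, needs no gradient
    hi = (lo + 1).clamp(max=z - 1)                     # upper bin, clamped at the last slice
    w_hi = pos - lo.to(pos.dtype)                      # fractional part: differentiable w.r.t. depths
    w_lo = 1 - w_hi

    flat = torch.zeros(x * y * z, dtype=pos.dtype, device=pos.device)
    # Out-of-place index_add sums duplicate indices and is tracked by autograd.
    flat = flat.index_add(0, rows * z + lo, w_lo)
    flat = flat.index_add(0, rows * z + hi, w_hi)
    pc = flat.view(x, y, z)
    return F.pad(pc, (0, 0, 10, 10, 10, 10))           # same padding as gen_3d

# Usage on a tiny example: three masked pixels in a 4 x 4 grid.
x, y, z = 4, 4, 35
mask = torch.zeros(x, y, dtype=torch.bool)
mask[0, 1] = mask[2, 2] = mask[3, 0] = True
depths = torch.tensor([0.1, 0.5, 0.9], requires_grad=True)

out = gen_3d_soft(x, y, z, mask, depths)
loss = (out * torch.arange(z, dtype=out.dtype)).sum()  # a toy loss that depends on the z position
loss.backward()
print(out.shape, depths.grad)
```

The gradient for each depth only comes from its two neighbouring bins, so it is local; whether that gives the optimizer enough signal after the convolution would need to be checked on the real data.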