I am new to PyTorch.

I got this error when I tried to train my model. Can anyone help me, please?

Here is my code:

import numpy as np
import pandas as pd
import os
import torchvision
from torchvision import transforms, datasets, models
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim, cuda  # also provides torch.optim as `optim`
from torch.utils.data import sampler, DataLoader
import matplotlib.pyplot as plt
import seaborn as sn
import tqdm as tqdm
import cv2
from timeit import default_timer as timer
from PIL import Image

image_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(size=256, scale=(0.8, 1.0)),
        transforms.RandomRotation(degrees=15),
        transforms.ColorJitter(),
        transforms.RandomHorizontalFlip(),
        transforms.CenterCrop(size=224),  # ImageNet standard input size
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406],
                             [0.229, 0.224, 0.225])  # ImageNet mean and std
    ]),
    'val': transforms.Compose([
        transforms.Resize(size=256),
        transforms.CenterCrop(size=224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'test': transforms.Compose([
        transforms.Resize(size=256),
        transforms.CenterCrop(size=224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

data = {
    'train': datasets.ImageFolder(root=traindir, transform=image_transforms['train']),
    'val': datasets.ImageFolder(root=validdir, transform=image_transforms['val']),
    'test': datasets.ImageFolder(root=testdir, transform=image_transforms['test'])
}

dataloaders = {
    'train': DataLoader(data['train'], batch_size=128, shuffle=True),
    'val': DataLoader(data['val'], batch_size=128, shuffle=True),
    'test': DataLoader(data['test'], batch_size=128, shuffle=True)
}
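Pulling a single batch out of a loader is a cheap way to see exactly what shape reaches the model. This sketch uses a hypothetical `RandomImages` dataset that generates random 3 x 224 x 224 tensors with 0/1 labels in place of the ImageFolder data, with the same `batch_size=128`:

```python
import torch
from torch.utils.data import DataLoader, Dataset


class RandomImages(Dataset):
    """Hypothetical stand-in for data['train']: 300 random images, labels 0/1."""

    def __len__(self):
        return 300

    def __getitem__(self, idx):
        return torch.randn(3, 224, 224), idx % 2


loader = DataLoader(RandomImages(), batch_size=128, shuffle=True)
batch_images, batch_labels = next(iter(loader))
print(batch_images.shape)  # torch.Size([128, 3, 224, 224])
print(batch_labels.shape)  # torch.Size([128])
print(len(loader))         # 3 batches: 128 + 128 + 44
```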

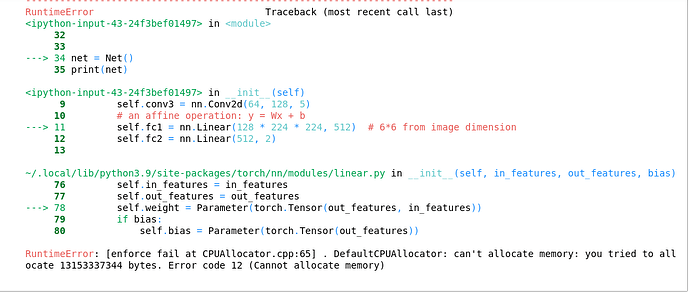

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 32, 5)
        self.conv2 = nn.Conv2d(32, 64, 5)
        self.conv3 = nn.Conv2d(64, 128, 5)
        # Each 5x5 conv (no padding) trims 4 pixels and each 2x2 pool halves the size:
        # 224 -> 220 -> 110 -> 106 -> 53 -> 49 -> 24, so fc1 sees 128 * 24 * 24 features
        self.fc1 = nn.Linear(128 * 24 * 24, 512)
        self.fc2 = nn.Linear(512, 2)

    def forward(self, x):
        # Max pooling over a (2, 2) window
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        x = F.max_pool2d(F.relu(self.conv2(x)), (2, 2))
        x = F.max_pool2d(F.relu(self.conv3(x)), (2, 2))
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        # If training with nn.CrossEntropyLoss, return x (raw logits) instead:
        # that loss already applies log-softmax internally.
        return F.softmax(x, dim=1)

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features
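The `in_features` of `fc1` has to match what the conv/pool stack actually produces. A small pure-Python sketch of the output-size formula given in the `nn.Conv2d` documentation traces a 224 x 224 input through the three blocks; the helper names are hypothetical:

```python
def conv2d_out(size, kernel, stride=1, padding=0, dilation=1):
    """Spatial output size of nn.Conv2d (formula from the PyTorch docs)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def max_pool2d_out(size, kernel=2, stride=None):
    """Spatial output size of F.max_pool2d without padding (ceil_mode=False)."""
    stride = kernel if stride is None else stride
    return (size - kernel) // stride + 1


def flat_features(size=224, channels=(32, 64, 128), kernel=5):
    """Trace a square input through conv(k=5) -> ReLU -> pool(2), three times."""
    for _ in channels:
        size = conv2d_out(size, kernel)
        size = max_pool2d_out(size, 2)
        print(f"after conv+pool: {size} x {size}")
    return channels[-1] * size * size


print(flat_features())  # prints 110, 53, 24 per block, then 73728
```

With 224 x 224 inputs this gives 128 * 24 * 24 = 73728. Sizing `fc1` as 128 * 224 * 224 would assume the spatial size never shrinks, which makes a shape error at `fc1` the likely outcome once a batch reaches it.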

net = Net()

print(net)
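To check the model end to end before a full training run, a minimal sketch can push a random batch through a copy of the network and take one optimizer step. It assumes labels are class indices 0/1 and that `nn.CrossEntropyLoss` is the loss (the training loop is not shown here), so `forward` returns raw logits; the SGD learning rate is a hypothetical choice:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim


class Net(nn.Module):
    """Same layers as the question's Net, with fc1 sized for 224 x 224 inputs."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 32, 5)
        self.conv2 = nn.Conv2d(32, 64, 5)
        self.conv3 = nn.Conv2d(64, 128, 5)
        self.fc1 = nn.Linear(128 * 24 * 24, 512)  # 224 -> 110 -> 53 -> 24
        self.fc2 = nn.Linear(512, 2)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = F.max_pool2d(F.relu(self.conv3(x)), 2)
        x = torch.flatten(x, 1)  # keep the batch dimension, flatten the rest
        x = F.relu(self.fc1(x))
        return self.fc2(x)  # raw logits; CrossEntropyLoss applies log-softmax


net = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.01)  # hypothetical setting

# Random batch standing in for one batch from dataloaders['train'].
images = torch.randn(4, 3, 224, 224)
labels = torch.randint(0, 2, (4,))

optimizer.zero_grad()
logits = net(images)
loss = criterion(logits, labels)
loss.backward()
optimizer.step()

print(logits.shape)  # torch.Size([4, 2])
probs = F.softmax(logits, dim=1)  # apply softmax only when probabilities are needed
```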