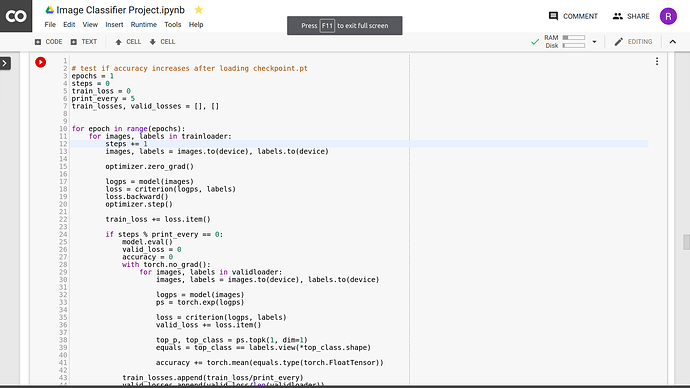

After loading the model from check point . I tried to train the model again. While training it shows error on optimizer.step()

def load_checkpoint(checkpoint_path):

model = models.vgg11(pretrained=True)

for param in model.parameters():

param.requires_grad = False

checkpoint = torch.load(path)

classifier = nn.Sequential(OrderedDict([('fc1', nn.Linear(checkpoint['input_size'], 6272)),

('relu', nn.ReLU()),

('drop1',nn.Dropout(p=0.4)),

('fc2', nn.Linear(6272, 512)),

('relu', nn.ReLU()),

('drop2',nn.Dropout(p=0.2)),

('fc3', nn.Linear(512, checkpoint['out_size'])),

('output', nn.LogSoftmax(dim=1))]))

model.classifier = classifier

model.classifier.load_state_dict(checkpoint['state_dict'])

#model.classifier.fc1.in_features = checkpoint['input_size']

#model.classifier.fc3.out_features = checkpoint['out_size']

class_to_idx = checkpoint['class_to_idx']

optimizer = optim.Adam(model.classifier.parameters(), lr = 0.001)

optimizer.load_state_dict(checkpoint['optim_state'])

return model, optimizer, class_to_idx

model, optimizer, class_to_idx = load_checkpoint(path)

idx_to_class = { v : k for k,v in class_to_idx.items()}

RuntimeError Traceback (most recent call last)

<ipython-input-7-5e78ed847bd0> in <module>()

16 loss = criterion(logps, labels)

17 loss.backward()

---> 18 optimizer.step()

19

20 train_loss += loss.item()

/usr/local/lib/python3.6/dist-packages/torch/optim/adam.py in step(self, closure)

91

92 # Decay the first and second moment running average coefficient

---> 93 exp_avg.mul_(beta1).add_(1 - beta1, grad)

94 exp_avg_sq.mul_(beta2).addcmul_(1 - beta2, grad, grad)

95 if amsgrad:

RuntimeError: expected type torch.FloatTensor but got torch.cuda.FloatTensor```