For the following code block:

```python
import time

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

# Network, train_loader, valid_loader and print_overwrite are defined elsewhere (not shown)

# torch.autograd.set_detect_anomaly(True)

network = Network()
network.cuda()

criterion = nn.MSELoss()
optimizer = optim.Adam(network.parameters(), lr=0.0001)

loss_min = np.inf
num_epochs = 10

start_time = time.time()

for epoch in range(1, num_epochs + 1):
    loss_train = 0
    loss_valid = 0
    running_loss = 0

    network.train()
    # iterate over the loader once per epoch; next(iter(train_loader)) would build
    # a new iterator and return only its first batch on every step
    for step, batch in enumerate(train_loader, start=1):
        images, landmarks = batch['image'], batch['landmarks']
        images = images.cuda()
        landmarks = landmarks.view(landmarks.size(0), -1).cuda()

        predictions = network(images)

        # clear all the gradients before calculating them
        optimizer.zero_grad()

        # find the loss for the current step
        loss_train_step = criterion(predictions, landmarks)
        print("type(loss_train_step) is: ", type(loss_train_step))
        print("loss_train_step.dtype is: ", loss_train_step.dtype)
        # loss_train_step = loss_train_step.to(torch.float32)

        # calculate the gradients
        loss_train_step.backward()

        # update the parameters
        optimizer.step()

        loss_train += loss_train_step.item()
        running_loss = loss_train / step
        print_overwrite(step, len(train_loader), running_loss, 'train')

    network.eval()
    with torch.no_grad():
        # evaluate on the validation set
        for step, batch in enumerate(valid_loader, start=1):
            images, landmarks = batch['image'], batch['landmarks']
            images = images.cuda()
            landmarks = landmarks.view(landmarks.size(0), -1).cuda()

            predictions = network(images)

            # find the loss for the current step
            loss_valid_step = criterion(predictions, landmarks)

            loss_valid += loss_valid_step.item()
            running_loss = loss_valid / step
            print_overwrite(step, len(valid_loader), running_loss, 'valid')

    loss_train /= len(train_loader)
    loss_valid /= len(valid_loader)

    print('\n--------------------------------------------------')
    print('Epoch: {} Train Loss: {:.4f} Valid Loss: {:.4f}'.format(epoch, loss_train, loss_valid))
    print('--------------------------------------------------')

    if loss_valid < loss_min:
        loss_min = loss_valid
        torch.save(network.state_dict(), '/content/face_landmarks.pth')
        print("\nMinimum Validation Loss of {:.4f} at epoch {}/{}".format(loss_min, epoch, num_epochs))
        print('Model Saved\n')

print('Training Complete')
print("Total Elapsed Time : {} s".format(time.time() - start_time))
```
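The loop already prints the loss dtype; a more targeted check is to compare `predictions` and `landmarks` directly, right before `criterion` is called. Below is a minimal sketch; `check_loss_inputs` is a hypothetical debugging helper, not part of PyTorch.

```python
import torch


def check_loss_inputs(predictions: torch.Tensor, targets: torch.Tensor) -> None:
    """Hypothetical debugging helper: fail fast if the loss inputs disagree."""
    if predictions.dtype != targets.dtype:
        raise TypeError(
            f"dtype mismatch: predictions are {predictions.dtype}, "
            f"targets are {targets.dtype}"
        )
    if predictions.shape != targets.shape:
        raise ValueError(
            f"shape mismatch: predictions {tuple(predictions.shape)}, "
            f"targets {tuple(targets.shape)}"
        )


# Example: float32 predictions against float64 targets are rejected up front.
try:
    check_loss_inputs(torch.zeros(2, 136), torch.zeros(2, 136, dtype=torch.float64))
except TypeError as err:
    print(err)  # prints: dtype mismatch: predictions are torch.float32, targets are torch.float64
```

In the loop above it would be called as `check_loss_inputs(predictions, landmarks)` just before `loss_train_step = criterion(predictions, landmarks)`.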

The error is:

```
type(loss_train_step) is: <class 'torch.Tensor'>
loss_train_step.dtype is: torch.float64
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-23-0b0a62a9f8d8> in <module>
     43
     44     # calculate the gradients
---> 45     loss_train_step.backward()
     46
     47     # update the parameters

~/anaconda3/lib/python3.7/site-packages/torch/tensor.py in backward(self, gradient, retain_graph, create_graph)
    183                 products. Defaults to ``False``.
    184         """
--> 185         torch.autograd.backward(self, gradient, retain_graph, create_graph)
    186
    187     def register_hook(self, hook):

~/anaconda3/lib/python3.7/site-packages/torch/autograd/__init__.py in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables)
    125     Variable._execution_engine.run_backward(
    126         tensors, grad_tensors, retain_graph, create_graph,
--> 127         allow_unreachable=True)  # allow_unreachable flag
    128
    129

RuntimeError: Found dtype Double but expected Float
Exception raised from compute_types at /opt/conda/conda-bld/pytorch_1595629403081/work/aten/src/ATen/native/TensorIterator.cpp:183 (most recent call first):
frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x4d (0x7fa4ec61c77d in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libc10.so)
frame #1: at::TensorIterator::compute_types(at::TensorIteratorConfig const&) + 0x259 (0x7fa51f673ca9 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #2: at::TensorIterator::build(at::TensorIteratorConfig&) + 0x6b (0x7fa51f67744b in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #3: at::TensorIterator::TensorIterator(at::TensorIteratorConfig&) + 0xdd (0x7fa51f677abd in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #4: at::native::mse_loss_backward_out(at::Tensor&, at::Tensor const&, at::Tensor const&, at::Tensor const&, long) + 0x18a (0x7fa51f4dc71a in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #5: <unknown function> + 0xd1d610 (0x7fa4ed79f610 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)
frame #6: at::native::mse_loss_backward(at::Tensor const&, at::Tensor const&, at::Tensor const&, long) + 0x90 (0x7fa51f4d9140 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #7: <unknown function> + 0xd1d6b0 (0x7fa4ed79f6b0 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)
frame #8: <unknown function> + 0xd3f936 (0x7fa4ed7c1936 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)
frame #9: at::mse_loss_backward(at::Tensor const&, at::Tensor const&, at::Tensor const&, long) + 0x119 (0x7fa51f99bda9 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #10: <unknown function> + 0x2b5e8c9 (0x7fa5215f48c9 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #11: <unknown function> + 0x7f60d6 (0x7fa51f28c0d6 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #12: at::mse_loss_backward(at::Tensor const&, at::Tensor const&, at::Tensor const&, long) + 0x119 (0x7fa51f99bda9 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #13: torch::autograd::generated::MseLossBackward::apply(std::vector<at::Tensor, std::allocator<at::Tensor> >&&) + 0x1af (0x7fa52153052f in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #14: <unknown function> + 0x30d1017 (0x7fa521b67017 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #15: torch::autograd::Engine::evaluate_function(std::shared_ptr<torch::autograd::GraphTask>&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr<torch::autograd::ReadyQueue> const&) + 0x1400 (0x7fa521b62860 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #16: torch::autograd::Engine::thread_main(std::shared_ptr<torch::autograd::GraphTask> const&) + 0x451 (0x7fa521b63401 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #17: torch::autograd::Engine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x89 (0x7fa521b5b579 in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)
frame #18: torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x4a (0x7fa5407f699a in /home/mona/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_python.so)
frame #19: <unknown function> + 0xc9067 (0x7fa55cb45067 in /home/mona/anaconda3/lib/python3.7/site-packages/zmq/backend/cython/../../../../.././libstdc++.so.6)
frame #20: <unknown function> + 0x9609 (0x7fa55fc5c609 in /lib/x86_64-linux-gnu/libpthread.so.0)
frame #21: clone + 0x43 (0x7fa55fb83103 in /lib/x86_64-linux-gnu/libc.so.6)
```

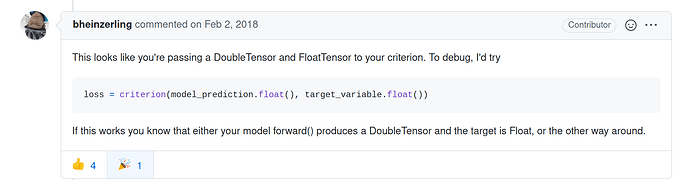
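The printed `loss_train_step.dtype is: torch.float64` is the telling line. The model's output is float32 (its `forward()` calls `x.float()` and its weights are float32 by default), so a float64 loss suggests the `landmarks` target is float64 and `MSELoss` promotes the result to the wider type. A minimal CPU-only sketch of that promotion, using random stand-in tensors shaped like the 136-value landmark vectors (the batch size and values are assumptions):

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()

# float32 predictions, as produced by a model whose forward() calls x.float()
predictions = torch.randn(4, 136, requires_grad=True)
# float64 targets (for example, built from a NumPy float64 array)
landmarks = torch.randn(4, 136, dtype=torch.float64)

loss = criterion(predictions, landmarks)
print(predictions.dtype, landmarks.dtype, loss.dtype)
# torch.float32 torch.float64 torch.float64
```

In the PyTorch build from the traceback, calling `backward()` on such a mixed-dtype loss is what raises `Found dtype Double but expected Float` inside `mse_loss_backward`.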

Even after converting the loss to float32 (the commented-out line in the training loop), I get the same error:

```python
loss_train_step = loss_train_step.to(torch.float32)
```
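Casting the loss likely cannot help, because `.to(torch.float32)` only adds a conversion step on top of the graph that is already built. During `backward()` the gradient flowing through that step is cast back to float64 and still reaches the MSE node, whose inputs were float32 predictions and float64 targets. Casting the target before computing the loss keeps the whole graph in float32. A sketch with the same kind of stand-in tensors (shapes assumed):

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()
predictions = torch.randn(4, 136, requires_grad=True)  # float32
landmarks = torch.randn(4, 136, dtype=torch.float64)   # float64

# Casting the *loss* only adds a conversion node on top of the graph;
# the MSE node underneath still saw a float32 input and a float64 target.
loss_cast = criterion(predictions, landmarks).to(torch.float32)
print(loss_cast.dtype)  # torch.float32, yet the MSE backward still mixes dtypes

# Casting the *target* before the loss keeps the whole graph in float32.
loss = criterion(predictions, landmarks.float())
loss.backward()
print(loss.dtype, predictions.grad.dtype)  # torch.float32 torch.float32
```

In the training and validation loops this would correspond to `landmarks = landmarks.view(landmarks.size(0), -1).float().cuda()`; `landmarks.to(predictions.dtype)` is an alternative that follows whatever dtype the model produces.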

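Also, here is the network definition:

```python
import torch.nn as nn
from torchvision import models

num_classes = 68 * 2  # 68 landmarks, X and Y coordinates flattened


class Network(nn.Module):
    def __init__(self, num_classes=136):
        super().__init__()
        self.model_name = 'resnet18'
        self.model = models.resnet18()
        # replace the final layer to regress num_classes values
        self.model.fc = nn.Linear(self.model.fc.in_features, num_classes)

    def forward(self, x):
        # cast the inputs to float32 to match the model's weights
        x = x.float()
        out = self.model(x)
        return out
```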
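`forward()` casts the images with `x.float()`, but nothing does the same for the targets. The dataset code is not shown, so the following rests on an assumption: if the landmarks start out as NumPy arrays, they are float64 by default and `torch.from_numpy` keeps that dtype. A hypothetical transform (`LandmarksToFloat32` is an illustrative name, not an existing class) that fixes the dtype where each sample is built, so every batch arrives as float32:

```python
import numpy as np
import torch


class LandmarksToFloat32:
    """Hypothetical transform: store a sample's landmarks as a float32 tensor.

    Assumes samples are dicts with 'image' and 'landmarks' keys, matching the
    batch['image'] / batch['landmarks'] access in the training loop.
    """

    def __call__(self, sample):
        out = dict(sample)  # shallow copy; other keys pass through unchanged
        out['landmarks'] = torch.as_tensor(sample['landmarks'], dtype=torch.float32)
        return out


sample = {'image': np.zeros((224, 224, 3)), 'landmarks': np.zeros((68, 2))}
print(torch.from_numpy(sample['landmarks']).dtype)  # torch.float64 (NumPy's default)

converted = LandmarksToFloat32()(sample)
print(converted['landmarks'].dtype, converted['landmarks'].shape)
# torch.float32 torch.Size([68, 2])
```

Assuming the dataset applies a transform pipeline, this would go at its end; `torch.as_tensor` also accepts landmarks that are already tensors.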