Why am I getting this error?

    RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input[16, 64, 256, 256] to have 3 channels, but got 64 channels instead
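
For reference, PyTorch stores a `Conv2d` weight with shape `[out_channels, in_channels / groups, kernel_height, kernel_width]` and expects input shaped `[batch, channels, height, width]`. So `weight[64, 3, 3, 3]` describes a convolution built for 3 input channels, and it is being fed a 64-channel batch of 16 images at 256x256. The plain-Python sketch below reads the two shapes the same way; `conv_channel_check` is a hypothetical helper, not a PyTorch function.

```python
def conv_channel_check(weight_shape, input_shape, groups=1):
    """Return (expected, got) input channels for a Conv2d call.

    weight_shape: (out_channels, in_channels // groups, kH, kW)
    input_shape:  (batch, channels, height, width)
    """
    expected = weight_shape[1] * groups
    got = input_shape[1]
    return expected, got


# Shapes copied from the error message above.
expected, got = conv_channel_check((64, 3, 3, 3), (16, 64, 256, 256))
print(f"expected {expected} channels, got {got}")  # expected 3 channels, got 64
```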

I wrote an implementation of U-Net.

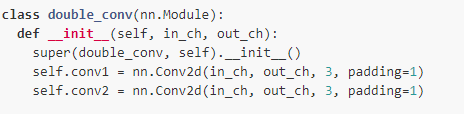

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class double_conv(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(double_conv, self).__init__()
            self.conv1 = nn.Conv2d(in_ch, out_ch, 3, padding=1)
            self.conv2 = nn.Conv2d(in_ch, out_ch, 3, padding=1)

        def forward(self, x):
            # conv2 is applied to the output of conv1
            x = F.relu(self.conv1(x))
            x = F.relu(self.conv2(x))
            return x
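
One way to locate the mismatch is to trace channel counts through `double_conv` by hand. The sketch below is plain Python bookkeeping (the helpers `first_mismatch` and `double_conv_layers` are hypothetical, not PyTorch APIs): it models each `Conv2d` as an `(in_channels, out_channels)` pair and walks the chain. With `conv2` declared as `nn.Conv2d(in_ch, out_ch, ...)`, the second layer is built for `in_ch` channels while it receives the `out_ch` channels produced by `conv1`. For `input_conv(3, 64)` that is exactly a `[64, 3, 3, 3]` weight meeting a 64-channel input. Every `double_conv` in this network has `in_ch != out_ch`, so they all share the problem; `input_conv` simply fails first.

```python
def first_mismatch(layers, in_channels):
    """Walk (in_ch, out_ch) pairs; return (index, declared, received) for the
    first layer whose declared input channels differ from what it receives,
    or None if the chain is consistent."""
    channels = in_channels
    for i, (declared_in, out) in enumerate(layers):
        if declared_in != channels:
            return i, declared_in, channels
        channels = out
    return None


def double_conv_layers(in_ch, out_ch, fixed):
    # Original: both convs declared as (in_ch, out_ch).
    # Fixed: conv2 takes conv1's output, i.e. (out_ch, out_ch).
    second = (out_ch, out_ch) if fixed else (in_ch, out_ch)
    return [(in_ch, out_ch), second]


print(first_mismatch(double_conv_layers(3, 64, fixed=False), 3))  # (1, 3, 64)
print(first_mismatch(double_conv_layers(3, 64, fixed=True), 3))   # None
```

In the PyTorch module, the corresponding change is `self.conv2 = nn.Conv2d(out_ch, out_ch, 3, padding=1)`.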

    class input_conv(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(input_conv, self).__init__()
            self.inp_conv = double_conv(in_ch, out_ch)

        def forward(self, x):
            x = self.inp_conv(x)
            return x

    class up(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(up, self).__init__()
            # doubles height/width and maps in_ch -> out_ch channels
            self.up_conv = nn.ConvTranspose2d(in_ch, out_ch, kernel_size=2, stride=2)
            self.conv = double_conv(in_ch, out_ch)

        def forward(self, x1, x2):
            x1 = self.up_conv(x1)
            # concatenate the skip connection x2 along the channel dimension
            x = torch.cat([x2, x1], dim=1)
            x = self.conv(x)
            return x
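
The `up` block relies on two pieces of arithmetic. The PyTorch `ConvTranspose2d` documentation gives the output height as `(H_in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1`, which is `2 * H_in` for `kernel_size=2, stride=2` with default padding; and `torch.cat(..., dim=1)` adds the channel counts of its inputs. The plain-Python sketch below (hypothetical helper names) checks that, for each decoder stage, the concatenated tensor has exactly the `in_ch` channels that `self.conv` expects, assuming the skip tensor `x2` carries `out_ch` channels as it does in this network.

```python
def conv_transpose_out(h_in, kernel_size, stride=1, padding=0,
                       dilation=1, output_padding=0):
    """Output size formula documented for torch.nn.ConvTranspose2d."""
    return ((h_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


def up_block_channels(out_ch, skip_ch):
    """Channels seen by double_conv inside `up` after upsampling + concat."""
    upsampled = out_ch            # ConvTranspose2d(in_ch, out_ch, ...)
    return skip_ch + upsampled    # torch.cat([x2, x1], dim=1)


# Decoder stages as (in_ch, out_ch); the skip tensor has out_ch channels.
for in_ch, out_ch in [(1024, 512), (512, 256), (256, 128), (128, 64)]:
    assert up_block_channels(out_ch, skip_ch=out_ch) == in_ch

print(conv_transpose_out(16, kernel_size=2, stride=2))  # 32
```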

    class down(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(down, self).__init__()
            self.pool = nn.MaxPool2d(2)  # halves height and width
            self.conv = double_conv(in_ch, out_ch)

        def forward(self, x):
            x = self.pool(x)
            x = self.conv(x)
            return x
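
`nn.MaxPool2d(2)` uses a 2x2 window with stride 2 (the stride defaults to the kernel size), so each `down` block halves the height and width, rounding down, while the 3x3 convolutions with `padding=1` keep the size unchanged. The plain-Python sketch below applies the output-size formula from the PyTorch `MaxPool2d` documentation (default `ceil_mode=False`) through the four `down` stages; `maxpool_out` and `encoder_sizes` are hypothetical helpers.

```python
def maxpool_out(h_in, kernel_size=2, stride=None, padding=0, dilation=1):
    """Output size formula documented for torch.nn.MaxPool2d (ceil_mode=False)."""
    stride = kernel_size if stride is None else stride
    return (h_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def encoder_sizes(h, depth=4):
    """Spatial size after the input block and each of `depth` down blocks."""
    sizes = [h]  # padding=1 on the 3x3 convs keeps the size unchanged
    for _ in range(depth):
        sizes.append(maxpool_out(sizes[-1]))
    return sizes


print(encoder_sizes(256))  # [256, 128, 64, 32, 16]
print(encoder_sizes(250))  # [250, 125, 62, 31, 15]
```

For 256x256 inputs every stage divides evenly. With a 250x250 input the bottleneck is 15x15; upsampling it by 2 gives 30, which no longer matches the 31x31 skip tensor, so `torch.cat` in `up` would raise a size-mismatch error.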

    class last_conv(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(last_conv, self).__init__()
            # 1x1 convolution: per-pixel class scores
            self.conv1 = nn.Conv2d(in_ch, out_ch, 1)

        def forward(self, x):
            x = self.conv1(x)
            return x

    class Unet(nn.Module):
        def __init__(self, channels, classes):
            super(Unet, self).__init__()
            self.inp = input_conv(channels, 64)
            self.down1 = down(64, 128)
            self.down2 = down(128, 256)
            self.down3 = down(256, 512)
            self.down4 = down(512, 1024)
            self.up1 = up(1024, 512)
            self.up2 = up(512, 256)
            self.up3 = up(256, 128)
            self.up4 = up(128, 64)
            self.out = last_conv(64, classes)

        def forward(self, x):
            # encoder: keep each stage's output for the skip connections
            x1 = self.inp(x)
            x2 = self.down1(x1)
            x3 = self.down2(x2)
            x4 = self.down3(x3)
            x5 = self.down4(x4)
            # decoder: each up stage pairs with the matching encoder output
            x = self.up1(x5, x4)
            x = self.up2(x, x3)
            x = self.up3(x, x2)
            x = self.up4(x, x1)
            x = self.out(x)
            return x
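
A channel trace over the whole `Unet` above can catch wiring mistakes before any tensor is built. The plain-Python sketch below (hypothetical `trace` helper, counts only, no tensors) walks the encoder, keeps the skip channel counts, then checks each decoder stage in the order given, stopping at the first mismatch the way PyTorch would raise. It assumes `double_conv` itself is consistent (`conv2` declared with `out_ch` inputs). It also shows why each decoder stage needs its own `up` module: calling `up1` again for the last step feeds it 128 channels where its `ConvTranspose2d` expects 1024.

```python
# Channel bookkeeping for the Unet (counts only, no tensors).
ENCODER = [("inp", 3, 64), ("down1", 64, 128), ("down2", 128, 256),
           ("down3", 256, 512), ("down4", 512, 1024)]
UP = {"up1": (1024, 512), "up2": (512, 256),
      "up3": (256, 128), "up4": (128, 64)}


def trace(decoder_order, in_channels=3):
    """Return the first channel mismatch as a string, or None if all stages fit."""
    channels = in_channels
    skips = []
    for name, c_in, c_out in ENCODER:
        if c_in != channels:
            return f"{name}: expects {c_in} channels, gets {channels}"
        channels = c_out
        skips.append(channels)
    skips.pop()  # x5 is the bottleneck input to the decoder, not a skip
    for name in decoder_order:
        c_in, c_out = UP[name]
        if c_in != channels:  # ConvTranspose2d(c_in, c_out) sees `channels`
            return f"{name}: expects {c_in} channels, gets {channels}"
        skip = skips.pop()  # x4, x3, x2, x1 in that order
        if skip + c_out != c_in:  # torch.cat result feeds double_conv(c_in, c_out)
            return f"{name}: concat gives {skip + c_out}, expects {c_in}"
        channels = c_out
    return None


print(trace(["up1", "up2", "up3", "up4"]))  # None
print(trace(["up1", "up2", "up3", "up1"]))  # up1: expects 1024 channels, gets 128
```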

    model = Unet(3, 1)  # 3 input channels, 1 output class

This is the training loop:

    for epoch in range(5):
        for i, data in enumerate(trainloader):
            inputs, labels = data
            # move the batch to the GPU
            inputs = inputs.cuda()
            labels = labels.cuda()

            # forward + backward + optimize
            # zero the gradient buffers of all parameters
            optimizer.zero_grad()
            # forward pass
            outputs = model_pytorch(inputs)
            # calculate the loss
            loss = loss_function(outputs, labels)
            # backpropagation
            loss.backward()
            # update the parameters using the computed gradients
            optimizer.step()

            if (i + 1) % 5 == 0:  # print every 5 mini-batches
                # loss.item() returns the scalar loss as a Python float
                print('[%d, %5d] loss: %.4f' % (epoch, i + 1, loss.item()))
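
Printing the loss of a single batch every few steps is noisy. A common alternative, sketched below in plain Python with a hypothetical `LossLogger` class, is to accumulate the per-batch values and report their mean every `every` batches. In a loop like the one above it would be fed the per-batch loss as a Python float (`loss.item()` in current PyTorch) after `optimizer.step()`.

```python
class LossLogger:
    """Average per-batch losses and report every `every` batches."""

    def __init__(self, every=5):
        self.every = every
        self.total = 0.0
        self.count = 0

    def update(self, epoch, batch_index, loss_value):
        """Record one loss; return a report string every `every` batches, else None."""
        self.total += loss_value
        self.count += 1
        if (batch_index + 1) % self.every != 0:
            return None
        line = '[%d, %5d] loss: %.4f' % (epoch, batch_index + 1,
                                          self.total / self.count)
        self.total, self.count = 0.0, 0  # start a fresh window
        return line


logger = LossLogger(every=5)
for i, value in enumerate([1.0, 0.8, 0.6, 0.4, 0.2]):
    report = logger.update(0, i, value)
    if report:
        print(report)
```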