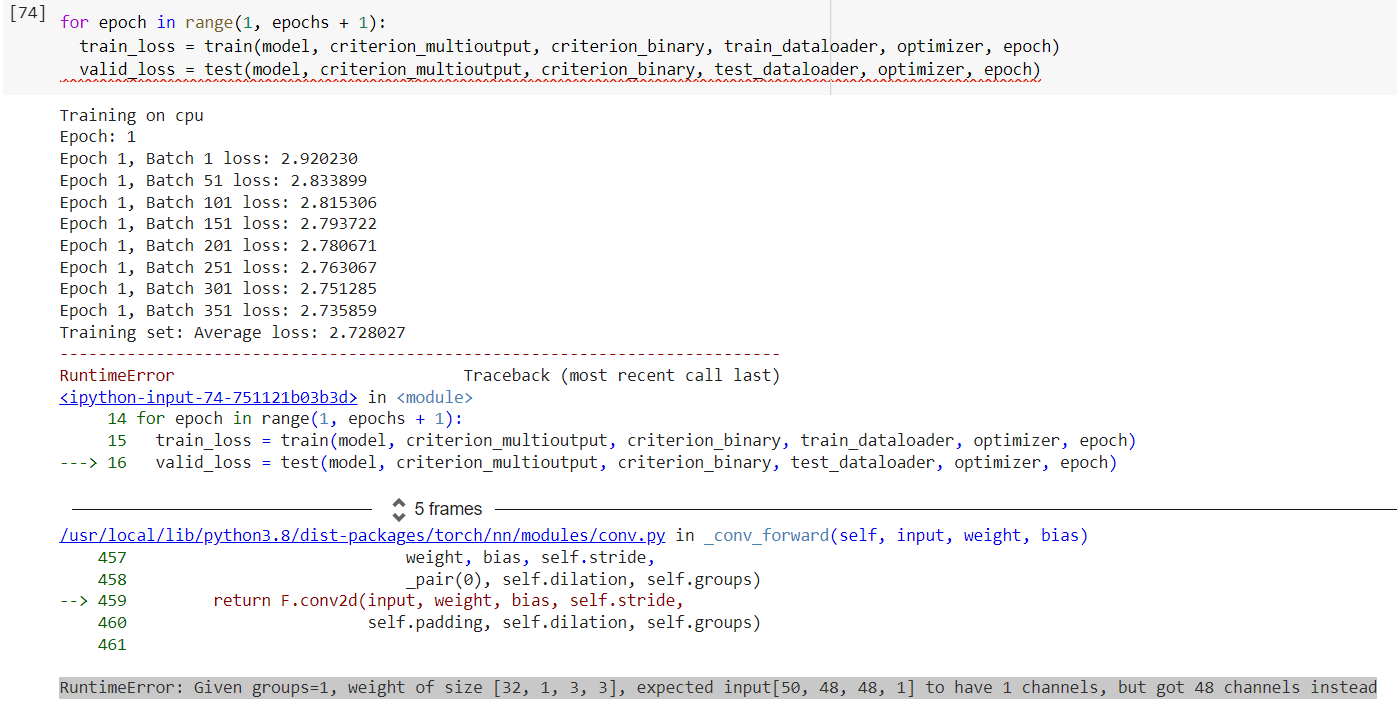

I am running into this error during testing. Training runs fine, but the error is raised as soon as I evaluate on the test data.

```python
import numpy as np
from torch.utils.data import Dataset, DataLoader


class test_dataset(Dataset):
    def __init__(self, age_label_test, gen_label_test, img_test):
        self.age_label_test = np.array(age_label_test)
        self.gen_label_test = np.array(gen_label_test)
        self.img_test = np.array(img_test)

    def __len__(self):
        return len(self.img_test)

    def __getitem__(self, index):
        age_label_test = self.age_label_test[index]
        gen_label_test = self.gen_label_test[index]
        img_test = self.img_test[index].reshape(48,48,1)
        #img = self.transform(X_train)
        return img_test, age_label_test, gen_label_test


test_data = test_dataset(y_age_test[:], y_gender_test[:], X_test[:])
test_dataloader = DataLoader(test_data, batch_size = 50, shuffle = True)
```
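One thing that may be worth checking is the layout of the batches this loader yields. The sketch below uses random stand-in data (`fake_images` is hypothetical, not the real `X_test`, and the flattened 48*48 input is an assumption) to show the shape that `reshape(48,48,1)` gives once samples are stacked into a batch, next to the channels-first layout `(N, C, H, W)` that `nn.Conv2d` documents for its input. Whether this is related to the error in the screenshot is only a guess.

```python
import numpy as np

# Hypothetical stand-in for X_test: 50 flattened 48x48 grayscale images.
fake_images = np.random.rand(50, 48 * 48).astype(np.float32)

# Same reshape as in __getitem__, stacked along a new batch axis the way
# the default DataLoader collation stacks samples.
batch_hwc = np.stack([img.reshape(48, 48, 1) for img in fake_images])
print(batch_hwc.shape)  # channels-last layout

# Channels-first variant: one channel, then height and width.
batch_chw = np.stack([img.reshape(1, 48, 48) for img in fake_images])
print(batch_chw.shape)  # channels-first layout
```

Printing `data.shape` for one batch from `test_dataloader` and from the training loader would show whether the two loaders agree.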

```python
import torch
import torch.nn as nn
from torchsummary import summary
import timm
from timm.models.layers.classifier import ClassifierHead
import torch.nn.functional as F


# Building Model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(in_channels = 1, out_channels = 32, kernel_size = 3, padding=1, stride=1)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2d(kernel_size = 2)
        self.conv2 = nn.Conv2d(in_channels = 32, out_channels = 64, kernel_size = 3, padding=1, stride=1)
        self.conv3 = nn.Conv2d(in_channels = 64, out_channels = 128, kernel_size = 3, padding=1, stride=1)
        self.conv4 = nn.Conv2d(in_channels = 128, out_channels = 256, kernel_size = 3, padding=1, stride=1)
        #nn.Flatten()
        # Four 2x2 max-pools reduce 48x48 to 3x3, hence 256*3*3 input features
        self.fc1 = nn.Linear(in_features = 256*3*3, out_features = 1152)
        self.fc2 = nn.Linear(in_features = 1152, out_features = 512)
        self.linear1 = nn.Linear(in_features = 512, out_features = age_features) # For age class output
        self.linear2 = nn.Linear(in_features = 512, out_features = gen_features) # For gender class output

    def forward(self, x):
        out = self.conv1(x)
        out = self.relu(out)
        out = self.maxpool(out)
        out = self.conv2(out)
        out = self.relu(out)
        out = self.maxpool(out)
        out = self.conv3(out)
        out = self.relu(out)
        out = self.maxpool(out)
        out = self.conv4(out)
        out = self.relu(out)
        out = self.maxpool(out)
        #print(out.shape)
        out = out.view(x.size(0),-1)
        out = F.relu(self.fc1(out))
        out = F.relu(self.fc2(out))
        label1 = self.linear1(out) # Age output
        label2 = self.linear2(out) # Gender output
        return {'label1': torch.softmax(label1,dim=1), 'label2': label2}
```

```python
age_accuracy_list = []
gender_accuracy_list = []
validation_loss_list = []


def test(model, criterion1, criterion2, test_dataloader, optimizer, epoch):
    # Switch the model to evaluation mode
    model.eval()
    correct_1 = 0
    correct_2 = 0
    total_1 = 0
    total_2 = 0
    valid_loss = 0

    with torch.no_grad():
        for batch_idx, (data, target1, target2) in enumerate(test_dataloader):
            data, target1, target2 = data.to(device), target1.to(device), target2.to(device)
            data = data.requires_grad_() # Load images(for accuracy)
            output = model(data.float())

            _, predicted1 = torch.max(output['label1'], 1)
            _, predicted2 = torch.max(output['label2'], 1)
            label1_hat = output['label1']
            label2_hat = output['label2']

            total_1 += target1.size(0)
            total_2 += target2.size(0)
            correct_1 += torch.sum(predicted1 == target1).item()
            correct_2 += torch.sum(predicted2 == target2).item()
            age_accuracy = 100 * correct_1 // total_1
            gender_accuracy = 100 * correct_2 // total_2

            # calculate loss
            loss1 = criterion1(label1_hat, target1)
            loss2 = criterion2(label2_hat, target2)
            loss = loss1+loss2
            # running mean of the per-batch loss
            valid_loss = valid_loss + ((1 / (batch_idx + 1)) * (loss.data - valid_loss))

    print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f} \tAge_Accuracy: {} \tGender_Accuracy: {}'.format(
        epoch, train_loss, valid_loss, age_accuracy, gender_accuracy))

    age_accuracy_list.append(age_accuracy)
    gender_accuracy_list.append(gender_accuracy)
    validation_loss_list.append(valid_loss)

    return valid_loss, age_accuracy, gender_accuracy
```
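For reference, the `valid_loss` update in `test` is an incremental (running) mean of the per-batch losses. A minimal standalone sketch of the same update rule, using made-up loss values (`batch_losses` and `running_mean` are hypothetical names, not part of the code above):

```python
def running_mean(values):
    """Average values one at a time with the same update rule as valid_loss."""
    mean = 0.0
    for idx, value in enumerate(values):
        # Move the current mean toward the new value by 1 / (number seen so far).
        mean = mean + (1 / (idx + 1)) * (value - mean)
    return mean


batch_losses = [0.9, 0.7, 0.8, 0.6]  # hypothetical per-batch losses
print(running_mean(batch_losses))
print(sum(batch_losses) / len(batch_losses))  # plain arithmetic mean, for comparison
```

Both prints should agree up to floating-point rounding. Note that this weights every batch equally, so a smaller final batch counts as much as a full one.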