Please see the code below.

```python
from collections import OrderedDict

import torch
import torch.nn as nn


class residualBlock(nn.Module):
    def __init__(self, in_channels=64, k=3, n=64, s=1):
        super(residualBlock, self).__init__()
        m = OrderedDict()
        m['conv1'] = nn.Conv2d(in_channels, n, k, stride=s, padding=1)
        m['bn1'] = nn.BatchNorm2d(n)
        m['ReLU1'] = nn.ReLU(inplace=True)
        m['conv2'] = nn.Conv2d(n, n, k, stride=s, padding=1)
        m['bn2'] = nn.BatchNorm2d(n)
        self.group1 = nn.Sequential(m)
        self.relu = nn.Sequential(nn.ReLU(inplace=True))

    def forward(self, x):
        # Skip connection: group1(x) must have the same shape as x for the addition
        out = self.group1(x) + x
        out = self.relu(out)
        return out
```

```python
def swish(x):
    # Not shown in the original post; assumed to be the usual x * sigmoid(x)
    return x * torch.sigmoid(x)


class Generator(nn.Module):
    def __init__(self, n_residual_blocks):
        super(Generator, self).__init__()
        self.n_residual_blocks = n_residual_blocks
        # self.upsample_factor = upsample_factor

        self.conv1 = nn.Conv2d(6, 64, 9, stride=1, padding=4)  # 9 4
        self.bn1 = nn.BatchNorm2d(64)
        self.relu1 = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(64, 64, 3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(64)
        self.relu2 = nn.ReLU(inplace=True)
        self.conv3 = nn.Conv2d(64, 64, 3, stride=1, padding=1)
        self.bn3 = nn.BatchNorm2d(64)
        self.relu3 = nn.ReLU(inplace=True)

        for i in range(self.n_residual_blocks):
            self.add_module('residual_block' + str(i + 1), residualBlock())

        self.conv4 = nn.Conv2d(64, 32, 3, stride=1, padding=1)
        self.bn4 = nn.BatchNorm2d(32)
        self.relu4 = nn.ReLU(inplace=True)
        self.conv5 = nn.Conv2d(32, 32, 3, stride=1, padding=1)
        self.bn5 = nn.BatchNorm2d(32)
        self.relu5 = nn.ReLU(inplace=True)
        self.conv6 = nn.Conv2d(32, 32, 3, stride=1, padding=1)  # 64,2,3 for pair
        self.bn6 = nn.BatchNorm2d(32)
        self.relu6 = nn.ReLU(inplace=True)

        self.fc = nn.Linear(32*32*32, 2*32*32)  # 32768, 2048
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        x = swish(self.relu1(self.bn1(self.conv1(x))))
        print("1= ", x.size())
        x = swish(self.relu2(self.bn2(self.conv2(x))))
        print("2= ", x.size())
        x = swish(self.relu3(self.bn3(self.conv3(x))))
        print("3= ", x.size())

        y = x.clone()
        for i in range(self.n_residual_blocks):
            y = getattr(self, 'residual_block' + str(i + 1))(y)

        x = swish(self.relu4(self.bn4(self.conv4(y))))  # + x
        print("4= ", x.size())
        x = swish(self.relu5(self.bn5(self.conv5(x))))
        print("5= ", x.size())
        output_map = swish(self.relu6(self.bn6(self.conv6(x))))
        print("output_map= ", output_map.size())

        flattened_output = output_map.view(output_map.size(0), -1)  # Flatten the output
        fc_output = self.fc(flattened_output)
        print("fc_output= ", fc_output.size())
        fc_output = self.sigmoid(fc_output)  # Apply sigmoid activation
        fc_output = fc_output.view(x.size(0), 2, 128*128)  # Reshape output to (batch_size, 2, 128, 128)
        return fc_output
```

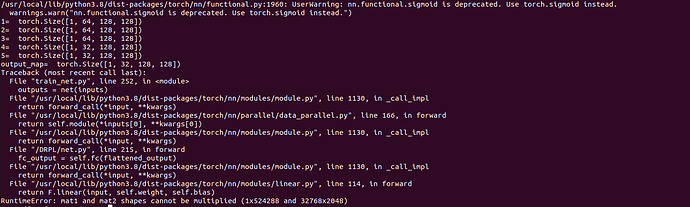

I am getting error as

My input images are 128x128. There are two input images and, correspondingly, two output images of the same size.
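A quick way to locate a shape problem like this is to trace the tensor sizes by hand. Every convolution in the Generator uses stride 1 with "same" padding (kernel 9 with padding 4, kernel 3 with padding 1), so a 128x128 input should stay 128x128 all the way to `conv6`. The torch-free sketch below does that arithmetic using the `nn.Conv2d` output-size formula; the helper names `conv_out`, `trace` and `GENERATOR_CONVS` are hypothetical, and it assumes the two images arrive stacked as one `(batch, 6, 128, 128)` tensor, as the 6 input channels of `conv1` suggest.

```python
# Hypothetical helper: spatial size after nn.Conv2d along one axis (dilation=1),
# following the output-shape formula in the PyTorch nn.Conv2d documentation.
def conv_out(size, kernel, stride=1, padding=0):
    return (size + 2 * padding - (kernel - 1) - 1) // stride + 1

# (out_channels, kernel, stride, padding) for conv1..conv6 as written in the Generator.
# The residual blocks (64 -> 64, k=3, s=1, padding=1) leave the shape unchanged,
# so they are omitted from the trace.
GENERATOR_CONVS = [(64, 9, 1, 4), (64, 3, 1, 1), (64, 3, 1, 1),
                   (32, 3, 1, 1), (32, 3, 1, 1), (32, 3, 1, 1)]

def trace(height, width, channels=6):
    """Return (channels, height, width) of output_map for a given input size."""
    for out_c, k, s, p in GENERATOR_CONVS:
        channels = out_c
        height = conv_out(height, k, s, p)
        width = conv_out(width, k, s, p)
    return channels, height, width

c, h, w = trace(128, 128)
flat = c * h * w
print("output_map for 128x128 input:", (c, h, w))
print("flattened features:", flat)
print("fc in_features:", 32 * 32 * 32, "(matches a 32x32 input:", trace(32, 32), ")")
print("fc out_features:", 2 * 32 * 32, "but the final view needs", 2 * 128 * 128)
print("weights in a Linear(flat, 2*128*128):", flat * 2 * 128 * 128)
```

Under these assumptions the flattened map has 32 * 128 * 128 = 524288 features, while `nn.Linear(32*32*32, 2*32*32)` expects 32768 inputs (the size produced by a 32x32 input) and returns only 2048 outputs, which also cannot be viewed as `(batch, 2, 128*128)`. Simply enlarging the linear layer would need about 17 billion weights (roughly 64 GiB in float32), so it may be worth considering the option hinted at by the `#64,2,3 for pair` comment: a final convolution with 2 output channels already produces `(batch, 2, 128, 128)` without any linear layer.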

Please tell me where I am going wrong.