Can anyone help me?

import numpy as np

from PIL import Image

import glob

import torch

import torch.nn as nn

import torch.optim

from torch.autograd import Variable

from torch.utils.data import DataLoader, Dataset

import torch.nn.functional as F

from torchvision import transforms as T

def read_data():

"""

read img_file data

:return: img_path, img_name

"""

train_data = glob.glob('../train-images/*.jpeg')

train_label = np.array(

[train_data[index].split('/')[-1].split('.')[0].split('_')[0] for index in

range(len(train_data))])

return train_data, train_label

out_place = (

"0", "1", "2", "3", "4", "5", "6", "7", "8", "9", 'a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q',

'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z', 'A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M', 'N', 'O', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z')

transform = T.Compose([

# T.Resize((128,128)),

T.ToTensor(),

T.Normalize(std=[0.5, 0.5, 0.5], mean=[0.5, 0.5, 0.5])

])

def one_hot(word: str) -> np.array:

tmp = []

# for i in range(len(word)):

for i in range(4):

item_tmp = [0 for x in range(144)] # [0 for x in range(len(word) * len(out_place))]

word_idx = out_place.index(word[i].lower())

item_tmp[i*36+word_idx] = 1

tmp.append(item_tmp)

return np.array(tmp)

class DataSet(Dataset):

def __init__(self):

self.img_path, self.label = read_data()

def __getitem__(self, index):

img_path = self.img_path[index]

img = Image.open(img_path).convert("RGB")

img = transform(img)

label = torch.from_numpy(one_hot(self.label[index])).float()

return img, label

def __len__(self):

return len(self.img_path)

data = DataSet()

data_loader = DataLoader(data, shuffle=True, batch_size=64, drop_last=True)

class CNN_Network(nn.Module):

def __init__(self):

super(CNN_Network, self).__init__()

self.layer1 = nn.Sequential(

nn.Conv2d(3, 16, stride=1, kernel_size=3, padding=1),

nn.BatchNorm2d(16),

nn.ReLU(inplace=True)

)

self.layer2 = nn.Sequential(

nn.Conv2d(16, 32, stride=1, kernel_size=3, padding=1),

nn.BatchNorm2d(32),

nn.ReLU(inplace=True),

nn.MaxPool2d(stride=2, kernel_size=2), # 30 80

)

self.layer3 = nn.Sequential(

nn.Conv2d(32, 64, stride=1, kernel_size=3, padding=1),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True),

nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=2, stride=2), # 15 40

)

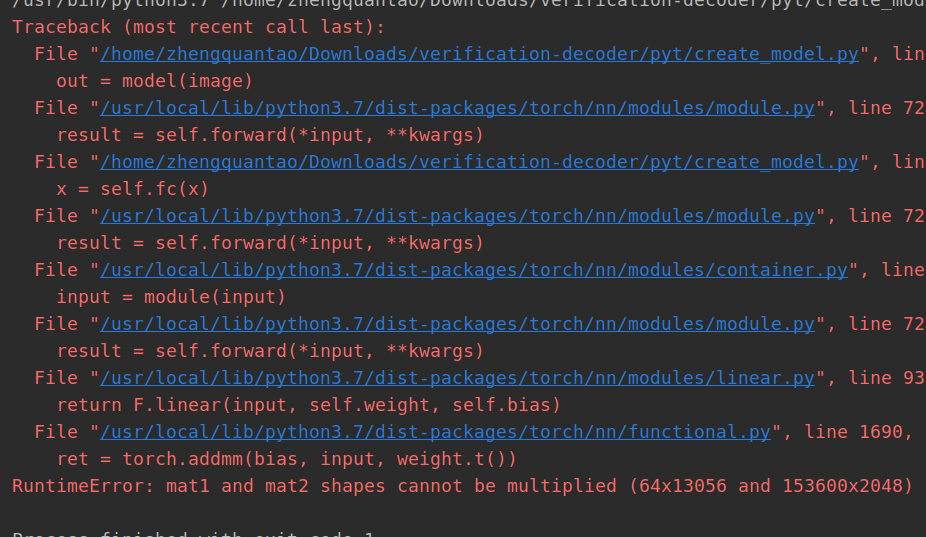

self.fc = nn.Sequential(

nn.Linear(256 * 15 * 40, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, 1024),

nn.ReLU(inplace=True),

nn.Linear(1024, 40)

)

def forward(self, x):

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = x.view(x.size(0), -1)

x = self.fc(x)

x = F.softmax(x, dim=-1)

return x

model = CNN_Network()

model.train()

optimizer = torch.optim.Adam(model.parameters(), lr=0.002)

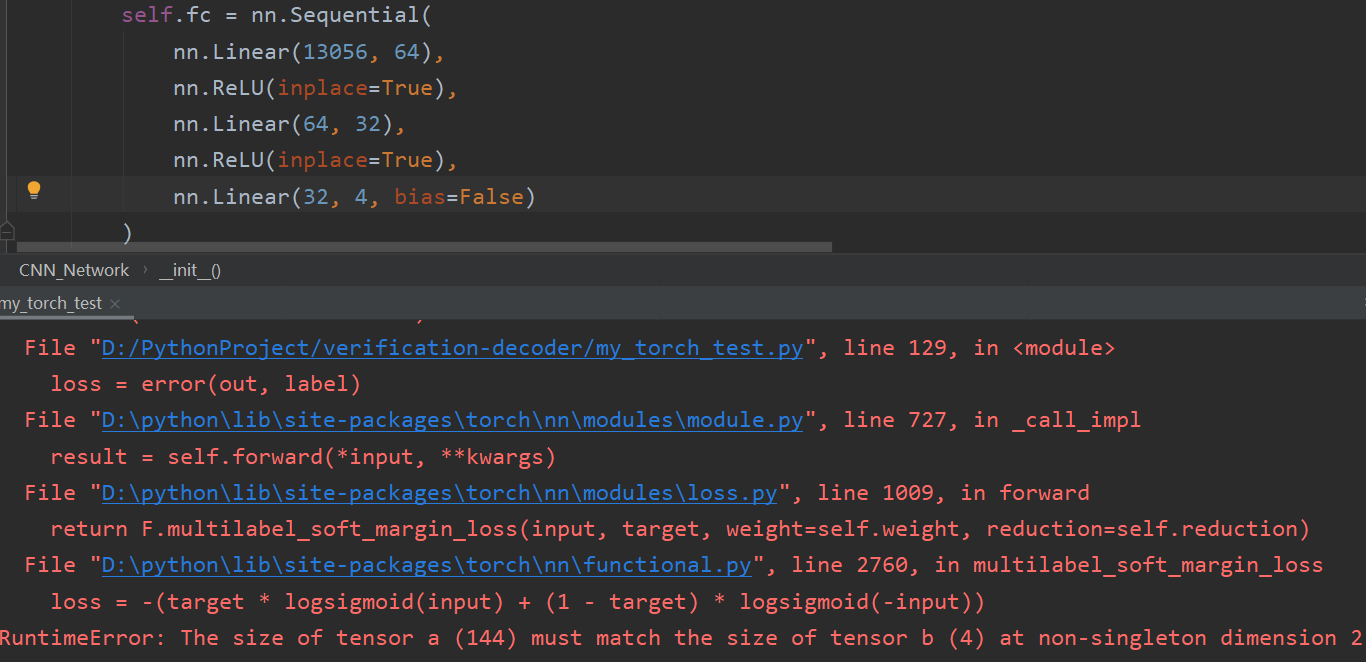

error = nn.MultiLabelSoftMarginLoss()

for i in range(5):

for (batch_x, batch_y) in data_loader:

optimizer.zero_grad()

image = Variable(batch_x)

label = Variable(batch_y)

out = model(image)

loss = error(out, label)

print(loss)

loss.backward()

optimizer.step()

torch.save(model.state_dict(), "model.pth")
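The target built by `one_hot` puts character `i` of the label into positions `i*36` to `i*36 + 35` of a 144-wide vector. Below is a minimal NumPy sketch of that layout, flattened into one vector. It uses hypothetical helpers `encode` and `decode` that are not part of the original code, and it assumes 4-character labels drawn from 0-9 and a-z. The sketch shows how a label like `okzi` becomes a 144-long multi-hot vector. It also shows how a 144-long score vector can be read back as text by taking the argmax inside each 36-wide slot.

```python
import numpy as np

# First 36 symbols of `out_place`; labels are lower-cased before encoding.
CHARSET = "0123456789abcdefghijklmnopqrstuvwxyz"
NUM_CHARS = 4  # assumed fixed CAPTCHA length, as in one_hot()


def encode(word: str) -> np.ndarray:
    """Hypothetical helper: multi-hot target, slot i covers [i*36, (i+1)*36)."""
    vec = np.zeros(NUM_CHARS * len(CHARSET), dtype=np.float32)
    for i, ch in enumerate(word[:NUM_CHARS].lower()):
        vec[i * len(CHARSET) + CHARSET.index(ch)] = 1.0
    return vec


def decode(scores: np.ndarray) -> str:
    """Hypothetical helper: argmax within each 36-wide slot, mapped back to characters."""
    slots = np.asarray(scores).reshape(NUM_CHARS, len(CHARSET))
    return "".join(CHARSET[j] for j in slots.argmax(axis=1))


print(decode(encode("okzi")))  # okzi
```

For a trained model with 144 outputs, one row of `model(batch_x)` (converted with `.detach().numpy()`) could be passed to `decode`. Applying the argmax to raw logits or to sigmoid probabilities gives the same result, because the sigmoid is monotonic.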

My image size is [70, 26], and each file is named after the text in the image, e.g. `okzi.jpeg`.
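The input size of the first `nn.Linear` has to equal the flattened output of `layer3`, and that depends on the image size. The plain-Python sketch below traces height and width through the conv and pool layers of `CNN_Network`. It uses the standard output-length formula `(n + 2*padding - kernel) // stride + 1` (dilation 1, no ceil mode). It assumes that [70, 26] means 70 pixels wide by 26 pixels tall, which is how PIL reports `Image.size`. The function names `conv_out` and `flatten_size` are hypothetical.

```python
from typing import Tuple


def conv_out(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of Conv2d / MaxPool2d along one axis (dilation 1, ceil_mode=False)."""
    return (n + 2 * padding - kernel) // stride + 1


def flatten_size(height: int, width: int) -> Tuple[int, Tuple[int, int, int]]:
    """Trace H and W through layer1..layer3 of CNN_Network; return (features, (C, H, W))."""
    h, w = height, width
    # layer1: one 3x3 conv, stride 1, padding 1 -> size unchanged
    h, w = conv_out(h, 3, 1, 1), conv_out(w, 3, 1, 1)
    # layer2: 3x3 conv (unchanged), then 2x2 max-pool with stride 2 -> halved, rounded down
    h, w = conv_out(h, 3, 1, 1), conv_out(w, 3, 1, 1)
    h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)
    # layer3: two 3x3 convs (unchanged), then another 2x2 max-pool
    for _ in range(2):
        h, w = conv_out(h, 3, 1, 1), conv_out(w, 3, 1, 1)
    h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)
    channels = 128  # out_channels of the last Conv2d in layer3
    return channels * h * w, (channels, h, w)


print(flatten_size(26, 70))   # (13056, (128, 6, 17))
print(flatten_size(60, 160))  # (76800, (128, 15, 40))
```

For 70x26 images the first layer therefore needs `128 * 6 * 17` (13056) inputs. The `# 30 80` / `# 15 40` comments in the code correspond to a 60x160 input, and even then the size would be `128 * 15 * 40`, not `256 * 15 * 40`. The same number can be checked empirically by passing `torch.zeros(1, 3, 26, 70)` through `layer1` to `layer3` and printing the resulting shape.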

This is my first time using PyTorch, so I don't understand this problem.
