I get this error when I run my code: "RuntimeError: mat1 dim 1 must match mat2 dim 0". Can anyone help me solve this problem?

The model is:

class Flatten(nn.Module):
    def forward(self, input):
        return input.view(input.size(0), -1)


class Unflatten(nn.Module):
    def __init__(self, channel, height, width):
        super(Unflatten, self).__init__()
        self.channel = channel
        self.height = height
        self.width = width

    def forward(self, input):
        return input.view(input.size(0), self.channel, self.height, self.width)

class ConvVAE(nn.Module):
    def __init__(self, latent_size):
        super(ConvVAE, self).__init__()
        self.latent_size = latent_size

        self.encoder = nn.Sequential(
            nn.Conv2d(19, 64, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            Flatten(),
            nn.Linear(80000, 1024),
            nn.ReLU()
        )

        # hidden => mu
        self.fc1 = nn.Linear(1024, self.latent_size)

        # hidden => logvar
        self.fc2 = nn.Linear(1024, self.latent_size)

        self.decoder = nn.Sequential(
            nn.Linear(self.latent_size, 1024),
            nn.ReLU(),
            nn.Linear(1024, 80000),
            nn.ReLU(),
            Unflatten(128, 25, 25),
            nn.ReLU(),
            nn.ConvTranspose2d(128, 64, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.ConvTranspose2d(64, 19, kernel_size=3, stride=2, padding=1),
            nn.Sigmoid()
        )

    def encode(self, x):
        h = self.encoder(x)
        mu, logvar = self.fc1(h), self.fc2(h)
        return mu, logvar

    def decode(self, z):
        z = self.decoder(z)
        return z

    def reparameterize(self, mu, logvar):
        if self.training:
            std = torch.exp(0.5 * logvar)
            eps = torch.randn_like(std)
            return eps.mul(std).add_(mu)
        else:
            return mu

    def forward(self, x):
        mu, logvar = self.encode(x)
        z = self.reparameterize(mu, logvar)
        return self.decode(z), mu, logvar

The main code is:

def main():
    parser = argparse.ArgumentParser(description='Convolutional VAE MNIST Example')
    parser.add_argument('--result_dir', type=str, default='results', metavar='DIR',
                        help='output directory')
    parser.add_argument('--batch_size', type=int, default=100, metavar='N',
                        help='input batch size for training (default: 100)')
    parser.add_argument('--epochs', type=int, default=10, metavar='N',
                        help='number of epochs to train (default: 10)')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    parser.add_argument('--resume', default='', type=str, metavar='PATH',
                        help='path to latest checkpoint (default: None)')

    # model options
    parser.add_argument('--latent_size', type=int, default=32, metavar='N',
                        help='latent vector size of encoder')
    parser.add_argument("-f", "--fff", help="a dummy argument to fool ipython", default="1")

    args = parser.parse_args()

    torch.manual_seed(args.seed)

    kwargs = {'num_workers': 1, 'pin_memory': True} if cuda else {}

    train_dataset = CustomDataset(train_image_paths, train_mask_paths, transform=transform, train=True)
    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=1, shuffle=True, num_workers=0)

    test_dataset = CustomDataset(test_image_paths, test_mask_paths, transform=transform, train=False)
    test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=1, shuffle=False, num_workers=0)

    model = ConvVAE(args.latent_size).to(device)
    optimizer = optim.Adam(model.parameters(), lr=1e-3)

    start_epoch = 0
    best_test_loss = np.finfo('f').max

    # optionally resume from a checkpoint
    if args.resume:
        if os.path.isfile(args.resume):
            print('=> loading checkpoint %s' % args.resume)
            checkpoint = torch.load(args.resume)
            start_epoch = checkpoint['epoch'] + 1
            best_test_loss = checkpoint['best_test_loss']
            model.load_state_dict(checkpoint['state_dict'])
            optimizer.load_state_dict(checkpoint['optimizer'])
            print('=> loaded checkpoint %s' % args.resume)
        else:
            print('=> no checkpoint found at %s' % args.resume)

    writer = SummaryWriter()

    for epoch in range(start_epoch, args.epochs):
        train_loss = train(epoch, model, train_loader, optimizer, args)
        test_loss = test(epoch, model, test_loader, writer, args)

        # logging
        writer.add_scalar('train/loss', train_loss, epoch)
        writer.add_scalar('test/loss', test_loss, epoch)

        print('Epoch [%d/%d] loss: %.3f val_loss: %.3f' % (epoch + 1, args.epochs, train_loss, test_loss))

        is_best = test_loss < best_test_loss
        best_test_loss = min(test_loss, best_test_loss)
        save_checkpoint({
            'epoch': epoch,
            'best_test_loss': best_test_loss,
            'state_dict': model.state_dict(),
            'optimizer': optimizer.state_dict(),
        }, is_best)

        with torch.no_grad():
            sample = torch.randn(64, 32).to(device)
            sample = model.decode(sample).cpu()
            img = make_grid(sample)
            writer.add_image('sampling', img, epoch)
            save_image(sample.view(64, 1, 28, 28), 'results/sample_' + str(epoch) + '.png')


if __name__ == '__main__':
    main()

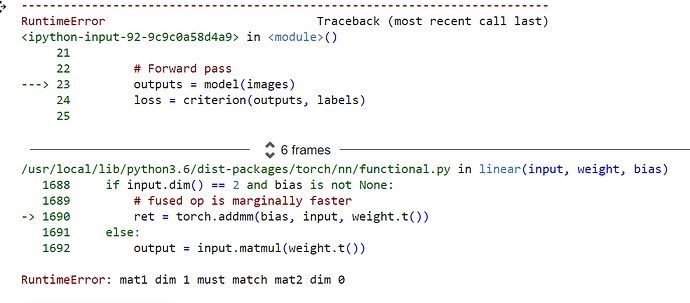

And the error message is:

train: 0%| | 0/7 [00:00<?, ?it/s]
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-10-ea45d861fe2d> in <module>()
     84
     85 if __name__ == '__main__':
---> 86     main()
     87

9 frames
/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py in linear(input, weight, bias)
   1688     if input.dim() == 2 and bias is not None:
   1689         # fused op is marginally faster
-> 1690         ret = torch.addmm(bias, input, weight.t())
   1691     else:
   1692         output = input.matmul(weight.t())

RuntimeError: mat1 dim 1 must match mat2 dim 0
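
The traceback ends in `linear`, so the mismatch is most likely at `nn.Linear(80000, 1024)`: the flattened encoder output does not have 80000 features per sample. 80000 equals 128 * 25 * 25, which only holds when the input images are 100x100. Below is a minimal sketch for checking this, assuming the conv layers are exactly those in the encoder above. The helper names `conv_out_size` and `flattened_features` and the 128x128 size are hypothetical, used only for illustration.

```python
import torch
import torch.nn as nn


def conv_out_size(size, kernel_size=3, stride=2, padding=1):
    """Output length along one spatial dim for nn.Conv2d (dilation=1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def flattened_features(conv_stack, in_channels, height, width):
    """Push a dummy batch through the conv layers and count features per sample."""
    with torch.no_grad():
        dummy = torch.zeros(1, in_channels, height, width)
        return conv_stack(dummy).flatten(1).size(1)


# Same conv layers as the encoder in the post, without Flatten/Linear.
conv_stack = nn.Sequential(
    nn.Conv2d(19, 64, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
    nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
)

# 100x100 input: 100 -> 50 -> 25, so 128 * 25 * 25 = 80000 features.
print(flattened_features(conv_stack, 19, 100, 100))

# Hypothetical 128x128 input: 128 -> 64 -> 32, so 128 * 32 * 32 = 131072 features.
print(flattened_features(conv_stack, 19, 128, 128))
```

Printing `x.shape` for one batch from `train_loader` and passing that height and width to `flattened_features` gives the value to use in place of 80000. The `Unflatten(128, 25, 25)` and `nn.Linear(1024, 80000)` in the decoder would need the matching numbers.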