How to map 1-D “y” labels (0-4) vector to logits output from NN for a Loss function?

Hello,

I am new to PyTorch and ML. After reading many forums and materials, I cannot find the best way to get a solution.

I have following INPUTS:

train file stored in h5 format with following labels:

[y] - ground truth labels (integers 0,1,2,3,4) - isolated as separate tensor for training purposes (Y), through pandas DF

[x1:x120] - feature vectors x1-x120, which gives 120 features as input vector, isolated as well for training (X1-X120 tensor coming from pandas DF)

around 45000 training instances (rows)

So I have constructed fc NN like this:

L1 - [120-100] nodes for input vectors

L2 - [100-50]

L3 - [50-20]

L4 - [20-5] nodes for outputs, either logits or probabilities after applying softmax

Each layer being activated through ReLU, no softmax at the end in 1 version, just the output itself (logits, right?)

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# an affine operation: y = Wx + b

self.fc1 = nn.Linear(120, 100)

self.fc2 = nn.Linear(100, 50)

self.fc3 = nn.Linear(50, 20)

self.fc4 = nn.Linear(20, 5)

def forward(self, x):

#x = x.view(-1, 120)

out1 = F.relu(self.fc1(x))

out2 = F.relu(self.fc2(out1))

out3 = F.relu(self.fc3(out2))

y_pred = self.fc4(out3)

return F.log_softmax(y_pred)

#return y_pred

model = Net()

print(model)

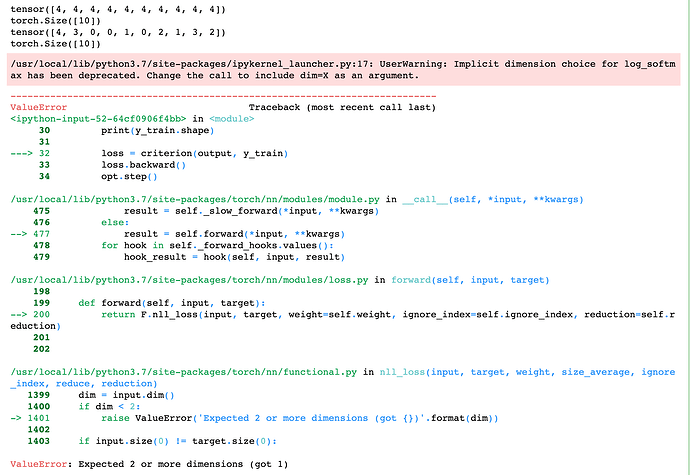

What I am struggling with, is how to convert either logits or 1-D y labels array to match dimensions for CrossEntropyLoss or even NLL when applied Softmax?

It gets runtime error - multi target not supported etc… (1D vs 5D)

Shall I convert ground truth labels to one-hot coding, having some array like:

y

[00100] = 2

[01000] = 1

[00001] = 4

???

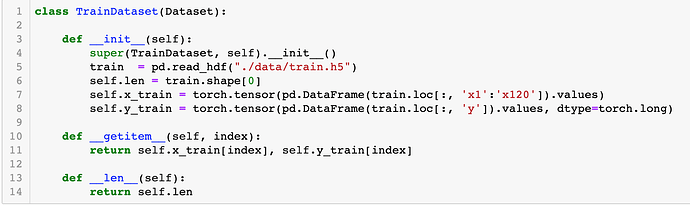

My Data Loader:

dataset = TrainDataset()

train_loader = DataLoader(dataset=dataset,

batch_size=batch_size,

shuffle=True,

num_workers=2)

My Training loop:

///

criterion = torch.nn.NLLLoss()

#criterion = torch.nn.CrossEntropyLoss()

for epoch in range(num_epochs):

for batch_idx, (x_train, y_train) in enumerate(train_loader):

x_train, y_train = Variable(x_train), Variable(y_train)

opt.zero_grad()

output = model(x_train)

print(output)

#y_train = y_train.squeeze_()

loss = criterion(output, y_train)

loss.backward()

opt.step()

print(‘Epoch %d: Loss %.5lf’ % (epoch, loss))

///

I tried to squeeze_ the y labels, but it does not make sense and I get same values over iterations…

I am really lost :(( Can you help?