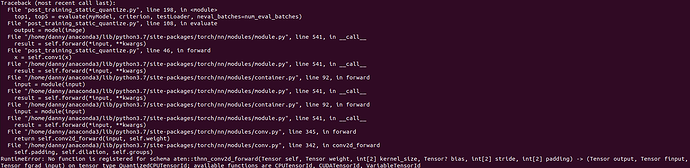

Hello! I’m following the quantization tutorial, and I got the same error.

I tried a simple Conv + BN + ReLU model like this:

    import torch
    import torch.nn as nn
    from torch.quantization import QuantStub, DeQuantStub

    class ConvBNReLU(nn.Sequential):
        def __init__(self, in_channel, out_channel, kernel_size, stride):
            # "same" padding for odd kernel sizes
            padding = (kernel_size - 1) // 2
            super(ConvBNReLU, self).__init__(
                nn.Conv2d(
                    in_channel,
                    out_channel,
                    kernel_size,
                    stride,
                    padding,
                    bias=False
                ),
                nn.BatchNorm2d(out_channel),
                nn.ReLU(inplace=False)
            )

    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            # input size 3 * 32 * 32
            self.conv1 = ConvBNReLU(3, 16, 3, 1)
            self.conv2 = ConvBNReLU(16, 32, 3, 1)
            self.quant = QuantStub()
            self.dequant = DeQuantStub()
            self.out = nn.Linear(32 * 32 * 32, 10)

        def forward(self, x):
            x = self.quant(x)
            x = self.conv1(x)
            x = self.conv2(x)
            x = x.contiguous()
            x = x.view(-1, 32 * 32 * 32)
            x = self.out(x)
            x = self.dequant(x)
            return x

        def fuse_model(self):
            # fuse Conv + BN + ReLU inside every ConvBNReLU block
            for m in self.modules():
                if type(m) == ConvBNReLU:
                    torch.quantization.fuse_modules(m, ['0', '1', '2'], inplace=True)

Model after adding observers:

    ConvBNReLU(
      (0): ConvBnReLU2d(
        (0): Conv2d(
          3, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False
          (observer): MinMaxObserver(min_val=None, max_val=None)
        )
        (1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(
          (observer): MinMaxObserver(min_val=None, max_val=None)
        )
      )
      (1): Identity()
      (2): Identity()
    )

Model after quantized convert:

    ConvBNReLU(
      (0): ConvBnReLU2d(
        (0): Conv2d(
          3, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False
          (observer): MinMaxObserver(min_val=-3.1731998920440674, max_val=3.2843430042266846)
        )
        (1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(
          (observer): MinMaxObserver(min_val=0.0, max_val=13.862381935119629)
        )
      )
      (1): Identity()
      (2): Identity()
    )

The rest of the code just follows the tutorial.
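
For reference, the post-training static quantization flow described in the tutorial looks roughly like the sketch below. This is only an illustration under assumptions, not the exact script used here: `TinyNet` is a hypothetical, smaller stand-in for the `CNN` above, `quantize_static` is a made-up helper name, the calibration batches are random tensors, and the qconfig is taken from whichever quantized engine is currently active rather than hard-coding `'fbgemm'`.

```python
import torch
import torch.nn as nn
from torch.quantization import QuantStub, DeQuantStub


class ConvBNReLU(nn.Sequential):
    def __init__(self, in_channel, out_channel, kernel_size, stride):
        padding = (kernel_size - 1) // 2
        super().__init__(
            nn.Conv2d(in_channel, out_channel, kernel_size, stride, padding, bias=False),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(inplace=False),
        )


class TinyNet(nn.Module):
    """Hypothetical stand-in for the CNN above, kept small for illustration."""

    def __init__(self):
        super().__init__()
        self.quant = QuantStub()
        self.conv1 = ConvBNReLU(3, 16, 3, 1)
        self.dequant = DeQuantStub()

    def forward(self, x):
        return self.dequant(self.conv1(self.quant(x)))


def quantize_static(model, calibration_batches):
    """Hypothetical helper: eval -> fuse -> prepare -> calibrate -> convert."""
    # Switch to eval mode before fusing, as done for post-training quantization.
    model.eval()
    for m in model.modules():
        if type(m) == ConvBNReLU:
            torch.quantization.fuse_modules(m, ['0', '1', '2'], inplace=True)

    # Assumption: use the active quantized engine (the tutorial uses 'fbgemm').
    engine = torch.backends.quantized.engine
    model.qconfig = torch.quantization.get_default_qconfig(engine)

    # Insert observers, then run a few batches so they record activation ranges.
    torch.quantization.prepare(model, inplace=True)
    with torch.no_grad():
        for batch in calibration_batches:
            model(batch)

    # Swap observed float modules for quantized ones, modifying the model in place.
    torch.quantization.convert(model, inplace=True)
    return model


torch.manual_seed(0)
# Random data standing in for a real calibration set (assumption).
batches = [torch.randn(4, 3, 32, 32) for _ in range(2)]
quantized = quantize_static(TinyNet(), batches)
print(quantized)
```

Printing the returned model is a quick way to check which module types ended up in each block after `convert`.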

I wonder why the layers were not converted to quantized layers.