Sir this is my model architecture

Encoder

[Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(512, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(1024, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))]

[Conv2d(1024, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))]

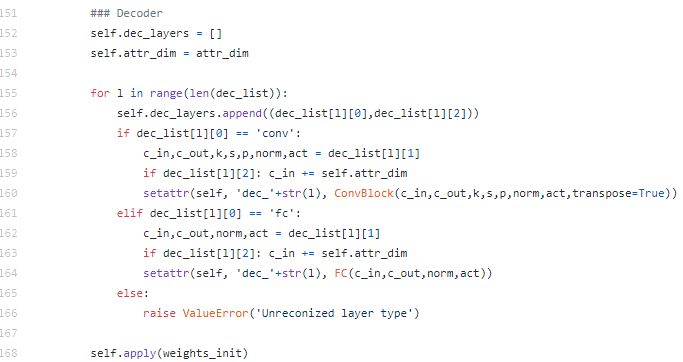

Decoder

[ConvTranspose2d(1027, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(1024, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(64, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), Tanh()]

Feature Discriminator

[Linear(in_features=1024, out_features=512, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=512, out_features=256, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=256, out_features=128, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=128, out_features=64, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=64, out_features=3, bias=True)]

Pixel Discriminator

[Conv2d(3, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(16, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(32, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=16384, out_features=512, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=512, out_features=1, bias=True)]

[Linear(in_features=512, out_features=3, bias=True)]

when i set the input features as 16384, the architecture is now:

Encoder

[Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(512, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[Conv2d(1024, 16384, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))]

[Conv2d(1024, 16384, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))]

Decoder

[ConvTranspose2d(16387, 1024, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(1024, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True), LeakyReLU(negative_slope=0.2)]

[ConvTranspose2d(64, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), Tanh()]

Feature Discriminator

[Linear(in_features=16384, out_features=512, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=512, out_features=256, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=256, out_features=128, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=128, out_features=64, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=64, out_features=3, bias=True)]

Pixel Discriminator

[Conv2d(3, 16, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(16, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(32, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1)), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=16384, out_features=512, bias=True), LeakyReLU(negative_slope=0.2)]

[Linear(in_features=512, out_features=1, bias=True)]

[Linear(in_features=512, out_features=3, bias=True)]

and i get the below error:

RuntimeError: size mismatch, m1: [96 x 262144], m2: [16384 x 512] at /opt/conda/conda-bld/pytorch_1556653145446/work/aten/src/THC/generic/THCTensorMathBlas.cu:268