========== fold: 0 training ==========

========== fold: 0 training ==========

CQT kernels created, time used = 0.0120 seconds

CQT kernels created, time used = 0.0118 seconds

Downloading: “https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b7_ns-1dbc32de.pth” to /root/.cache/torch/hub/checkpoints/tf_efficientnet_b7_ns-1dbc32de.pthRuntimeError Traceback (most recent call last)

in

1 if name == ‘main’:

----> 2 main()in main()

19 for fold in range(CFG.n_fold):

20 if fold in CFG.trn_fold:

—> 21 _oof_df = train_loop(train, fold)

22 oof_df = pd.concat([oof_df, _oof_df])

23 LOGGER.info(f"========== fold: {fold} result ==========")in train_loop(folds, fold)

71

72 # eval

—> 73 avg_val_loss, preds = valid_fn(valid_loader, model, criterion, device)

74

75 if isinstance(scheduler, ReduceLROnPlateau):in valid_fn(valid_loader, model, criterion, device)

103 preds =

104 start = end = time.time()

→ 105 for step, (images, labels) in enumerate(valid_loader):

106 # measure data loading time

107 data_time.update(time.time() - end)/opt/conda/lib/python3.7/site-packages/torch/utils/data/dataloader.py in next(self)

433 if self._sampler_iter is None:

434 self._reset()

→ 435 data = self._next_data()

436 self._num_yielded += 1

437 if self._dataset_kind == _DatasetKind.Iterable and \/opt/conda/lib/python3.7/site-packages/torch/utils/data/dataloader.py in _next_data(self)

1083 else:

1084 del self._task_info[idx]

→ 1085 return self._process_data(data)

1086

1087 def _try_put_index(self):/opt/conda/lib/python3.7/site-packages/torch/utils/data/dataloader.py in _process_data(self, data)

1109 self._try_put_index()

1110 if isinstance(data, ExceptionWrapper):

→ 1111 data.reraise()

1112 return data

1113/opt/conda/lib/python3.7/site-packages/torch/_utils.py in reraise(self)

426 # have message field

427 raise self.exc_type(message=msg)

→ 428 raise self.exc_type(msg)

429

430RuntimeError: Caught RuntimeError in DataLoader worker process 0.

Original Traceback (most recent call last):

File “/opt/conda/lib/python3.7/site-packages/torch/utils/data/_utils/worker.py”, line 198, in _worker_loop

data = fetcher.fetch(index)

File “/opt/conda/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py”, line 47, in fetch

return self.collate_fn(data)

File “/opt/conda/lib/python3.7/site-packages/torch/utils/data/_utils/collate.py”, line 63, in default_collate

return default_collate([torch.as_tensor(b) for b in batch])

File “/opt/conda/lib/python3.7/site-packages/torch/utils/data/_utils/collate.py”, line 55, in default_collate

return torch.stack(batch, 0, out=out)

RuntimeError: stack expects each tensor to be equal size, but got [182, 193] at entry 0 and at entry 1

Thank you very much for your help.

It may be a little difficult to understand. I will explain step by step, so if you don’t understand, please ask me right away.

The conclusion is that I would like to do the preprocessing individually and then train the Model at once in the pth and npy files without reading the class, init, or file.

1, “bins_per_octave”: I want to change the 8 part to 32 and clean it up.

2,There is not enough memory. (This is an image.)

3,I can’t clear the cache for some reason. (I don’t know)

4、Let’s save this image as npy,pth and try to train it.

5、So I saved it, and when I tried to train it, I got this error.

Preliminaries

Here is the model. =>The model is here.

1、The data is the class traindataset

====================================================

Dataset

====================================================

class TrainDataset(Dataset):

def init(self, df, transform=None):

self.df = df

self.file_names = df[‘file_path’].values

self.labels = df[CFG.target_col].values

self.wave_transform = CQT1992v2(**CFG.qtransform_params)

self.transform = transform

def __len__(self):

return len(self.df)

def apply_qtransform(self, waves, transform):

waves = np.hstack(waves)

waves = waves / np.max(waves)

waves = torch.from_numpy(waves).float()

image = transform(waves)

return image

def __getitem__(self, idx):

file_path = self.file_names[idx].

waves = np.load(file_path)

image = self.apply_qtransform(waves, self.wave_transform)

image = image.squeeze().numpy()

if self.transform:

image = self.transform(image=image)['image'].

label = torch.tensor(self.labels[idx]).float()

return image, label

These are the return values of image and label of

I saved both of these in validation and train.

In the second example, we have

Loaded.

def get_path():

valid_image = np.load(‘…/input/train-bandpass/test_bandpass.npy’)

train_image = np.load(‘…/input/train-bandpass/train_bandpass.npy’)

valid_label = torch.load(‘…/input/train-bandpass/test_bandpass.pth’)

train_label = torch.load(‘…/input/train-bandpass/train_f_bandpass.pth’)

return train_image, train_label

def get_path_va():

valid_image = np.load(‘…/input/train-bandpass/test_bandpass.npy’)

train_image = np.load(‘…/input/train-bandpass/train_bandpass.npy’)

valid_label = torch.load(‘…/input/train-bandpass/test_bandpass.pth’)

train_label = torch.load(‘…/input/train-bandpass/train_f_bandpass.pth’)

train_dataset = TrainDataset(train_folds, transform=get_transforms(data='train'))

valid_dataset = TrainDataset(valid_folds, transform=get_transforms(data='train'))

#This is the individual part!

train_dataset=get_path()

valid_dataset=get_path_va()

train_loader = DataLoader(train_dataset,

batch_size=CFG.batch_size,

shuffle=True,

num_workers=CFG.num_workers, pin_memory=True, drop_last=True)

valid_loader = DataLoader(valid_dataset,

batch_size=CFG.batch_size * 2,

shuffle=False,

num_workers=CFG.num_workers, pin_memory=True, drop_last=False)

3, I got an error. (The question is. ;.

4,I would like to know how I can read this.

Normally, I would do class and init, read the whole file, and do the preprocessing there, but it became too heavy, and the preprocessing alone took about 10 hours, so I want to do it separately.

If we can do this, we can save the human race.

Please help me.

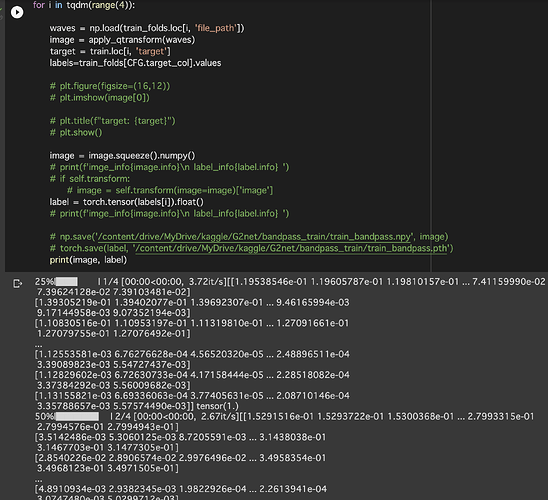

5、The way to save, image, and label is like this.