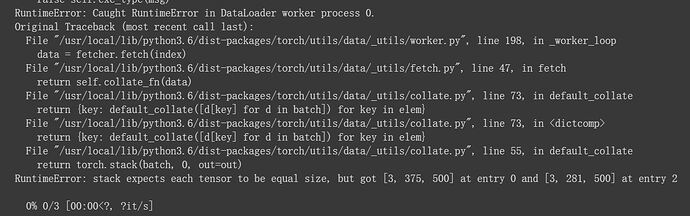

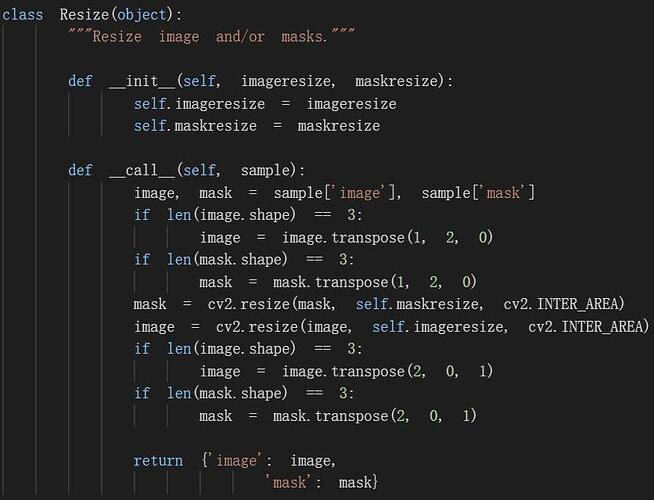

I am training the model on part of the VOC dataset, where the images have different heights and widths, and I want every image to be 320*480. The resize step does not seem to work. How should I edit it to make it work?

Is it possible to post your entire code? I don't think the error is in the block of code you posted.

Your resize code works just fine for me.

What exactly are you feeding into your network?

Are you feeding in both the mask and the image?

Hi. I think this error is due to differently sized images in the input. Make sure to resize both the images and the masks to a common size using the Resize() transform before training the model.