Why not just load the entire sequence (or the longest distance you can have attn) at once?

Yeah! I get your point now. I should load them once instead of save them before. Seq-to-seq model with attention tutorial also helps.

Thanks very much !

HI

I’ve got a strange problem

When I run a python script, even i use the default value for retain_graph,it goes well without reporting any problem,here is my code

for i in range(epoch):

h = torch.mm(X, w1)

h_relu = h.clamp(min=0)

y_pred = torch.mm(h_relu, w2)

loss = (y_pred - y).pow(2).sum()

if i % 10000 == 0:

print('epoch: {} loss: {}'.format(i, loss.data))

loss.backward()

........

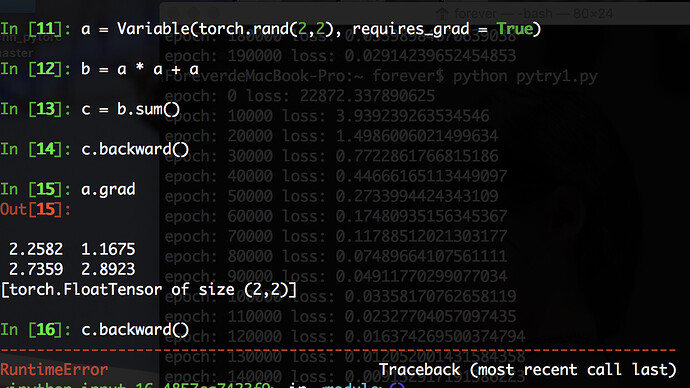

but in command line, i must set retain_graph to be True or there will be the error concernd by Peterd

But why?I think i need to set retain_graph to be True everywhere

So no you should not set retain_graph everywhere. If this error is not raised, that means that everything is fine. If it is raised, that means that you did something wrong in your code.

The difference I guess is in the way you define your Variables in the script or in command line.

So here the bug in your code is that you try to backprop part of the graph twice. So if you actually want to backprop part of the graph twice, then the first time you call .backward() you should set retain_graph=True. If you were not expecting to backprop part of the graph twice, then that means that your implementation is doing something wrong somewhere because it is actually doing it in practice.

@albanD

Really sorry for my ambiguous expression:sweat_smile:

I wonder that why neither in python script and command line nor i set retain_graph to be True

and got different results,the script run as i expect while in the command i find such a error?

What is the difference between the two?In the opnion of mine, they should act the same:thinking:

Is that for in every iteration in the loop i got a new graph?So no matter what value the retain_graph is, the script will always act well?

In pytorch, every time you perform a computation with Variables, your create a graph, then if you call backward on the last Variable, it will traverse this graph to compute the gradients for everything in it (and delete the graph as it goes through it if retain_graph=False). So in your command line, you created a single graph, trying to backprop twice through it (withour retain_graph) so it will fail.

If now inside your forward loop you do redo the forward computation, then the Variable on which you call backward is not the same, and the graph attached to it is not the same as the previous iteration one. So no error here.

The common mistake (the would raise the mentioned error while you’re not supposed to share graph) that can happen is that you perform some computation just before the loop, and so even though you create new graphs in the loop, they share a common part out of the loop like below:

a = torch.rand(3,3)

# This will be share by both iterations and will make the second backward fail !

b = a * a

for i in range(10):

d = b * b

# The first here will work but the second will not !

d.backward()

Thanks a lot!

Perfect answer!

Wish u a good day!

I’m getting this same issue and cant quite figure out where the re-use of an already cleared variable could be, am I missing something obvious?

code:

def train(epoch, model, train_loader, optimizer, criterion, summary, use_gpu=False, log_interval=10):

correct = 0

total = 0

for idx, (x, y) in enumerate(train_loader):

y = y.squeeze(1)

x, y = Variable(x), Variable(y)

x = x.cuda() if use_gpu else x

y = y.cuda() if use_gpu else y

preds = model(x)

loss = criterion(preds, y)

loss.backward(retain_graph=True)

optimizer.step()

optimizer.zero_grad()

# TODO: turn this part into callbacks

if idx % log_interval == 0:

# Log loss

index = (epoch * len(train_loader)) + idx + 1

avg_loss = loss.data.mean()

summary.add_scalar('train/loss', avg_loss, index)

# Log accuracy

total += len(x)

pred_classes = torch.max(preds.data, 1)[1]

correct += (pred_classes == y.data).sum()

acc = correct / total

summary.add_scalar('train/acc', acc, index)

Without retain_graph=True I get the same exception as above

Very elegant and interesting example. So if I want to backward more than once without retain_graph, I need to redo the computation from all leaves?

Yes, because what a backward without retrain graph is basically a “backward in which you delete the graph as you go along”.

What are the ’ intermediary results ’ exactly?

This process is giving me vertigo. What exactly happens that creates and then deletes the ‘results’?

Intermediary results are values from the forward pass that are needed to compute the backward pass.

For example, if your forward pass looks like this and you want gradients for the weights.

middle_result = first_part_of_net(inp)

out = middle_result * weights

When computing the gradients, you need the value of middle_result. And so it needs to be stored during the forward pass. This is what I call intermediary results.

These intermediary results are created whenever you perform operations that require some of the forward tensors to compute their backward pass.

To reduce memory usage, during the backward pass, these are deleted as soon as they are not needed anymore (of course if you use this Tensor somewhere else in your code you will still have access to it, but it won’t be stored by the autograd engine anymore).

Thank you.

So this .backward() method is called behind the scenes in Pytorch somewhere inside when the optim.Adam method is run?

I’ve seen a few examples of neural networks with Pytorch but I don’t get where the weights are.

You can check the tutorials on how to train a neural network and what each function is doing.

I am not sure how the correlation between retain_graph=True and zero_grad() works. Have a look at this:

(code is adapted from the answer and might not be 100% correct, but I hope you get what I mean)

prediction = self(x)

self.zero_grad()

loss = self.loss(prediction, y)

loss.backward(retain_graph=True) #retains weights --> gradients?

loss.backward() ## add gradients to gradients? makes them a lot stronger?

vs:

loss.backward(retain_graph=True) #retains weights? --> gradients

self.zero_grad()

loss.backward() ## gradients are zero, how is retain_graph=True effective in this case?

or is retain_graph just keeping the weights rather than the gradients? I am a bit confused.Hi,

retain_graph has nothing to do with gradients. It just allows you to call backward a second time. If you don’t set it in the first .backward() call, you couldn’t call backward a second time.

Are you sure you get an error if you don’t use retain_graph = True?

This seems to be the normal protocol of running a model inside iterations, as the model creates a new graph every time for calculating preds.

If you could be more specific about the problem you have faced, it would be very good for me.

Thanks