    RuntimeError:
    undefined value hidden_t:
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/encoders/image_encoder.py", line 143
            out = torch.cat(all_outputs, 0)
            return hidden_t, out, lengths
                   ~~~~~~~~ <--- HERE

I get this error when I try to convert a PyTorch model to TorchScript.

Full error message:

    Traceback (most recent call last):
      File "translate.py", line 22, in <module>
        print("my_module(imgr) :",my_module(imgr))
      File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
        result = self.forward(*input, **kwargs)
      File "translate.py", line 18, in forward
        result = main(imgr)
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/bin/translate.py", line 74, in main
        return translate(opt, imgr)
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/bin/translate.py", line 18, in translate
        translator = build_translator(opt, logger=logger, report_score=True)
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/translate/translator.py", line 29, in build_translator
        fields, model, model_opt = load_test_model(opt)
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/model_builder.py", line 112, in load_test_model
        model = build_base_model(model_opt, fields, use_gpu(opt), checkpoint,
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/model_builder.py", line 184, in build_base_model
        sm = torch.jit.script(model)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_script.py", line 942, in script
        return torch.jit._recursive.create_script_module(
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py", line 391, in create_script_module
        return create_script_module_impl(nn_module, concrete_type, stubs_fn)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py", line 448, in create_script_module_impl
        script_module = torch.jit.RecursiveScriptModule._construct(cpp_module, init_fn)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_script.py", line 391, in _construct
        init_fn(script_module)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py", line 428, in init_fn
        scripted = create_script_module_impl(orig_value, sub_concrete_type, stubs_fn)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py", line 452, in create_script_module_impl
        create_methods_and_properties_from_stubs(concrete_type, method_stubs, property_stubs)
      File "/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py", line 335, in create_methods_and_properties_from_stubs
        concrete_type._create_methods_and_properties(property_defs, property_rcbs, method_defs, method_rcbs, method_defaults)
    RuntimeError:
    undefined value hidden_t:
      File "/workspace/OpenNMT-py-1.2.0_torchscript_testfuncwork/onmt/encoders/image_encoder.py", line 143
            out = torch.cat(all_outputs, 0)
            return hidden_t, out, lengths
                   ~~~~~~~~ <--- HERE

What I tried to convert:

model.py

    """ Onmt NMT Model base class definition """
    import torch.nn as nn
    #import os


    class NMTModel(nn.Module):
        """
        full_path = os.path.realpath(__file__)
        path, filename = os.path.split(full_path)
        print(path + ' → ' + filename + " NMTModel" + "\n")
        """
        """
        Core trainable object in OpenNMT. Implements a trainable interface
        for a simple, generic encoder + decoder model.

        Args:
          encoder (onmt.encoders.EncoderBase): an encoder object
          decoder (onmt.decoders.DecoderBase): a decoder object
        """

        def __init__(self, encoder, decoder):
            super(NMTModel, self).__init__()
            self.encoder = encoder
            self.decoder = decoder

        def forward(self, src, tgt, lengths, bptt=False, with_align=False):
            """Forward propagate a `src` and `tgt` pair for training.
            Possible initialized with a beginning decoder state.

            Args:
                src (Tensor): A source sequence passed to encoder.
                    typically for inputs this will be a padded `LongTensor`
                    of size ``(len, batch, features)``. However, may be an
                    image or other generic input depending on encoder.
                tgt (LongTensor): A target sequence passed to decoder.
                    Size ``(tgt_len, batch, features)``.
                lengths(LongTensor): The src lengths, pre-padding ``(batch,)``.
                bptt (Boolean): A flag indicating if truncated bptt is set.
                    If reset then init_state
                with_align (Boolean): A flag indicating whether output alignment,
                    Only valid for transformer decoder.

            Returns:
                (FloatTensor, dict[str, FloatTensor]):

                * decoder output ``(tgt_len, batch, hidden)``
                * dictionary attention dists of ``(tgt_len, batch, src_len)``
            """
            print("model.py")
            dec_in = tgt[:-1]  # exclude last target from inputs

            enc_state, memory_bank, lengths = self.encoder(src, lengths)
            if bptt is False:
                self.decoder.init_state(src, memory_bank, enc_state)
            dec_out, attns = self.decoder(dec_in, memory_bank,
                                          memory_lengths=lengths,
                                          with_align=with_align)
            return dec_out, attns

        def update_dropout(self, dropout):
            self.encoder.update_dropout(dropout)
            self.decoder.update_dropout(dropout)

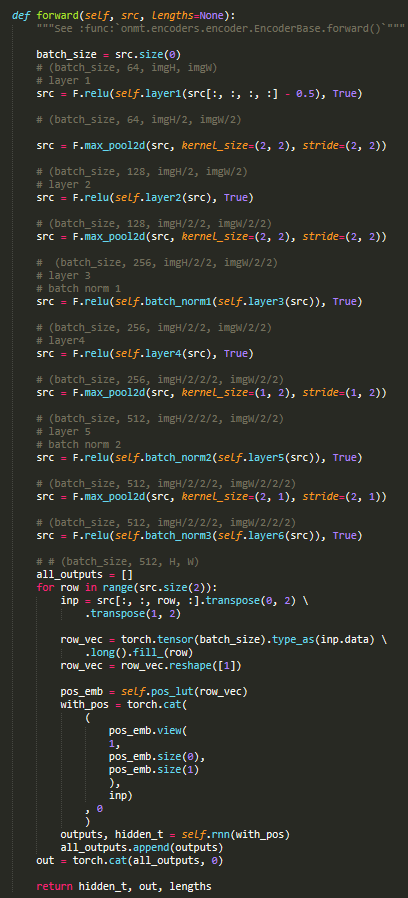

The screenshot above shows where I get the undefined value error, in image_encoder.py.
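For reference, here is a minimal sketch of what looks like the same class of error. It assumes that `hidden_t` is only ever assigned inside a `for` loop in the encoder's `forward`, which the traceback alone does not confirm. The function names and tensors below are hypothetical and are not taken from image_encoder.py. As far as I understand, TorchScript cannot prove that a loop body runs at least once, so a name first assigned inside the loop is treated as undefined after it. Giving the name a value of the same type before the loop lets the function compile.

```python
import torch
from typing import List


def last_hidden_broken(rows: List[torch.Tensor]) -> torch.Tensor:
    # hidden_t is first assigned inside the loop body, so the TorchScript
    # compiler treats it as undefined once the loop has ended.
    for row in rows:
        hidden_t = row * 2
    return hidden_t


def last_hidden_fixed(rows: List[torch.Tensor]) -> torch.Tensor:
    # Placeholder assigned before the loop; it must have the same type
    # (here: Tensor) as the value assigned inside the loop.
    hidden_t = torch.zeros(1)
    for row in rows:
        hidden_t = row * 2
    return hidden_t


# Scripting the broken version is expected to fail at compile time.
try:
    torch.jit.script(last_hidden_broken)
    broken_error = ""
except RuntimeError as err:
    broken_error = str(err)

# The fixed version compiles and returns the value from the last iteration.
scripted_fixed = torch.jit.script(last_hidden_fixed)
result = scripted_fixed([torch.ones(2), torch.full((2,), 3.0)])
```

If the real encoder follows this pattern, the placeholder would have to match whatever the RNN call returns there (for example a tuple of tensors rather than a single tensor), so treat the `torch.zeros(1)` above as an illustration only.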

Can anyone help?