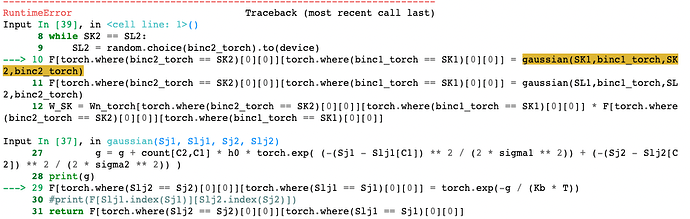

`RuntimeError: dst.nbytes() >= (dst.storage_offset() * dst.element_size()) INTERNAL ASSERT FAILED at "/Users/runner/work/pytorch/pytorch/pytorch/aten/src/ATen/native/mps/operations/Copy.mm":130, please report a bug to PyTorch.`

Dear Developers,

I got the error above. Could you explain why it happens and how I can fix it?

Best,

eqy

December 7, 2022, 11:04pm


In my experience, the MPS backend is still relatively new and has some bugs that need to be worked out. Could you send a small code snippet that reproduces this issue on your end?
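Before trimming the code down, a quick first check is whether the error is specific to MPS at all. The sketch below uses a hypothetical helper, `compare_devices`, that runs the same function on CPU tensors and then, if `torch.backends.mps.is_available()` reports MPS, on copies moved to `"mps"`, and compares the outputs. If the MPS run raises the internal assert while the CPU run succeeds, that points at the backend rather than the model code, and running on CPU is a possible stopgap.

```python
import torch


def compare_devices(fn, *cpu_args, atol=1e-5):
    """Run `fn` on CPU tensors, then on MPS copies of them if MPS is available.

    Returns (cpu_result, mps_result), where mps_result is None without MPS.
    Any exception raised on MPS (e.g. an INTERNAL ASSERT) propagates to the caller.
    """
    cpu_out = fn(*cpu_args)
    if not torch.backends.mps.is_available():
        print("MPS not available; ran on CPU only")
        return cpu_out, None
    mps_args = [a.to("mps") for a in cpu_args]
    mps_out = fn(*mps_args).cpu()  # bring the result back to CPU for comparison
    if not torch.allclose(cpu_out, mps_out, atol=atol):
        print("CPU and MPS results differ")
    return cpu_out, mps_out


# Example: an elementwise Gaussian, similar in spirit to the code in this thread.
cpu_out, mps_out = compare_devices(lambda x: torch.exp(-x ** 2), torch.linspace(-1, 1, 5))
print(cpu_out)
```

Wrapping the suspect function in a `lambda` keeps the helper generic; any function that takes and returns tensors can be passed in.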

Sure, here is the code:

And here is the Gaussian function I defined:

```python
import torch

# `count` (2-D tensor, rows indexed by the Slj2 grid, columns by the Slj1 grid)
# and `F` (the output grid) are globals defined elsewhere in the script.


def gaussian(Sj1, Slj1, Sj2, Slj2):
    sigma1 = torch.tensor(0.5)
    sigma2 = torch.tensor(0.5)
    Kb = torch.tensor(0.001987204)
    T = torch.tensor(300)
    h0 = torch.tensor(0.0001)
    g = torch.tensor(0.0)

    # Position of the first grid point equal to Sj1 / Sj2
    # (one-argument torch.where returns a tuple of index tensors).
    idx1 = torch.where(Slj1 == Sj1)[0][0].item()
    idx2 = torch.where(Slj2 == Sj2)[0][0].item()

    # Sum Gaussians over an 11 x 11 window centred on (idx2, idx1);
    # indices wrap around the 1000-point grid.
    for i in range(idx2 - 5, idx2 + 6):
        C2 = i % 1000
        for j in range(idx1 - 5, idx1 + 6):
            C1 = j % 1000
            g = g + count[C2, C1] * h0 * torch.exp(
                -(Sj1 - Slj1[C1]) ** 2 / (2 * sigma1 ** 2)
                - (Sj2 - Slj2[C2]) ** 2 / (2 * sigma2 ** 2)
            )

    # Single indexing operation instead of chained F[idx2][idx1].
    F[idx2, idx1] = torch.exp(-g / (Kb * T))
    # print(F[idx2, idx1])
    return F[idx2, idx1]
```
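The double loop evaluates an 11 x 11 window of Gaussians one element at a time, which issues many tiny indexed reads on the device. A vectorized sketch of the same sum is below. It is an illustration, not the poster's code, and rests on assumptions: `gaussian_window_sum` is a hypothetical name, `count` is assumed to have shape `(len(Slj2), len(Slj1))` with rows indexed by the `Slj2` grid, `idx1`/`idx2` are the integer positions of `Sj1`/`Sj2` in their grids, and the window is centred on those positions in both directions, wrapping around the grid as the loops do. It returns `g`; the caller would still compute `torch.exp(-g / (Kb * T))`.

```python
import torch


def gaussian_window_sum(count, grid1, grid2, idx1, idx2,
                        h0=1e-4, sigma1=0.5, sigma2=0.5, half_width=5):
    """Hypothetical vectorized form of the windowed Gaussian sum."""
    n1, n2 = grid1.numel(), grid2.numel()
    offsets = torch.arange(-half_width, half_width + 1, device=count.device)
    c1 = (idx1 + offsets) % n1  # wrapped column indices (grid1)
    c2 = (idx2 + offsets) % n2  # wrapped row indices (grid2)

    # Exponent terms for each axis, shape (W,) with W = 2 * half_width + 1.
    d1 = -(grid1[idx1] - grid1[c1]) ** 2 / (2 * sigma1 ** 2)
    d2 = -(grid2[idx2] - grid2[c2]) ** 2 / (2 * sigma2 ** 2)

    window = count[c2][:, c1]  # (W, W): rows from grid2, columns from grid1
    # Broadcast d2 down rows and d1 across columns, then sum everything.
    return (window * h0 * torch.exp(d2[:, None] + d1[None, :])).sum()
```

Gathering the whole window with advanced indexing replaces 121 separate scalar reads with a few tensor operations, which may also sidestep whichever per-element operation is tripping the MPS backend.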

J_Johnson

December 8, 2022, 4:00pm


Seems to me like you might not be using torch.where correctly. It needs 2 more parameters, x and y. See the docs here:

https://pytorch.org/docs/stable/generated/torch.where.html

Oh, I really thought torch.where was the same as np.where.

J_Johnson

December 8, 2022, 4:23pm


Corrected. I see that with a single argument it can be used like torch.nonzero(condition, as_tuple=True).
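The PyTorch documentation states that one-argument `torch.where(condition)` is identical to `torch.nonzero(condition, as_tuple=True)`, which mirrors one-argument `np.where`. The three-argument form `torch.where(condition, x, y)` instead selects elementwise between `x` and `y`. A small check of both forms:

```python
import numpy as np
import torch

cond = torch.tensor([[True, False], [False, True]])

# One-argument form: a tuple of index tensors, one per dimension.
rows, cols = torch.where(cond)
nz_rows, nz_cols = torch.nonzero(cond, as_tuple=True)

# NumPy's one-argument np.where behaves the same way.
np_rows, np_cols = np.where(cond.numpy())

# Three-argument form: take x where cond is True, y elsewhere.
x = torch.ones(2, 2)
y = torch.zeros(2, 2)
picked = torch.where(cond, x, y)

print(rows, cols)  # indices of the True entries
print(picked)
```

Because the one-argument form and `torch.nonzero(..., as_tuple=True)` are the same operation, swapping one for the other would not be expected to change an MPS failure.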

J_Johnson

December 8, 2022, 4:25pm


This should probably be raised on the GitHub page with a minimal example (i.e., remove as much code as you can while still reproducing the error).
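A minimal reproducer might look like the sketch below. The thread does not establish which line fails; as an assumption, since the assert sits in the MPS copy path (`Copy.mm`) and checks `storage_offset()`, the indexed write into `F` is a reasonable first suspect. `F`, `row`, `col` and `value` are hypothetical stand-ins. The script falls back to CPU when MPS is absent, so the same file also serves as a control, and the output of `python -m torch.utils.collect_env` is worth attaching to the issue.

```python
import torch

# Use MPS when available; otherwise run on CPU as a control.
device = "mps" if torch.backends.mps.is_available() else "cpu"
print("torch", torch.__version__, "device", device)

# Hypothetical stand-ins for the thread's F and its row/column indices.
F = torch.zeros(1000, 1000, device=device)
row, col = 3, 7
value = torch.tensor(0.5, device=device)

# Chained indexing: F[row] is a view with a non-zero storage offset,
# and the assignment then copies `value` into an element of that view.
F[row][col] = value

# The same write as a single indexing operation, for comparison.
G = torch.zeros(1000, 1000, device=device)
G[row, col] = value

print("match:", torch.equal(F.cpu(), G.cpu()))
```

If the chained form fails on MPS while the single-index form succeeds, writing `F[idx2, idx1] = ...` in the original function would be a workaround worth trying.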

Hmm, I still get the same error after changing torch.where to torch.nonzero.

J_Johnson

December 8, 2022, 5:15pm


Yes, I meant I stood corrected.