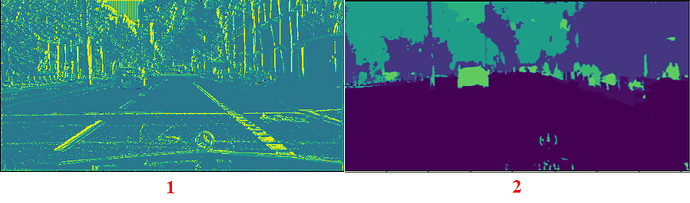

Hey guys, I am using this repo: hamdaan19/UNet-Multiclass on GitHub, which contains code used to train U-Net on a multi-class segmentation dataset.

The evaluate function in this repo produces the second image, which is what I want.

But I need a real-time function, so I wrote this:

model = UNET(in_channels=3, classes=19)

model.state_dict(destination=torch.load("D:/ide/Saves/RedHorse/env/HorseNet/NN/Static/NNmodels/2_Segmentation_Model_Segmentation_model.pth", map_location="cpu"))

model.eval()

IMAGE_HEIGHT, IMAGE_WIDTH = 300, 600

x = cv2.imread("D:/ide/Saves/RedHorse/env/HorseNet/NN/extracted_data/Cityspaces.zip/images/val/frankfurt/frankfurt_000000_015389_leftImg8bit.png")

x = cv2.resize(x, (IMAGE_WIDTH,IMAGE_HEIGHT))

x = cv2.cvtColor(x, cv2.COLOR_BGR2RGB)

x = torch.from_numpy(x).float()

x = x.permute(2,0,1)

x = x.unsqueeze(0)

with torch.no_grad():

    y = model(x)

y = torch.nn.functional.softmax(y, dim=1)

y = torch.argmax(y, dim=1).float()

y.apply_(lambda x: t2l[x].id)

y = y.numpy()

y = y.transpose(1,2,0)

plt.imshow(y)

plt.show()

cv2.imwrite("D:/Downloads/UNet-Multiclass/saved_images/.png",y)

The output of this code is the first image. I really don't understand whether this is a problem or not. If it isn't, what should I do to get outputs like the second one?
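One thing that may be worth checking is the weight-loading line. As far as I understand, `model.state_dict(destination=...)` writes the model's current parameters into the dictionary passed in, so it does not copy saved weights into the model, whereas `model.load_state_dict(...)` does. Below is a minimal sketch of the difference. The tiny `nn.Conv2d` is a hypothetical stand-in for `UNET`, and an in-memory state dict plays the role of `torch.load(...)`; neither comes from the repo.

```python
import torch
from torch import nn

torch.manual_seed(0)

def make_model():
    # Hypothetical stand-in for UNET(in_channels=3, classes=19), kept tiny on purpose.
    return nn.Conv2d(3, 19, kernel_size=1)

trained = make_model()  # pretend this model holds the trained weights

# Pattern from the code above: state_dict(destination=...)
fresh_a = make_model()
before = fresh_a.weight.detach().clone()
saved = trained.state_dict()           # plays the role of torch.load(...)
fresh_a.state_dict(destination=saved)  # fills `saved` with fresh_a's own weights
unchanged = torch.equal(fresh_a.weight, before)          # model was not modified
still_random = not torch.equal(fresh_a.weight, trained.weight)

# Alternative: load_state_dict copies the saved tensors into the model
fresh_b = make_model()
fresh_b.load_state_dict(trained.state_dict())
loaded = torch.equal(fresh_b.weight, trained.weight)

print(unchanged, still_random, loaded)
```

If this is what is happening here, the network is still running with its random initial weights. Two further assumptions to verify: the `.pth` file may be a checkpoint dictionary that wraps the state dict under a key instead of being the state dict itself, and the input preprocessing (scaling, normalization) should match whatever the training dataset applied, since the code above feeds raw 0-255 pixel values.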