It is here: https://github.com/AlexLuya/DSC-AI/blob/master/product/b_scan_usg/BSCANUSGClassifierTrainer.py

I think there is a mix of problems:

- train and eval modes are in the same for-loop

- dropout is used in the model

- the dropout rate is 0.0, but the outputs still differ

I think you may need to rule out these possibilities one at a time.
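One way to rule these out one by one is to push the same batch through the model in both modes and compare. The snippet below is only a rough sketch: the small `nn.Sequential` model and the `forward_in_mode` helper are made up for illustration and are not taken from the linked trainer. It sets dropout to `p=0.0`, so any remaining train/eval difference has to come from another mode-dependent layer, here BatchNorm.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in model, NOT the one from the linked repository.
model = nn.Sequential(
    nn.Linear(8, 16),
    nn.BatchNorm1d(16),   # uses batch statistics in train mode, running statistics in eval mode
    nn.ReLU(),
    nn.Dropout(p=0.0),    # p=0.0 makes dropout a no-op, which isolates BatchNorm
    nn.Linear(16, 2),
)
x = torch.randn(4, 8)


def forward_in_mode(model, x, train):
    """Run one forward pass in the requested mode without tracking gradients."""
    model.train(train)
    with torch.no_grad():
        # Note: a train-mode pass still updates BatchNorm running statistics.
        return model(x)


out_train = forward_in_mode(model, x, train=True)
out_eval = forward_in_mode(model, x, train=False)
out_eval_again = forward_in_mode(model, x, train=False)

# Layers whose behaviour depends on train/eval mode.
mode_dependent = [
    name for name, module in model.named_modules()
    if isinstance(module, (nn.Dropout, nn.BatchNorm1d))
]

print("mode-dependent layers:", mode_dependent)
print("train vs eval max abs diff:", (out_train - out_eval).abs().max().item())
print("eval is deterministic:", torch.equal(out_eval, out_eval_again))
```

If the difference disappears once a given layer is removed or frozen, that layer is the likely cause.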

@AlexLuya, were you able to figure out your problem? I’m facing a very similar scenario: .eval() and .train() produce two very different outputs for the same input data, so the corresponding losses are quite different as well.

Hi @mhajiaghayi @AlexLuya, I am having exactly the same issue. I am using MNASNet from the torchvision models, and the logic of my code is like this:

    for epoch in range(num_epochs):
        model.train()
        train_one_epoch(model, ...)  # one epoch of training is finished; now evaluate the model on the validation set
        model.eval()
        evaluate(model...)

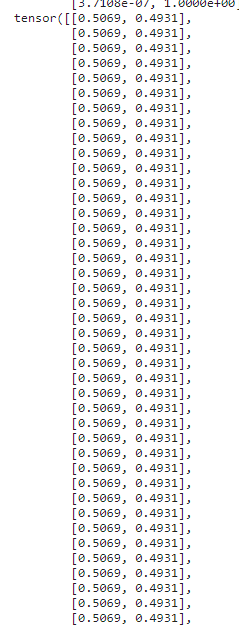

When model.eval() is there, I get the same output for every single image (see fig. 1).

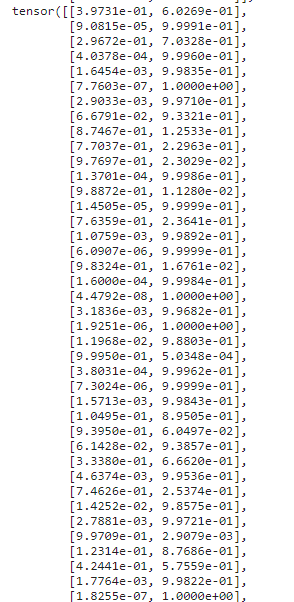

When model.eval() is commented out and all other lines of code stay unchanged, the output differs from one input image to the next (see fig. 2).

fig. 1 model.eval() is uncommented

fig. 2 model.eval() is commented

I am not sure whether something is wrong with my code or there is an underlying bug. Does anyone have any idea about this?

Thanks!

I found the problem in my program. I had added a softmax before feeding the raw output to CrossEntropyLoss, which already applies log-softmax internally, so the softmax was effectively applied twice. Sorry, my bad.
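To see why the extra softmax hurts, here is a small pure-Python sketch of the arithmetic (no PyTorch needed). The function names and the example logits are made up for illustration; `cross_entropy_from_logits` mimics, for a single sample, what CrossEntropyLoss computes from raw logits. Once the inputs are squashed into [0, 1], the loss cannot get close to zero even for a confident, correct prediction.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, target):
    """Single-sample cross entropy: -log(softmax(logits)[target])."""
    return -math.log(softmax(logits)[target])


# Illustrative values: a confident and correct prediction for class 0.
logits = [8.0, -2.0, -3.0]
target = 0

# Correct usage: the loss receives raw logits.
loss_correct = cross_entropy_from_logits(logits, target)

# The mistake: softmax is applied first, then the loss applies it again.
loss_double = cross_entropy_from_logits(softmax(logits), target)

# Lowest loss reachable for 3 classes when the inputs are probabilities.
floor = cross_entropy_from_logits([1.0, 0.0, 0.0], target)

print(f"loss from raw logits:     {loss_correct:.6f}")
print(f"loss with double softmax: {loss_double:.6f}")
print(f"floor with double softmax: {floor:.6f}")
```

With the double softmax the loss stays above roughly 0.55 for three classes no matter how good the prediction is, and the gradients are correspondingly weak, which can make training look stuck.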

I have noticed a similar issue as well. However, if you train for more epochs, the output during evaluation will converge to the output during training.

This is dropout working as designed. The whole idea behind dropout is that you won’t get exactly the same output for the same input during training and evaluation. In the first epochs of training the difference will be large, but in later epochs the model has been trained to be resilient, so that when different units in the model are randomly turned off, the output won’t change much.
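For anyone who wants to see the mechanism, here is a toy pure-Python version of inverted dropout, the scheme nn.Dropout uses (zero each activation with probability p during training and scale the survivors by 1/(1-p); do nothing in eval). The `dropout` function and the numbers are only an illustration, not PyTorch's actual implementation.

```python
import random


def dropout(values, p, training, rng):
    """Toy inverted dropout on a list of activations.

    Training: zero each value with probability p, scale survivors by 1/(1-p).
    Eval: return the values unchanged.
    """
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


rng = random.Random(0)
activations = [1.0] * 10000

# Two training-mode passes over the same input use different random masks.
train_a = dropout(activations, 0.5, True, rng)
train_b = dropout(activations, 0.5, True, rng)

# Eval mode is the identity, so it is deterministic.
eval_out = dropout(activations, 0.5, False, rng)

# The 1/(1-p) scaling keeps the expected activation the same as in eval mode.
mean_train = sum(train_a) / len(train_a)

print("train passes identical:", train_a == train_b)
print("eval equals input:", eval_out == activations)
print("mean activation in train mode:", mean_train)
```

The individual outputs differ between train and eval, but the expected value is the same, which is why the two tend to agree more closely once the network has learned not to rely on any single unit.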