The model gives slightly different results on different platforms. It was trained on a GPU and saved while on CUDA (i.e. after calling `.cuda()`).

Is there a way to make the results exactly the same?

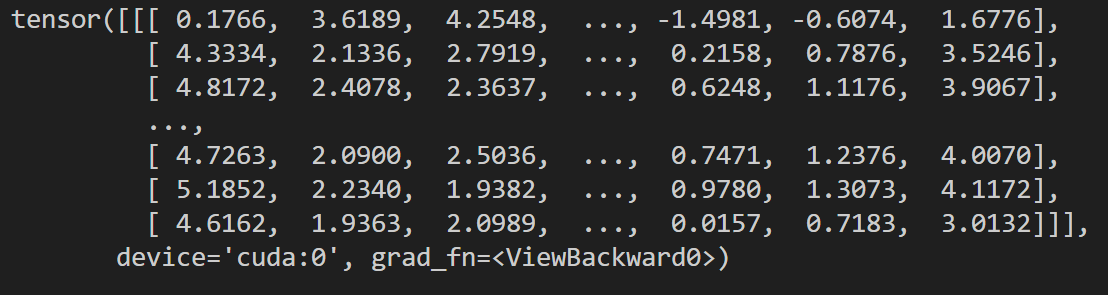

With torch 1.13.1+cu116 on a Tesla A100

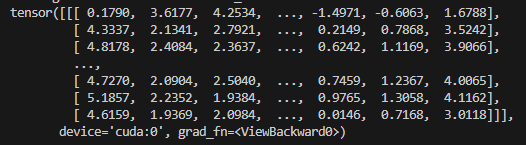

With torch 1.13.1+cu116 on a GTX 1060

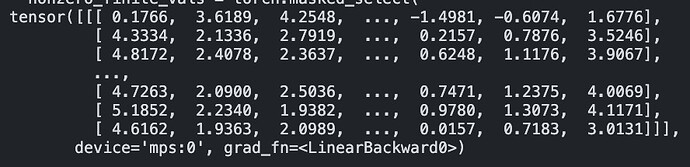

With torch 2.1.2 on macOS (MPS)

Here is the test script:

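```python
import torch

def test_model_consistency(self):  # method of a unittest.TestCase subclass
    # Pick the best available backend: CUDA, then Apple MPS, then CPU.
    if torch.cuda.is_available():
        device = torch.device("cuda")
    elif torch.backends.mps.is_available():
        device = torch.device("mps")
    else:
        device = torch.device("cpu")

    model_path = "best.pth"
    # The checkpoint was saved from a CUDA model; map_location moves its
    # tensors onto whichever device is in use (it also works on CUDA machines).
    model = torch.load(model_path, map_location=device).to(device)

    # Feed a fixed all-zeros input of shape (1, 128, 6) and print the result.
    output = model(torch.zeros(1, 128, 6).to(device))
    print(output)
```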

As the screenshots above show, the printed outputs differ slightly from platform to platform, but I need them to be exactly identical. Is there any way to achieve that?
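One plausible source of such small discrepancies (an assumption, not something established in the post) is that different backends and GPU architectures may accumulate sums in different orders, and floating-point addition is not associative. The minimal pure-Python sketch below uses ordinary doubles rather than the model's float32 tensors, but the rounding effect is the same in kind: two summation orders give results that are not bit-identical yet agree within a small tolerance.

```python
import math

# Floating-point addition is not associative: the same three numbers summed
# in a different order can round to a different result.
a, b, c = 0.1, 0.2, 0.3

left_to_right = (a + b) + c   # one possible reduction order
right_to_left = a + (b + c)   # another possible reduction order

print(left_to_right)                   # 0.6000000000000001
print(right_to_left)                   # 0.6
print(left_to_right == right_to_left)  # False

# The results differ only in the last bits, so a tolerance-based check passes.
print(math.isclose(left_to_right, right_to_left, rel_tol=1e-9))  # True
```

For tensors, `torch.allclose` or `torch.testing.assert_close` perform the same kind of tolerance-based comparison. PyTorch's own reproducibility notes state that completely reproducible results are not guaranteed across PyTorch releases or different platforms, so bit-exact agreement between an A100, a GTX 1060, and MPS may not be attainable.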