The following is the code I am working on:

import numpy as np
import torch
import torch.nn as nn
from torch import optim
from torch.optim import lr_scheduler

def train_epoch(train_loader, model, loss_fn, optimizer, cuda, log_interval, metrics, step=None):
    for metric in metrics:
        metric.reset()

    model.train()
    losses = []
    total_loss = 0

    for batch_idx, ((x0, x1), y) in enumerate(train_loader):
        y_true = y
        x0, x1, y_true = x0.cuda(), x1.cuda(), y.cuda()

        optimizer.zero_grad()
        output1, output2 = model(x0, x1)

        #p_dist = torch.nn.PairwiseDistance(keepdim=True)
        p_dist = torch.nn.CosineSimilarity(dim=1, eps=1e-08)
        dy = p_dist(output1, output2)
        dy = torch.nan_to_num(dy)
        y_true = torch.nan_to_num(y_true)

        # Normalize dy and y_true by dividing each by its maximum value in the batch
        maximum_dy = torch.max(dy)
        maximum_dy = torch.nan_to_num(maximum_dy)
        dy = dy / maximum_dy
        maximum_y_true = torch.max(y_true)
        maximum_y_true = torch.nan_to_num(maximum_y_true)
        y_true = y_true / maximum_y_true

        #dy = torch.squeeze(dy, 1)
        # Two-column input: column 0 holds 1 - dy, column 1 holds dy
        input_dy = torch.empty(dy.size(0), 2)
        input_dy[:, 0] = 1 - dy
        input_dy[:, 1] = dy

        # One-hot encoding of the labels
        y_true_2 = torch.zeros(dy.size(0), 2)
        y_true_2[range(y_true_2.shape[0]), y_true.long()] = 1

        m = nn.Sigmoid()
        loss = loss_fn(m(input_dy), y_true_2)
        loss.backward()
        optimizer.step()

        losses.append(loss.item())
        total_loss += loss.item()

        input_dy_metric = torch.round(input_dy)
        for metric in metrics:
            metric(input_dy_metric, y_true_2)
            metric.total += y_true_2.shape[0]

        if batch_idx % log_interval == 0:
            message = 'Train: [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                batch_idx, len(train_loader),
                100. * batch_idx / len(train_loader), np.mean(losses))
            for metric in metrics:
                message += '\t{}: {}'.format(metric.name(), metric.value())
            losses = []

    total_loss /= (batch_idx + 1)
    return total_loss, metrics

def test_epoch(val_loader, model, loss_fn, cuda, metrics, log_interval):
    with torch.no_grad():
        for metric in metrics:
            metric.reset()

        model.eval()
        val_loss = 0
        losses = []

        for batch_idx, ((x0, x1), label) in enumerate(val_loader):
            x0, x1, y_true = x0.cuda(), x1.cuda(), label.cuda()
            output1, output2 = model(x0, x1)

            #p_dist = torch.nn.PairwiseDistance(keepdim=True)
            p_dist = torch.nn.CosineSimilarity(dim=1, eps=1e-08)
            dy = p_dist(output1, output2)
            dy = torch.nan_to_num(dy)
            y_true = torch.nan_to_num(y_true)

            # Normalize dy and y_true by dividing each by its maximum value in the batch
            maximum_dy = torch.max(dy)
            maximum_dy = torch.nan_to_num(maximum_dy)
            dy = dy / maximum_dy
            maximum_y_true = torch.max(y_true)
            maximum_y_true = torch.nan_to_num(maximum_y_true)
            y_true = y_true / maximum_y_true

            #dy = torch.squeeze(dy, 1)
            # The BCE loss receives an input tensor and a target tensor, both of dimension [4, 2]
            input_dy = torch.empty(dy.size(0), 2)
            input_dy[:, 0] = 1 - dy
            input_dy[:, 1] = dy
            y_true_2 = torch.zeros(dy.size(0), 2)
            y_true_2[range(y_true_2.shape[0]), y_true.long()] = 1

            m = nn.Sigmoid()
            loss = loss_fn(m(input_dy), y_true_2)
            losses.append(loss.item())
            val_loss += loss.item()

            input_dy_metric = torch.round(input_dy)
            for metric in metrics:
                metric(input_dy_metric, y_true_2)
                metric.total += y_true_2.shape[0]

            if batch_idx % log_interval == 0:
                message = 'Test: [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    batch_idx, len(val_loader),
                    100. * batch_idx / len(val_loader), np.mean(losses))
                for metric in metrics:
                    message += '\t{}: {}'.format(metric.name(), metric.value())
                #print(message)
                losses = []

        val_loss /= (batch_idx + 1)
    return val_loss, metrics
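To make the numbers reaching `loss_fn` easier to inspect, here is a minimal sketch of the same input/target construction in plain Python, so it runs without a GPU or PyTorch. It assumes the normalized similarity already lies in [0, 1] and that the labels are 0 or 1; `sigmoid`, `bce`, and `pair_loss` are hypothetical helpers written for illustration, with `bce` averaging over all elements in the way `BCELoss` does with its default mean reduction.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def bce(probs, targets):
    """Mean binary cross-entropy over all elements."""
    terms = [-(t * math.log(p) + (1 - t) * math.log(1 - p))
             for p, t in zip(probs, targets)]
    return sum(terms) / len(terms)

def pair_loss(similarities, labels):
    """Rebuild the [1 - dy, dy] rows and one-hot targets, then apply sigmoid + BCE."""
    flat_probs, flat_targets = [], []
    for s, y in zip(similarities, labels):
        row = [1 - s, s]          # same layout as input_dy
        target = [0.0, 0.0]
        target[int(y)] = 1.0      # same layout as y_true_2
        flat_probs += [sigmoid(v) for v in row]
        flat_targets += target
    return bce(flat_probs, flat_targets)

# Hypothetical batches: similarities that match the labels exactly,
# and similarities that contradict them.
best_case = pair_loss([1.0, 0.0], [1, 0])
worst_case = pair_loss([0.0, 1.0], [1, 0])
print(best_case, worst_case)
```

Under these assumptions the sigmoid maps every entry of the row into roughly [0.5, 0.73], so even a perfectly matching batch gives a loss of about 0.50 rather than something close to 0.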

loss_fn = torch.nn.BCELoss()
#loss_fn = torch.nn.ContrastiveLoss(pos_margin=0, neg_margin=1)
lr = 1e-4
optimizer = optim.Adam(model.parameters(), lr=lr)
scheduler = lr_scheduler.StepLR(optimizer, 8, gamma=0.1, last_epoch=-1)
n_epochs = 80
log_interval = 1
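As a rough check on these scheduler settings, the step decay that `StepLR` documents (multiply the learning rate by `gamma` once every `step_size` scheduler steps) can be tabulated in plain Python. This is only a sketch of that closed form, not PyTorch's implementation, and `step_lr` is a hypothetical helper.

```python
def step_lr(base_lr, step_size, gamma, n_steps):
    """Learning rate after n_steps scheduler steps under step decay."""
    return base_lr * gamma ** (n_steps // step_size)

# Values from the configuration: lr=1e-4, step_size=8, gamma=0.1, 80 epochs.
checkpoints = list(range(0, 81, 8))
schedule = [step_lr(1e-4, 8, 0.1, n) for n in checkpoints]
for n, lr in zip(checkpoints, schedule):
    print(f"after {n:2d} steps: lr = {lr:.1e}")
```

With one scheduler step per epoch, the rate has dropped to about 1e-5 after 8 steps and to about 1e-14 after all 80.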

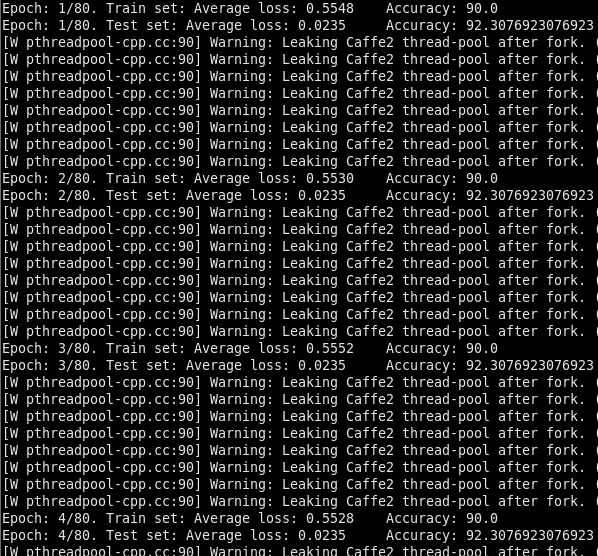

fit(train_loader, test_loader, model, loss_fn, optimizer, scheduler, n_epochs, cuda, log_interval, [metrics.Binary_accuracy()])

Fit function:

def fit(train_loader, val_loader, model, loss_fn, optimizer, scheduler, n_epochs, cuda, log_interval, metrics,
        start_epoch=0, message=None) -> object:
    for epoch in range(0, start_epoch):
        scheduler.step()

    for epoch in range(start_epoch, n_epochs):
        scheduler.step()

        # Train stage
        train_loss, metrics = train_epoch(train_loader, model, loss_fn, optimizer, cuda, log_interval, metrics)
        message = 'Epoch: {}/{}. Train set: Average loss: {:.4f}'.format(epoch + 1, n_epochs, train_loss)
        for metric in metrics:
            message += '\t{}: {}'.format(metric.name(), metric.value())

        val_loss, metrics = test_epoch(val_loader, model, loss_fn, cuda, metrics, log_interval)
        val_loss /= len(val_loader)
        message += '\nEpoch: {}/{}. Test set: Average loss: {:.4f}'.format(epoch + 1, n_epochs,
                                                                         val_loss)
        for metric in metrics:
            message += '\t{}: {}'.format(metric.name(), metric.value())

        print(message)
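To see which number ends up in the printed test message, the two divisions applied to `val_loss` (one at the end of `test_epoch`, one in `fit`) can be traced with made-up values. This is a sketch with three hypothetical batch losses, not output from the actual run.

```python
batch_losses = [0.5, 1.0, 1.5]   # hypothetical per-batch validation losses
n_batches = len(batch_losses)    # plays the role of len(val_loader)

val_loss = sum(batch_losses)     # accumulated inside the batch loop
val_loss /= n_batches            # test_epoch: val_loss /= (batch_idx + 1)
mean_loss = val_loss

val_loss /= n_batches            # fit: val_loss /= len(val_loader)
reported_loss = val_loss

print(mean_loss, reported_loss)
```

In this toy case the per-batch mean is 1.0 while the value that would be printed is about 0.33, so the reported test loss is the mean divided once more by the number of batches.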