Hello, I am working on transfer learning, trying to reduce the number of samples to use in the target training set.

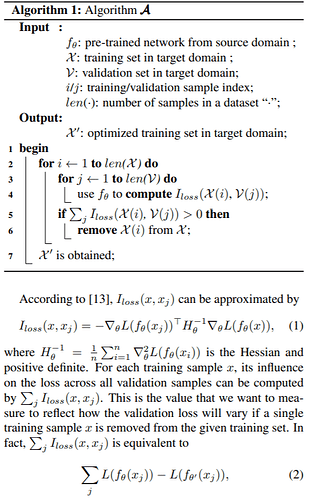

To do so, I am reimplementing the following algorithm, taken from the paper “Instance Based Deep Transfer Learning”, 2018, Wang et Al.:

Basically, for each training sample of the target, you compute the jacobian (gradient) of the loss w.r.t. all the parameters and multiply it by the Hessian of the loss function (averaged for all the samples of the training) and by all the gradients of the loss when the net is fed with a validation sample, accumulating them. If the final result is positive, you discard that sample.

My implementation is as follows:

def instance_selection(model, X_train, y_train, X_valid, y_valid, criterion):

# Computation of Hessian

print('Computing Hessian matrix...', end='')

hessian_matrix = compute_hessian(model, X_train, y_train, criterion)

print('done!')

selected_indices = []

for i, train_sample in enumerate(tqdm(X_train, desc='Instance selection')):

# Computing jacobian of the loss in the training sample

model.zero_grad()

train_sample = np.expand_dims(train_sample, axis=0)

train_sample = torch.Tensor(train_sample)

label = y_train[i]

output = model(train_sample)

label = torch.LongTensor([label])

loss = criterion(output, label)

# This step will populate the parameters of the network with the gradients

loss.backward()

# Iteration on the parameters of the network to get all the gradients

jacobian_i = []

for param in model.parameters():

jacobian_i.append(param.grad.view(-1).cpu().data.numpy())

jacobian_i = np.concatenate(jacobian_i).ravel()

# Computing intermediate product between Hessian and Jacobian of training

sample

intermediate = np.matmul(hessian_matrix, np.transpose(jacobian_i))

j_loss = 0

for j, valid_sample in enumerate(X_valid):

# Computing jacobian of the loss in the validation sample

model.zero_grad()

valid_sample = np.expand_dims(valid_sample, axis=0)

valid_sample = torch.Tensor(valid_sample)

label = y_valid[j]

output = model(valid_sample)

label = torch.LongTensor([label])

loss = criterion(output, label)

loss.backward()

jacobian_j = []

for param in model.parameters():

jacobian_j.append(param.grad.view(-1).cpu().data.numpy())

jacobian_j = np.concatenate(jacobian_j).ravel()

# Computing the multiplication between the jacobian of the validation sample

and the intermediate matrix

j_loss += np.matmul((jacobian_j*(-1)), intermediate)

# If j_loss is negative, I won't keep the sample in the training set

if j_loss <= 0:

selected_indices.append(i)

return selected_indices

Is there anyone who has already done a similar thing or can give me a feedback whether the implementation is correct?

Thanks a lot!