Hi,

As a sanity check, I am trying to overfit my model on a small dataset to see whether it is training correctly.

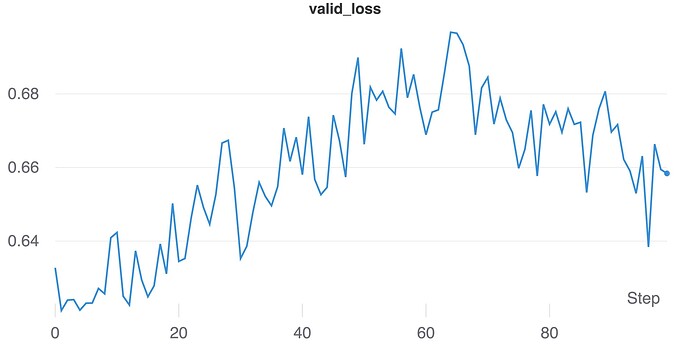

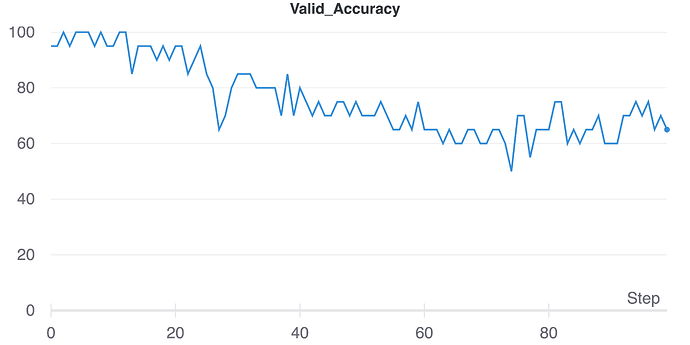

The results are different from what I expected: the loss does not get very small, and the accuracy decreases over time. Is this the expected result when overfitting on a small batch, or is there a bug in my training?

The graphs above show my validation loss and validation accuracy; the validation samples are the same as the training samples.

You might want to check that the data augmentations are the same across the training and validation sets. Beyond that, you might want to go down to just a single example first to check that the examples are indeed identical.
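
A minimal, framework-agnostic sketch of that comparison, assuming both datasets support `len()` and integer indexing. The name `first_mismatch` is hypothetical, and the pluggable `equal` argument is an assumption: for tensor samples you would pass a comparison built on something like `torch.equal` rather than plain `==`.

```python
from typing import Any, Callable, Optional, Sequence


def first_mismatch(
    train_ds: Sequence[Any],
    valid_ds: Sequence[Any],
    equal: Callable[[Any, Any], bool] = lambda a, b: a == b,
) -> Optional[int]:
    """Return the index of the first sample that differs between the two
    datasets, or None if every sample matches (hypothetical helper)."""
    if len(train_ds) != len(valid_ds):
        raise ValueError(f"length mismatch: {len(train_ds)} vs {len(valid_ds)}")
    for i in range(len(train_ds)):
        # If a random augmentation is still active in either pipeline,
        # the two samples at the same index will usually differ here.
        if not equal(train_ds[i], valid_ds[i]):
            return i
    return None
```

For list-like data, calling it on one-element slices first (e.g. `first_mismatch(train[:1], valid[:1])`) matches the idea of starting with a single example before checking the whole set.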


Okay, I turned off every augmentation and checked each sample. They are the same in both the training and validation sets.

So there must be a bug in my training loop, right? When overfitting, accuracy shouldn't decline after every iteration, and the loss should decrease.

That seems to be the case; you might want to check first whether a simple training loop with a very simple model shows the expected behavior.
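
The expected behavior can be sketched without any framework: a hypothetical logistic-regression model trained with full-batch gradient descent on four linearly separable points. The data, the `overfit` function, and the learning rate are illustrative assumptions, not the poster's setup. Because loss and accuracy are measured on the same samples used for training, with a small enough learning rate the loss should fall at every epoch and accuracy should reach 1.0.

```python
import math
from typing import List, Tuple

# Hypothetical toy data: 4 linearly separable 2-D points with 0/1 labels.
DATA: List[Tuple[Tuple[float, float], int]] = [
    ((2.0, 1.0), 1),
    ((1.0, 2.0), 1),
    ((-1.0, -2.0), 0),
    ((-2.0, -1.0), 0),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def overfit(data, epochs: int = 200, lr: float = 0.5) -> List[Tuple[float, float]]:
    """Full-batch gradient descent on logistic regression.

    Returns per-epoch (mean loss, accuracy), both measured on the SAME
    samples used for training, before that epoch's update.
    """
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    history = []
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        loss = 0.0
        correct = 0
        for (x1, x2), y in data:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # Binary cross-entropy; clamp p to avoid log(0).
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)
            loss -= y * math.log(p_safe) + (1 - y) * math.log(1.0 - p_safe)
            correct += int((p >= 0.5) == bool(y))
            # For BCE on a sigmoid output, dLoss/dlogit = p - y.
            grad_w[0] += (p - y) * x1
            grad_w[1] += (p - y) * x2
            grad_b += p - y
        history.append((loss / n, correct / n))
        # One gradient step on the mean loss.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return history


history = overfit(DATA)
print(history[0], history[-1])
```

If the same kind of check on a tiny fixed batch with your real model shows accuracy falling instead, the loop (rather than the data) is the place to look.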
