Hi all, I am reading this tutorial (Saving and loading models across devices in PyTorch — PyTorch Tutorials 2.0.0+cu117 documentation).

I wrote some test code to try the "Save on GPU, Load on CPU" case.

Here is the code; the torch version is 2.0.0:

import torch
import torch.nn as nn
import torch.optim as optim


class ToyModel(nn.Module):
    def __init__(self):
        super(ToyModel, self).__init__()
        self.net1 = nn.Linear(10, 10)
        self.relu = nn.ReLU()
        self.net2 = nn.Linear(10, 1)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


# Train on the GPU
device = "cuda:0"
model = ToyModel()
model = model.to(device)
loss_fn = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.001)
epoch = 10

for _ in range(epoch):
    optimizer.zero_grad()
    data = torch.randn((20, 10)).to(device)
    outputs = model(data)
    labels = torch.randn(20, 1).to(device)
    loss = loss_fn(outputs, labels)
    print(loss)
    loss.backward()
    optimizer.step()

# Save the GPU-resident state dict
torch.save(model.state_dict(), "test.pt")
print("finish")

# Load into a fresh model that lives on the CPU (no map_location given)
new_model = ToyModel()
new_model.load_state_dict(torch.load("test.pt"))

for k, v in new_model.state_dict().items():
    print(k, v)

I trained a toy model on the GPU, saved it, and then loaded it on the CPU without setting the map_location parameter, yet the code still works.

So does my test code count as "Save on GPU, Load on CPU"?

If so, why does the code work even though I did not set map_location when loading on the CPU?

If not, what exactly does "Save on GPU" mean?

If you don't specify map_location, torch.load tries to load each tensor onto the same device it was saved from. For example, in a multi-GPU setup, if you trained on cuda:1 and saved the model, then loading without map_location will put the tensors on cuda:1 by default.
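To make the difference concrete, here is a minimal sketch of loading with `map_location`. The helper name `load_on_cpu` and the temporary file `demo.pt` are made up for illustration, and a single `nn.Linear` layer stands in for the toy model. It saves from the GPU when one is available (falling back to the CPU so it still runs anywhere) and then checks which device the loaded tensors end up on.

```python
import os
import tempfile

import torch
import torch.nn as nn


def load_on_cpu(path):
    # map_location="cpu" remaps every saved tensor to the CPU while loading,
    # regardless of the device it was saved from.
    return torch.load(path, map_location="cpu")


# Save from the GPU when one is available; otherwise save from the CPU.
device = "cuda:0" if torch.cuda.is_available() else "cpu"
model = nn.Linear(10, 1).to(device)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.pt")  # hypothetical file name
    torch.save(model.state_dict(), path)
    state = load_on_cpu(path)

# Collect the device type of every loaded tensor.
devices = {name: tensor.device.type for name, tensor in state.items()}
print(devices)
```

Without `map_location`, the same `torch.load` call on a checkpoint saved from cuda:0 would first materialize the tensors on cuda:0.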

As for your experiment, I think it is being loaded onto cuda:0. You can check this by watching nvidia-smi: comment out the save part, run only the load part of the code, and monitor nvidia-smi. If the process occupies GPU memory, then the tensors are being loaded onto the GPU.

Thanks for your answer.

Yes, I added a test as you described (running only the load part) and found that the code does occupy memory on cuda:0. But my new_model is on the CPU and loads its parameters from "test.pt", and new_model.parameters() shows that all of its parameter tensors are on the CPU. Does that mean there are two copies of the parameter tensors from "test.pt" after loading, one on cuda:0 and one on the CPU, and the one on cuda:0 is never used?

Did you check the parameters after loading the model or before loading it?

It may be that you call .cuda() somewhere further down in the code, so the model shows as being on the CPU at the point above while nvidia-smi shows GPU usage because of the later section of the code.

Please share your code so that I can review it properly.

In practice, I call .to(device) or .cuda() after loading the model even though the model may already be on the GPU, just to avoid any complications.

Here is the code that runs only the load part:

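import time

import torch


def load_test():
    # ToyModel is the class defined in the question post
    new_model = ToyModel()
    for k, v in new_model.state_dict().items():
        print(k, v)

    # No map_location: tensors are restored to the device they were saved from
    load_result = torch.load("test.pt")
    print(load_result)

    new_model.load_state_dict(torch.load("test.pt"))
    print("finish load")
    time.sleep(15)  # leave time to watch nvidia-smi

    for k, v in new_model.state_dict().items():
        print(k, v)

    data = torch.randn((20, 10))
    print(new_model(data))

    # Model is on the CPU, data is on cuda:0, so this call raises a RuntimeError
    data = data.to("cuda:0")
    print(new_model(data))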

I am sure the model is on the CPU the whole time. The ToyModel class is the one defined in my question post, and test.pt is the same file. At the end, the code reports an error:

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cpu and cuda:0! (when checking argument for argument mat1 in method wrapper_CUDA_addmm)

I think model.load_state_dict(ordered_dict) automatically copies the tensors in ordered_dict to the model’s own device when loading across devices, and the map_location parameter saves the unnecessary space when

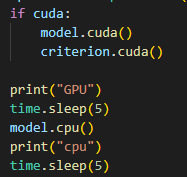

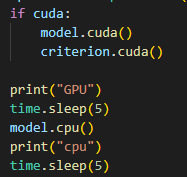
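The copying behaviour guessed at in this post can be checked on the CPU alone. This is a minimal sketch, not a statement about every PyTorch version or option: `source` stands in for the tensors returned by `torch.load`, `target` stands in for `new_model`, and both names are made up for illustration. It compares storage addresses before and after a default `load_state_dict` call to see whether the values are copied into the model's existing parameters rather than the parameters being replaced by the loaded tensors.

```python
import torch
import torch.nn as nn

source = nn.Linear(10, 1)  # stands in for the tensors returned by torch.load
target = nn.Linear(10, 1)  # stands in for new_model

# Remember where the target's weight storage lives before loading.
ptr_before = target.weight.data_ptr()

target.load_state_dict(source.state_dict())

# The parameter keeps its own storage, so the values were copied into it.
same_storage = target.weight.data_ptr() == ptr_before
# The parameter does not alias the source tensor's memory.
shares_memory = target.weight.data_ptr() == source.weight.data_ptr()
# The values themselves now match the source.
values_match = torch.equal(target.weight, source.weight)

print(same_storage, shares_memory, values_match)
```

If this holds across devices as well, a CPU model keeps CPU parameters after loading, and the cuda:0 tensors produced by a `torch.load` call without `map_location` are only a temporary source for the copy.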

I ran a small test.

Once you load the model onto the GPU and then move it to the CPU, the GPU memory is not freed immediately. I think PyTorch reserves the GPU memory by default (it keeps the memory cached for possible future allocations without actually using it).
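One way to look at this from inside Python, rather than through nvidia-smi, is to compare the memory held by live tensors with the memory PyTorch's caching allocator has reserved. This is a rough sketch: the helper `cuda_memory` is a made-up name, the tensor size is arbitrary, and the exact numbers depend on the GPU and PyTorch build.

```python
import torch


def cuda_memory(device_index=0):
    # Bytes held by live tensors vs. bytes kept by PyTorch's caching allocator.
    return (
        torch.cuda.memory_allocated(device_index),
        torch.cuda.memory_reserved(device_index),
    )


if torch.cuda.is_available():
    x = torch.randn(1024, 1024, device="cuda:0")
    print("tensor on GPU:", cuda_memory())
    x = x.cpu()  # the GPU copy is no longer referenced
    print("after .cpu():", cuda_memory())
    torch.cuda.empty_cache()  # hand cached blocks back to the driver
    print("after empty_cache():", cuda_memory())
else:
    print("CUDA not available; nothing to measure")
```

nvidia-smi also counts the CUDA context that the process creates, so it typically reports more memory than either of these counters.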

From the code's perspective, once you move the model to the CPU, it lives on the CPU and computes on the CPU.

As for the error: if you move the data to cuda:0, move the model to cuda:0 as well and there will be no problem. The error is caused by the data being on CUDA while the model is on the CPU.
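A minimal sketch of that fix, assuming a single `nn.Linear` layer in place of the toy model: choose one device, then move both the model and the input to it before the forward pass. The fallback to the CPU is only there so the snippet runs on machines without a GPU.

```python
import torch
import torch.nn as nn

# Pick one device and use it for both the model and the data.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = nn.Linear(10, 1).to(device)    # move the parameters
data = torch.randn(20, 10).to(device)  # move the input to the same device

out = model(data)
print(out.shape, out.device)
```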