Hi community,

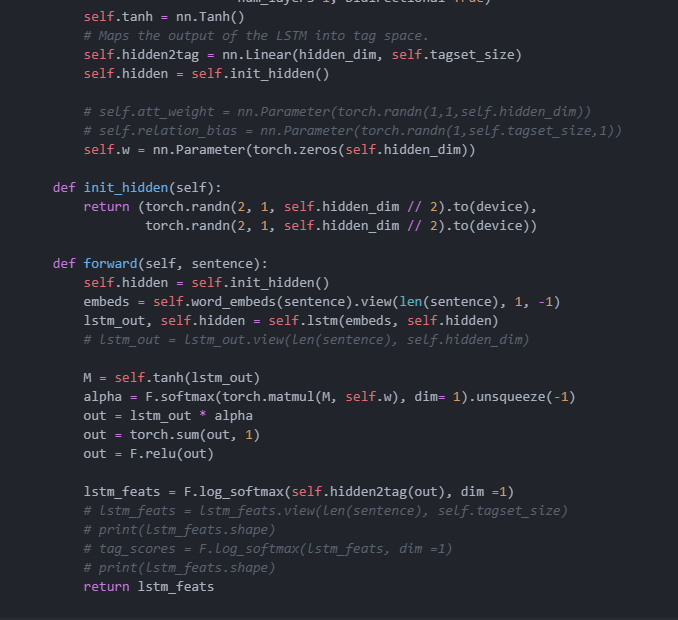

I am working on a bi-lstm+ attention NER model and I am following this paper: Long Short-Term Memory-Networks for Machine Reading (https://arxiv.org/pdf/1601.06733.pdf)

I just wonder am I implmenting correctly? And the performance is not improving the model, what else I should change? Thanks