Hallo again.

I studied your tutoril. I can say it’s helpful enough.

I made think I have make some progress with my problem.

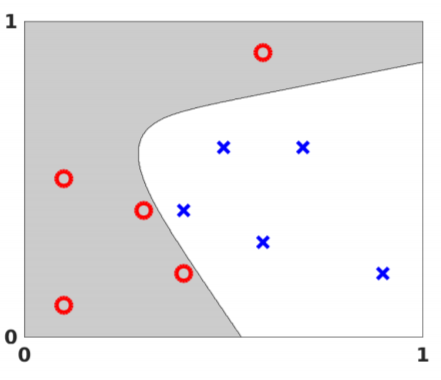

Here’s my model I built, I have a NN with 2 hidden layers and I want to use the sigmoid for my prediction. I have created the dataset with the points and the class 0 or 1 but I have come to a dead end.

Can you help please help me? Whats wrong? I can’t understand why the AttributeError: ‘data’ object has no attribute ‘len’ come on.

Also do you think that my code is close enough to the solution or I have messed up? Thank you in advance!

import torch

import numpy as np

import matplotlib.pyplot as plt

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import Dataset, DataLoader

class data():

def __init__(self):

x=np.array([[0.1,0.1], [0.3,0.4] , [0.1,0.5] , [0.6,0.9] , [0.4,0.2] , [0.6,0.3] , [0.5,0.6] , [0.9,0.2] , [0.4,0.4] ,[0.7,0.6]])

y=np.array([1,1,1,1,1,0,0,0,0,0])

self.y = torch.from_numpy(y).type(torch.LongTensor)

self.x = torch.from_numpy(x).type(torch.FloatTensor)

def __getitem__(self, index):

return self.x[index], self.y[index]

def __len__(self):

return self.len

class Net(nn.Module):

def __init__(self, D_in=2, H1=2, H2=3, D_out=2):

super(Net, self).__init__()

self.linear1 = nn.Linear(D_in, H1)

self.linear2 = nn.Linear(H1, H2)

self.linear3 = nn.Linear(H2, D_out)

# Prediction

def forward(self, x):

x = torch.sigmoid(self.linear1(x))

x = torch.sigmoid(self.linear2(x))

x = torch.sigmoid(self.linear3(x))

return x

def train(data_set, model, criterion, train_loader, optimizer, epochs=100):

LOSS = []

ACC = []

for epoch in range(epochs):

for x, y in train_loader:

optimizer.zero_grad()

yhat = model(x)

loss = criterion(yhat, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

LOSS.append(loss.item())

ACC.append(accuracy(model, data_set))

return LOSS

data_set=data()

model = Net()

learning_rate = 0.10

optimizer = torch.optim.SGD(model.parameters() ,lr=learning_rate)

train_loader = DataLoader(dataset=data_set, batch_size=1)

criterion = nn.MSELoss()

LOSS = train(data_set, model, criterion, train_loader, optimizer, epochs=100)

print('arrived here')