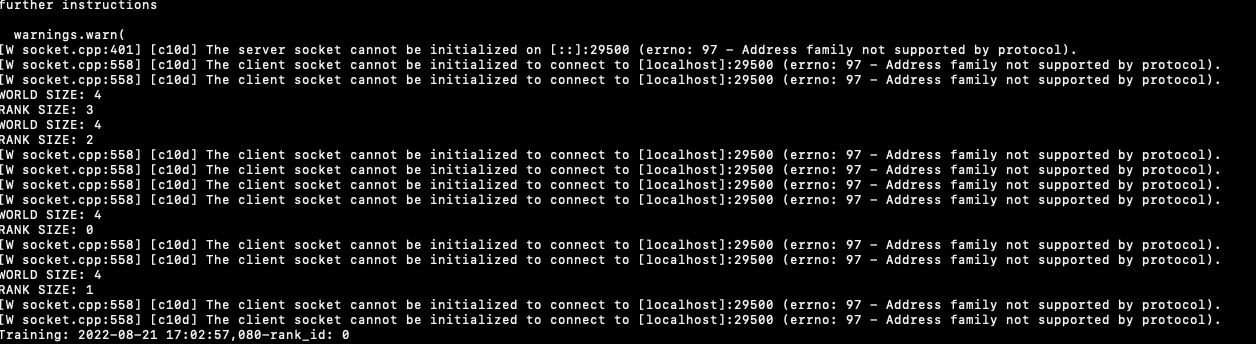

Why would I get an error message like this? I am setting the master address to localhost and leaving the master port at its default. I am using a single-node system with 4 GPUs. Any help would be appreciated. This is the command I run:

```
python -m torch.distributed.launch --nproc_per_node=4 --nnodes=1 --node_rank=0 --master_addr="localhost" train.py configs/check.py
```
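The actual error text is only in the screenshot, so the following is an assumption about the cause. With `--master_addr="localhost"` and no `--master_port`, the launcher uses its default port (29500). If another process is already holding that port, such as an earlier run that did not exit cleanly, the rendezvous can fail. Here is a minimal sketch that uses only the Python standard library to check whether that port is free and, if it is not, to pick an unused one. The helper names `port_is_free` and `find_free_port` are hypothetical:

```python
import socket


def port_is_free(host: str = "localhost", port: int = 29500) -> bool:
    """Return True if (host, port) can be bound, i.e. no other process holds it.

    29500 is assumed here to be the launcher's default master port.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            # EADDRINUSE (or similar): something else already owns the port.
            return False
        return True


def find_free_port(host: str = "localhost") -> int:
    """Ask the OS for an unused ephemeral port by binding to port 0."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind((host, 0))
        return s.getsockname()[1]


if __name__ == "__main__":
    if port_is_free():
        print("Port 29500 is free")
    else:
        # Suggest an alternative to pass to the launcher explicitly.
        print(f"Port 29500 is busy; try --master_port={find_free_port()}")
```

If the port turns out to be busy, you can either stop the leftover process or add an explicit `--master_port=<free port>` to the launch command. There is a small race between the check and the launch, so this is a diagnostic aid rather than a guarantee.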