I found that even after setting all the random seeds and the cuDNN flags, I still cannot get deterministic results. My seed and cuDNN settings are:

```python
import random

import numpy as np
import torch

# args holds the script's command-line arguments (parsed elsewhere)
random.seed(args.seed)
np.random.seed(args.seed)
torch.manual_seed(args.seed)
if torch.cuda.is_available():
    torch.cuda.manual_seed(args.seed)
    torch.cuda.manual_seed_all(args.seed)
torch.backends.cudnn.benchmark = False
torch.backends.cudnn.deterministic = True
```
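As a sanity check on what these calls cover, the sketch below re-seeds Python's `random` and NumPy twice and confirms the draws replay exactly. `seed_cpu_rngs` and `draw` are hypothetical helper names for illustration only; the point is that seeding fixes the random streams, not the order in which floating-point values are accumulated.

```python
import random

import numpy as np


def seed_cpu_rngs(seed):
    """Seed Python's and NumPy's global generators (hypothetical helper)."""
    random.seed(seed)
    np.random.seed(seed)


def draw(seed, n=5):
    """Re-seed, then take n draws from each generator."""
    seed_cpu_rngs(seed)
    py_draws = [random.random() for _ in range(n)]
    np_draws = np.random.rand(n).tolist()
    return py_draws, np_draws


first = draw(1234)
second = draw(1234)
# Same seed -> identical random streams, draw for draw.
print(first == second)  # True
```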

I use the following setup:

- Default tensor type: FloatTensor
- CUDA version: 9.0.176
- cuDNN version: 7102
- PyTorch version: 0.4.1.post2
- GPU: GTX 1080
- Python version: 3.5.6

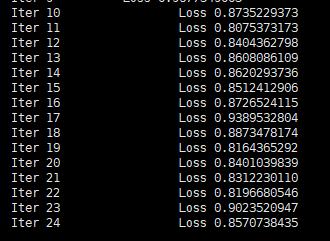

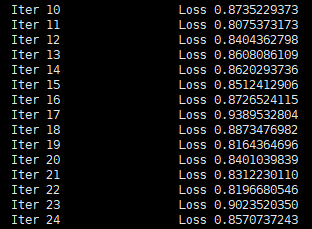

I ran an NLP task and compared the losses of two runs.

The losses of the two runs are identical from iteration 0 to 17; however, at iteration 18 one is 0.8873478174 and the other is 0.8873476982.
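The two values at iteration 18 differ only in the last bits of a 32-bit float. The sketch below (assuming NumPy is available) expresses the gap in float32 units in the last place (ULPs), using `np.spacing` to get the distance to the next representable float32.

```python
import numpy as np

# Loss values reported for the two runs at iteration 18.
loss_a = np.float32(0.8873478174)
loss_b = np.float32(0.8873476982)

# Distance between adjacent float32 values at this magnitude
# (2**-24 for values in [0.5, 1)).
ulp = np.spacing(loss_b)

# The gap between the two losses, measured in float32 ULPs.
gap_in_ulps = float((loss_a - loss_b) / ulp)
print(gap_in_ulps)  # 2.0
```

In other words, the two runs land on float32 values only two representable steps apart.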

Since this is an NLP task, I believe there is no randomness in the data preprocessing process. I have checked the DataLoader and left `num_workers` at its default setting. I also checked the data of the two runs, and it is identical.
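One way to make the "same data" check precise is to log a fingerprint of every batch in both runs and diff the logs. The sketch below hashes the dtype, shape and raw bytes of a NumPy array with `hashlib`; `batch_fingerprint` is a hypothetical helper, and PyTorch tensors would first need converting, e.g. with `.cpu().numpy()`.

```python
import hashlib

import numpy as np


def batch_fingerprint(batch):
    """Return a short SHA-1 digest of an array's dtype, shape and raw bytes."""
    arr = np.ascontiguousarray(batch)
    h = hashlib.sha1()
    h.update(str(arr.dtype).encode())
    h.update(str(arr.shape).encode())
    h.update(arr.tobytes())
    return h.hexdigest()[:12]


# Two identical token-id batches give the same fingerprint...
a = np.array([[1, 5, 9], [2, 6, 0]], dtype=np.int64)
b = a.copy()
print(batch_fingerprint(a) == batch_fingerprint(b))  # True

# ...while changing a single token changes it.
b[1, 2] = 7
print(batch_fingerprint(a) == batch_fingerprint(b))  # False
```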

I really want to know why this happens. I've been dealing with this problem for a few weeks, and it is driving me crazy.

I also searched for related discussions and found a topic suggesting that this issue is caused by FloatTensor and that switching to DoubleTensor fixes it. If that is true, is there any way to get deterministic results using FloatTensor?
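For context on the FloatTensor question: floating-point addition is not associative, so summing the same numbers in a different order can change the last bits. On GPUs, one source of such order changes is kernels that accumulate with atomic additions, whose order is not fixed; whether that applies to this model is an assumption. The sketch below (NumPy assumed) shows the effect in float32 and that float64 has the same property at a larger scale, so DoubleTensor typically makes such discrepancies smaller but does not by itself make order-dependent sums identical.

```python
import numpy as np


def sum_both_ways(a, b, c):
    """Return ((a + b) + c, a + (b + c)) as Python floats."""
    left = (a + b) + c
    right = a + (b + c)
    return float(left), float(right)


# float32: adjacent floats near 1e8 are 8 apart, so -1e8 + 1 rounds back to -1e8.
print(sum_both_ways(np.float32(1e8), np.float32(-1e8), np.float32(1.0)))  # (1.0, 0.0)

# float64 behaves the same way once the magnitudes are large enough
# (adjacent doubles near 1e17 are 16 apart).
print(sum_both_ways(np.float64(1e17), np.float64(-1e17), np.float64(1.0)))  # (1.0, 0.0)

# At the float32 magnitudes above, float64 still gets both orders right.
print(sum_both_ways(np.float64(1e8), np.float64(-1e8), np.float64(1.0)))  # (1.0, 1.0)
```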

I would really appreciate it if someone could discuss this with me.